Causal Inference for the Brave and True

02 - Randomised Experiments #

The Golden Standard #

In the previous session, we saw why and how association is different from causation. We also saw what is required to make association be causation.

\( E[Y|T=1] - E[Y|T=0] = \underbrace{E[Y_1 - Y_0|T=1]}_{ATT} + \underbrace{\{ E[Y_0|T=1] - E[Y_0|T=0] \}}_{BIAS} \)

To recap, association becomes causation if there is no bias. There will be no bias if \(E[Y_0|T=0]=E[Y_0|T=1]\) . In words, association will be causation if the treated and control are equal or comparable, except for their treatment. Or, in more technical words, when the outcome of the untreated is equal to the counterfactual outcome of the treated. Remember that this counterfactual outcome is the outcome of the treated group if they had not received the treatment.

I think we did an OK job explaining how to make association equal to causation in math terms. But that was only in theory. Now, we look at the first tool we have to make the bias vanish: Randomised Experiments. Randomised experiments randomly assign individuals in a population to a treatment or to a control group. The proportion that receives the treatment doesn’t have to be 50%. You could have an experiment where only 10% of your samples get the treatment.

Randomisation annihilates bias by making the potential outcomes independent of the treatment.

\( (Y_0, Y_1) \perp\!\!\!\perp T \)

This can be confusing at first (it was for me). But don’t worry, my brave and true fellow, I’ll explain it further. If the outcome is independent of the treatment, doesn’t this also imply that the treatment has no effect? Well, yes! But notice I’m not talking about the outcomes. Instead, I’m talking about the potential outcomes. The potential outcome is how the outcome would have been under treatment (\(Y_1\)) or under control (\(Y_0\)). In randomized trials, we don’t want the outcome to be independent of the treatment since we think the treatment causes the outcome. But we want the potential outcomes to be independent of the treatment.

Saying that the potential outcomes are independent of the treatment is saying that they would be, in expectation, the same in the treatment or the control group. In simpler terms, it means that treatment and control groups are comparable. Or that knowing the treatment assignment doesn’t give me any information about how the outcome was prior to the treatment. Consequently, \((Y_0, Y_1) \perp\!\!\!\perp T\) means that the treatment is the only thing generating a difference between the outcome in the treated and in the control group. To see this, notice that independence implies precisely that

\( E[Y_0|T=0]=E[Y_0|T=1]=E[Y_0] \)

Which, as we’ve seen, makes it so that

\( E[Y|T=1] - E[Y|T=0] = E[Y_1 - Y_0]=ATE \)
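It may help to spell out the intermediate steps. Starting from the decomposition at the top of this chapter, independence sets the bias term to zero. It also lets us drop the conditioning on \(T=1\) in the ATT, since \(E[Y_1|T=1]=E[Y_1]\) and \(E[Y_0|T=1]=E[Y_0]\):

\( \begin{aligned} E[Y|T=1] - E[Y|T=0] &= E[Y_1 - Y_0|T=1] + \{ E[Y_0|T=1] - E[Y_0|T=0] \} \\ &= E[Y_1 - Y_0|T=1] + 0 \\ &= E[Y_1 - Y_0] = ATE \end{aligned} \)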

So, randomization gives us a way to use a simple difference in means between treatment and control and call that the treatment effect.
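To make this concrete, here is a minimal simulation sketch. Everything in it is an assumption made for illustration: the hidden `discipline` variable, the constant `TRUE_EFFECT` of 2, and the two assignment rules. Because it is a simulation, we get to see both potential outcomes, which is never possible with real data.

```python
import random
from statistics import mean

random.seed(0)

N = 20_000
TRUE_EFFECT = 2.0  # hypothetical constant treatment effect

# A hidden trait drives the untreated potential outcome Y0.
discipline = [random.gauss(0, 1) for _ in range(N)]
y0 = [50 + 5 * d + random.gauss(0, 1) for d in discipline]
y1 = [y + TRUE_EFFECT for y in y0]


def diff_in_means(treated):
    """E[Y|T=1] - E[Y|T=0], using only the outcome each unit reveals."""
    y_treated = [y1[i] for i in range(N) if treated[i]]
    y_control = [y0[i] for i in range(N) if not treated[i]]
    return mean(y_treated) - mean(y_control)


def bias(treated):
    """E[Y0|T=1] - E[Y0|T=0]; computable only because we simulate Y0 for all."""
    return (mean(y0[i] for i in range(N) if treated[i])
            - mean(y0[i] for i in range(N) if not treated[i]))


# Self-selection: the more disciplined units pick the treatment.
self_selected = [d > 0 for d in discipline]
# Randomisation: each unit is treated with probability 10%.
randomised = [random.random() < 0.10 for _ in range(N)]

for name, t in (("self-selected", self_selected), ("randomised", randomised)):
    print(f"{name}: diff in means = {diff_in_means(t):.2f}, bias = {bias(t):.2f}")
```

Under self-selection the difference in means is the true effect plus a large bias. Under randomisation the bias should hover around zero, so the difference in means lands close to the true effect, even though only 10% of the units are treated.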

In a School Far, Far Away #

In 2020, the Coronavirus Pandemic forced businesses to adapt to social distancing. Delivery services became widespread, and big corporations shifted to a remote work strategy. With schools, it wasn’t different. Many started their own online repository of classes.

Four months into the crisis, many wonder if the introduced changes could be maintained. There is no question that online learning has its benefits. It is cheaper since it can save on real estate and transportation. It can also be more digital, leveraging world-class content from around the globe, not just from a fixed set of teachers. Despite all of that, we still need to answer if online learning has a negative or positive impact on the student’s academic performance.

One way to answer this is to take students from schools that give mostly online classes and compare them with students from schools that provide lectures in traditional classrooms. As we know by now, this is not the best approach. It could be that online schools attract only the well-disciplined students that do better than average even if the class were presential. In this case, we would have a positive bias, where the treated are academically better than the untreated: \(E[Y_0|T=1] > E[Y_0|T=0]\) .

Or on the flip side, it could be that online classes are cheaper and are composed chiefly of less wealthy students, who might have to work besides studying. In this case, these students would do worse than those from the presential schools even if they took presential classes. If this was the case, we would have a bias in the other direction, where the treated are academically worse than the untreated: \(E[Y_0|T=1] < E[Y_0|T=0]\) .

So, although we could make simple comparisons, it wouldn’t be compelling. One way or another, we could never be sure if there wasn’t any bias lurking around and masking our causal effect.

To solve that, we need to make the treated and untreated comparable: \(E[Y_0|T=1] = E[Y_0|T=0]\). One way to force this is by randomly assigning the online and presential classes to students. If we managed to do that, the treated and untreated would be, on average, the same, except for the treatment they receive.

Fortunately, some economists have done that for us. They’ve randomized classes so that some students were assigned to have face-to-face lectures, others to have only online lessons, and a third group to have a blended format of both online and face-to-face classes. They collected data on a standard exam at the end of the semester.

Here is what the data looks like:

| | gender | asian | black | hawaiian | hispanic | unknown | white | format_ol | format_blended | falsexam |
|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 1.0 | 0 | 0.0 | 63.29997 |
| 1 | 1 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 1.0 | 0 | 0.0 | 79.96000 |
| 2 | 1 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 1.0 | 0 | 1.0 | 83.37000 |
| 3 | 1 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 1.0 | 0 | 1.0 | 90.01994 |
| 4 | 1 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 1.0 | 1 | 0.0 | 83.30000 |

We can see that we have 323 samples. It’s not exactly big data, but something we can work with. To estimate the causal effect, we can simply compute the mean score for each of the treatment groups.

| class_format | gender | asian | black | hawaiian | hispanic | unknown | white | format_ol | format_blended | falsexam |
|---|---|---|---|---|---|---|---|---|---|---|
| blended | 0.550459 | 0.217949 | 0.102564 | 0.025641 | 0.012821 | 0.012821 | 0.628205 | 0.0 | 1.0 | 77.093731 |
| face_to_face | 0.633333 | 0.202020 | 0.070707 | 0.000000 | 0.010101 | 0.000000 | 0.717172 | 0.0 | 0.0 | 78.547485 |
| online | 0.542553 | 0.228571 | 0.028571 | 0.014286 | 0.028571 | 0.000000 | 0.700000 | 1.0 | 0.0 | 73.635263 |

Yup. It’s that simple. We can see that face-to-face classes yield a 78.55 average score, while online courses yield a 73.64 average score. Not so good news for the proponents of online learning. The \(ATE\) for an online class is thus -4.91. This means that online classes cause students to perform about 5 points lower, on average. That’s it. You don’t need to worry that online courses might have poorer students that can’t afford face-to-face classes or, for that matter, you don’t have to worry that the students from the different treatments are different in any way other than the treatment they received. By design, the random experiment is made to wipe out those differences.
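As a rough sketch of the computation, the snippet below collapses the two format indicators into a single label and averages the exam score by group. It uses only the five preview rows shown earlier, so its group means are not the full-sample ones. The helper `class_format` is a name I made up for this illustration. The last lines recompute the ATE from the full-sample means reported in the table.

```python
from collections import defaultdict
from statistics import mean

# The five preview rows: (format_ol, format_blended, falsexam).
rows = [
    (0, 0.0, 63.29997),
    (0, 0.0, 79.96000),
    (0, 1.0, 83.37000),
    (0, 1.0, 90.01994),
    (1, 0.0, 83.30000),
]


def class_format(format_ol, format_blended):
    """Collapse the two indicator columns into one label (hypothetical helper)."""
    if format_ol:
        return "online"
    if format_blended:
        return "blended"
    return "face_to_face"


# Group the exam scores by class format and average them.
scores = defaultdict(list)
for format_ol, format_blended, falsexam in rows:
    scores[class_format(format_ol, format_blended)].append(falsexam)

preview_means = {fmt: mean(vals) for fmt, vals in scores.items()}
print(preview_means)

# Full-sample group means, copied from the table above.
full_means = {"blended": 77.093731, "face_to_face": 78.547485, "online": 73.635263}
ate_online = full_means["online"] - full_means["face_to_face"]
print(f"ATE of online vs face-to-face: {ate_online:.2f}")
```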

For this reason, a good sanity check to see if the randomisation was done right (or if you are looking at the correct data) is to check if the treated are equal to the untreated in pre-treatment variables. Our data has information on gender and ethnicity to see if they are similar across groups. We can say that they look pretty similar for the gender, asian, hispanic, and white variables. The black variable, however, seems a little bit different. This draws attention to what happens with a small dataset. Even under randomisation, it could be that, by chance, one group is different from another. In large samples, this difference tends to disappear.
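The claim about sample size can be checked with a quick simulation. This is only a sketch under made-up assumptions: a binary pre-treatment covariate with a hypothetical population share of 7%, and a fair coin deciding the treatment. It measures the average gap in the covariate's share between the two randomised groups.

```python
import random

random.seed(1)


def imbalance(n, share=0.07):
    """Absolute gap in a binary covariate's share between two randomised groups.

    `share` is a hypothetical population proportion, chosen for illustration.
    """
    covariate = [random.random() < share for _ in range(n)]
    treated = [random.random() < 0.5 for _ in range(n)]
    in_treated = [c for c, t in zip(covariate, treated) if t]
    in_control = [c for c, t in zip(covariate, treated) if not t]
    return abs(sum(in_treated) / len(in_treated)
               - sum(in_control) / len(in_control))


def average_imbalance(n, reps=100):
    """Average the gap over many repeated randomisations of n units."""
    return sum(imbalance(n) for _ in range(reps)) / reps


results = {n: average_imbalance(n) for n in (100, 1_000, 10_000)}
for n, gap in results.items():
    print(f"n={n:>6}: average gap = {gap:.4f}")
```

The gap should shrink as `n` grows, which is the sense in which chance imbalances tend to disappear in large samples.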

The Ideal Experiment #

Randomised experiments or Randomised Controlled Trials (RCT) are the most reliable way to get causal effects. It’s a straightforward technique and absurdly convincing. It is so powerful that most countries have it as a requirement for showing the effectiveness of new medicine. To make a terrible analogy, you can think of RCT as Aang, from Avatar: The Last Airbender, while other techniques are more like Sokka. Sokka is cool and can pull some neat tricks here and there, but Aang can bend the four elements and connect with the spiritual world. Think of it this way, if we could, RCT would be all we would ever do to uncover causality. A well designed RCT is the dream of any scientist.

Unfortunately, they tend to be either very expensive or just plain unethical. Sometimes, we simply can’t control the assignment mechanism. Imagine yourself as a physician trying to estimate the effect of smoking during pregnancy on baby weight at birth. You can’t simply force a random portion of moms to smoke during pregnancy. Or say you work for a big bank, and you need to estimate the impact of the credit line on customer churn. It would be too expensive to give random credit lines to your customers. Or that you want to understand the impact of increasing the minimum wage on unemployment. You can’t simply assign countries to have one or another minimum wage. You get the point.

We will later see how to lower the randomisation cost by using conditional randomisation, but there is nothing we can do about unethical or unfeasible experiments. Still, whenever we deal with causal questions, it is worth thinking about the ideal experiment. Always ask yourself, if you could, what would be the perfect experiment you would run to uncover this causal effect? This tends to shed some light on how we can discover the causal effect even without the ideal experiment.

The Assignment Mechanism #

In a randomised experiment, the mechanism that assigns units to one treatment or the other is, well, random. As we will see later, all causal inference techniques will somehow try to identify the assignment mechanisms of the treatments. When we know for sure how this mechanism behaves, causal inference will be much more confident, even if the assignment mechanism isn’t random.

Unfortunately, the assignment mechanism can’t be discovered by simply looking at the data. For example, if you have a dataset where higher education correlates with wealth, you can’t know for sure which one caused which by just looking at the data. You will have to use your knowledge about how the world works to argue in favor of a plausible assignment mechanism. Is it the case that schools educate people, making them more productive and leading them to higher-paying jobs? Or, if you are pessimistic about education, you can say that schools do nothing to increase productivity, and this is just a spurious correlation because only wealthy families can afford to have a kid get a higher degree.

In causal questions, we usually can argue in both ways: that X causes Y, or that it is a third variable Z that causes both X and Y, and hence the X and Y correlation is just spurious. For this reason, knowing the assignment mechanism leads to a much more convincing causal answer. This is also what makes causal inference so exciting. While applied ML is usually just pressing some buttons in the proper order, applied causal inference requires you to seriously think about the mechanism generating that data.

Key Ideas #

We looked at how randomised experiments are the simplest and most effective way to uncover causal impact. They do this by making the treatment and control groups comparable. Unfortunately, we can’t do randomised experiments all the time, but it is still helpful to think about what ideal experiment we would run if we could.

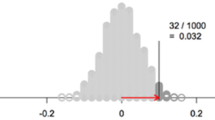

Someone familiar with statistics might be protesting right now that I didn’t look at the variance of my causal effect estimate. How can I know that a 4.91-point decrease is not due to chance? In other words, how can I know if the difference is statistically significant? And they would be right. Don’t worry. I intend to review some statistical concepts next.

References #

I like to think of this entire book as a tribute to Joshua Angrist, Alberto Abadie and Christopher Walters for their amazing Econometrics class. Most of the ideas here are taken from their classes at the American Economic Association. Watching them is what is keeping me sane during this tough year of 2020.

Cross-Section Econometrics

Mastering Mostly Harmless Econometrics

I’d also like to reference the amazing books from Angrist. They have shown me that Econometrics, or ‘Metrics as they call it, is not only extremely useful but also profoundly fun.

Mostly Harmless Econometrics

Mastering ‘Metrics

My final reference is Miguel Hernan and Jamie Robins’ book. It has been my trustworthy companion in the most thorny causal questions I had to answer.

Causal Inference Book

The data used here is from a study of Alpert, William T., Kenneth A. Couch, and Oskar R. Harmon. 2016. “A Randomized Assessment of Online Learning” . American Economic Review, 106 (5): 378-82.

Contribute #

Causal Inference for the Brave and True is an open-source material on causal inference, the statistics of science. Its goal is to be accessible monetarily and intellectually. It uses only free software based on Python. If you found this book valuable and want to support it, please go to Patreon . If you are not ready to contribute financially, you can also help by fixing typos, suggesting edits, or giving feedback on passages you didn’t understand. Go to the book’s repository and open an issue . Finally, if you liked this content, please share it with others who might find it helpful and give it a star on GitHub .

Introduction to Statistics and Data Science

Chapter 7 Randomization and Causality

In this chapter we kick off the third segment of this book: statistical theory. Up until this point, we have focused only on descriptive statistics and exploring the data we have in hand. Very often the data available to us is observational data – data that is collected via a survey in which nothing is manipulated or via a log of data (e.g., scraped from the web). As a result, any relationship we observe is limited to our specific sample of data, and the relationships are considered associational. In this chapter we introduce the idea of making inferences through a discussion of causality and randomization.

Needed Packages

Let’s load all the packages needed for this chapter (this assumes you’ve already installed them). If needed, read Section 1.3 for information on how to install and load R packages.

7.1 Causal Questions

What if we wanted to understand not just if X is associated with Y, but if X causes Y? Examples of causal questions include:

- Does smoking cause cancer?

- Do after school programs improve student test scores?

- Does exercise make people happier?

- Does exposure to abstinence only education lead to lower pregnancy rates?

- Does breastfeeding increase baby IQs?

Importantly, note that while these are all causal questions, they do not all directly use the word cause. Other words that imply causality include:

- Improve

- Increase / decrease

- Lead to

- Make

In general, the tell-tale sign that a question is causal is if the analysis is used to make an argument for changing a procedure, policy, or practice.

7.2 Randomized experiments

The gold standard for understanding causality is the randomized experiment. For the sake of this chapter, we will focus on experiments in which people are randomized to one of two conditions: treatment or control. Note, however, that this is just one scenario; for example, schools, offices, countries, states, households, animals, cars, etc. can all be randomized as well, and can be randomized to more than two conditions.

What do we mean by random? Be careful here, as the word “random” is used colloquially differently than it is statistically. When we use the word random in this context, we mean:

- Every person (or unit) has some chance (i.e., a non-zero probability) of being selected into the treatment or control group.

- The selection is based upon a random process (e.g., names out of a hat, a random number generator, rolls of dice, etc.)

In practice, a randomized experiment involves several steps.

- Half of the sample of people is randomly assigned to the treatment group (T), and the other half is assigned to the control group (C).

- Those in the treatment group receive a treatment (e.g., a drug) and those in the control group receive something else (e.g., business as usual, a placebo).

- Outcomes (Y) in the two groups are observed for all people.

- The effect of the treatment is calculated using a simple regression model, \[\hat{y} = b_0 + b_1T \] where \(T\) equals 1 when the individual is in the treatment group and 0 when they are in the control group. Note that using the notation introduced in Section 5.2.2, this would be the same as writing \(\hat{y} = b_0 + b_1\mathbb{1}_{\mbox{Trt}}(x)\). We will stick with the \(T\) notation for now, because this is more common in randomized experiments in practice.

For this simple regression model, \(b_1 = \bar{y}_T - \bar{y}_C\) is the observed “treatment effect”, where \(\bar{y}_T\) is the average of the outcomes in the treatment group and \(\bar{y}_C\) is the average in the control group. This means that the “treatment effect” is simply the difference between the treatment and control group averages.
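The equality between the regression slope and the difference in group averages can be verified numerically. The sketch below uses Python rather than R, and the six outcomes are made-up numbers for illustration only. It fits the simple regression by the usual least-squares formulas and compares \(b_1\) with \(\bar{y}_T - \bar{y}_C\).

```python
from statistics import mean

# Hypothetical outcomes for six people; T = 1 means treatment, T = 0 control.
T = [1, 1, 1, 0, 0, 0]
y = [12.0, 15.0, 11.0, 9.0, 10.0, 8.0]


def ols_simple(x, y):
    """Least-squares intercept and slope for y_hat = b0 + b1 * x."""
    x_bar, y_bar = mean(x), mean(y)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    b1 = sxy / sxx
    b0 = y_bar - b1 * x_bar
    return b0, b1


b0, b1 = ols_simple(T, y)
y_bar_T = mean(yi for ti, yi in zip(T, y) if ti == 1)
y_bar_C = mean(yi for ti, yi in zip(T, y) if ti == 0)

print(f"b0 = {b0:.3f}, control mean = {y_bar_C:.3f}")
print(f"b1 = {b1:.3f}, difference in means = {y_bar_T - y_bar_C:.3f}")
```

With a binary \(T\), the intercept \(b_0\) is the control group average and the slope \(b_1\) is the gap between the two group averages.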

7.2.1 Random processes in R

There are several functions in R that mimic random processes. You have already seen one example in Chapters 5 and 6 when we used sample_n to randomly select a specified number of rows from a dataset. The function rbernoulli() is another example, which allows us to mimic the results of a series of random coin flips. The first argument in the rbernoulli() function, n, specifies the number of trials (in this case, coin flips), and the argument p specifies the probability of “success” for each trial. In our coin flip example, we can define “success” to be when the coin lands on heads. If we’re using a fair coin then the probability it lands on heads is 50%, so p = 0.5.

Sometimes a random process can give results that don’t look random. For example, even though any given coin flip has a 50% chance of landing on heads, it’s possible to observe many tails in a row, just due to chance. In the example below, 10 coin flips resulted in only 3 heads, and the first 6 flips were tails. Note that TRUE corresponds to the notion of “success”, so here TRUE = heads and FALSE = tails.

Importantly, just because the results don’t look random, does not mean that the results aren’t random. If we were to repeat this random process, we will get a different set of random results.

Random processes can appear unstable, particularly if they are done only a small number of times (e.g. only 10 coin flips), but if we were to conduct the coin flip procedure thousands of times, we would expect the results to stabilize and see on average 50% heads.
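The same idea can be sketched outside of R. The Python snippet below is an analogue written for illustration, not the chapter's own code: `flip_coins` is a hypothetical helper that plays the role of a series of Bernoulli trials, with `True` standing for heads.

```python
import random

random.seed(42)


def flip_coins(n, p=0.5):
    """Return n simulated coin flips; True means heads ("success")."""
    return [random.random() < p for _ in range(n)]


few = flip_coins(10)
many = flip_coins(100_000)

print(few)
print(f"share of heads in 10 flips:      {sum(few) / len(few):.2f}")
print(f"share of heads in 100,000 flips: {sum(many) / len(many):.4f}")
```

Ten flips can easily land far from 50% heads, while the share over many thousands of flips should settle very close to it.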

Oftentimes when running a randomized experiment in practice, you want to ensure that exactly half of your participants end up in the treatment group. In this case, you don’t want to flip a coin for each participant, because just by chance, you could end up with 63% of people in the treatment group, for example. Instead, you can imagine each participant having an ID number, which is then randomly sorted or shuffled. You could then assign the first half of the randomly sorted ID numbers to the treatment group, for example. R has many ways of mimicking this type of random assignment process as well, such as the randomizr package.
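The shuffle-and-split procedure described above can be sketched as follows. This is a Python illustration, not the randomizr package: `assign_half` is a hypothetical helper, and the 335 ID numbers are just an example count.

```python
import random


def assign_half(ids, seed=None):
    """Shuffle the IDs and put the first half in treatment (1), the rest in control (0)."""
    ids = list(ids)
    rng = random.Random(seed)
    shuffled = ids[:]
    rng.shuffle(shuffled)
    n_treat = len(shuffled) // 2  # with an odd count, control gets the extra unit
    treated = set(shuffled[:n_treat])
    return {i: int(i in treated) for i in ids}


assignment = assign_half(range(1, 336), seed=7)
n_treated = sum(assignment.values())
n_control = len(assignment) - n_treated
print(n_treated, n_control)  # 167 168
```

Unlike independent coin flips, this fixes the group sizes in advance. Only which IDs land in each group is left to chance.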

7.3 Omitted variables

In a randomized experiment, we showed in Section 7.2 that we can calculate the estimated causal effect ( \(b_1\) ) of a treatment using a simple regression model.

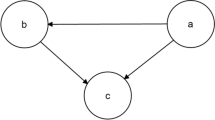

Why can’t we use the same model to determine causality with observational data? Recall our discussion from Section 5.3.2. We have to be very careful not to make unwarranted causal claims from observational data, because there may be an omitted variable (Z), also known as a confounder.

Here are some examples:

- There is a positive relationship between sales of ice cream (X) from street vendors and crime (Y). Does this mean that eating ice cream causes increased crime? No. The omitted variable is the season and weather (Z). That is, there is a positive relationship between warm weather (Z) and ice cream consumption (X) and between warm weather (Z) and crime (Y).

- Students that play an instrument (X) have higher grades (Y) than those that do not. Does this mean that playing an instrument causes improved academic outcomes? No. Some omitted variables here could be family socio-economic status and student motivation. That is, there is a positive relationship between student motivation (and a family with resources) (Z) and likelihood of playing an instrument (X) and between motivation / resources and student grades (Y).

- Countries that eat a lot of chocolate (X) also win the most Nobel Prizes (Y). Does this mean that higher chocolate consumption leads to more Nobel Prizes? No. The omitted variable here is a country’s wealth (Z). Wealthier countries win more Nobel Prizes and also consume more chocolate.

Examples of associations that are misinterpreted as causal relationships abound. To see more examples, check out this website: https://www.tylervigen.com/spurious-correlations .

7.4 The magic of randomization

If omitted variables / confounders are such a threat to determining causality in observational data, why aren’t they also a threat in randomized experiments?

The answer is simple: randomization. Because people are randomized to treatment and control groups, on average there is no difference between these two groups on any characteristics other than their treatment.

This means that before the treatment is given, on average the two groups (T and C) are equivalent to one another on every observed and unobserved variable. For example, the two groups should be similar in all pre-treatment variables: age, gender, motivation levels, heart disease, math ability, etc. Thus, when the treatment is assigned and implemented, any differences between outcomes can be attributed to the treatment.

7.4.1 Randomization Example

Let’s see the magic of randomization in action. Imagine that we have a promising new curriculum for teaching math to Kindergarteners, and we want to know whether or not the curriculum is effective. Let’s explore how a randomized experiment would help us test this. First, we’ll load in a dataset called ed_data . This data originally came from the Early Childhood Longitudinal Study (ECLS) program but has been adapted for this example. Let’s take a look at the data.

It includes information on 335 Kindergarten students: indicator variables for whether they are female or minority students, information on their parents’ highest level of education, a continuous measure of their socio-economic status (SES), and their reading and math scores. For our purposes, we will assume that these are all pre-treatment variables that are measured on students at the beginning of the year, before we conduct our (hypothetical) randomized experiment. We also have included two variables Trt_rand and Trt_non_rand for demonstration purposes, which we will describe below.

In order to conduct our randomized experiment, we could randomly assign half of the Kindergarteners to the treatment group to receive the new curriculum, and the other half of the students to the control group to receive the “business as usual” curriculum. Trt_rand is the result of this random assignment, and is an indicator variable for whether the student is in the treatment group (Trt_rand == 1) or the control group (Trt_rand == 0). By inspecting this variable, we can see that 167 students were assigned to treatment and 168 were assigned to control.

Remember that because this treatment assignment was random, we don’t expect a student’s treatment status to be correlated with any of their other pre-treatment characteristics. In other words, students in the treatment and control groups should look approximately the same on average. Looking at the means of all the numeric variables by treatment group, we can see that this is true in our example. Note how the summarise_if function is working here; if a variable in the dataset is numeric, then it is summarized by calculating its mean.

Both the treatment and control groups appear to be approximately the same on average on the observed characteristics of gender, minority status, SES, and pre-treatment reading and math scores. Note that since FEMALE is coded as 0 - 1, the “mean” is simply the proportion of students in the dataset that are female. The same is true for MINORITY .

In our hypothetical randomized experiment, after randomizing students into the treatment and control groups, we would then implement the appropriate (new or business as usual) math curriculum throughout the school year. We would then measure student math scores again at the end of the year, and if we observed that the treatment group was scoring higher (or lower) on average than the control group, we could attribute that difference entirely to the new curriculum. We would not have to worry about other omitted variables being the cause of the difference in test scores, because randomization ensured that the two groups were equivalent on average on all pre-treatment characteristics, both observed and unobserved.

In comparison, in an observational study, the two groups are not equivalent on these pre-treatment variables. In the same example above, let us imagine that, instead of being randomly assigned to treatment, students with lower SES are assigned to the new specialized curriculum (Trt_non_rand = 1), and those with higher SES are assigned to the business as usual curriculum (Trt_non_rand = 0). The indicator variable Trt_non_rand is the result of this non-random treatment group assignment process.

In this case, the table of comparisons between the two groups looks quite different:

There are somewhat large differences between the treatment and control group on several pre-treatment variables in addition to SES (e.g. % minority, and reading and math scores). Notice that the two groups still appear to be balanced in terms of gender. This is because gender is in general not associated with SES. However, minority status and test scores are both correlated with SES, so assigning treatment based on SES (instead of via a random process) results in an imbalance on those other pre-treatment variables. Therefore, if we observed differences in test scores at the end of the year, it would be difficult to disambiguate whether the differences were caused by the intervention or due to some of these other pre-treatment differences.

7.4.2 Estimating the treatment effect

Imagine that the truth about this new curriculum is that it raises student math scores by 10 points, on average. We can use R to mimic this process and randomly generate post-test scores that raise the treatment group’s math scores by 10 points on average, but leave the control group math scores largely unchanged. Note that we will never know the true treatment effect in real life - the treatment effect is what we’re trying to estimate; this is for demonstration purposes only.

We use another random process function in R, rnorm() to generate these random post-test scores. Don’t worry about understanding exactly how the code below works, just note that in both the Trt_rand and Trt_non_rand case, we are creating post-treatment math scores that increase a student’s score by 10 points on average, if they received the new curriculum.

By looking at the first 10 rows of this data in Table 7.1 , we can convince ourselves that both the MATH_post_trt_rand and MATH_post_trt_non_rand scores reflect this truth that the treatment raises test scores by 10 points, on average. For example, we see that for student 1, they were assigned to the treatment group in both scenarios and their test scores increased from about 18 to about 28. Student 2, however, was only assigned to treatment in the second scenario, and their test scores increased from about 31 to 41, but in the first scenario since they did not receive the treatment, their score stayed at about 31. Remember that here we are showing two hypothetical scenarios that could have occurred for these students - one if they were part of a randomized experiment and one where they were part of an observational study - but in real life, the study would only be conducted one way on the students and not both.

| ID | MATH_pre | Trt_rand | MATH_post_trt_rand | Trt_non_rand | MATH_post_trt_non_rand |
|---|---|---|---|---|---|
| 1 | 18.7 | 1 | 28.4 | 1 | 28.9 |
| 2 | 30.6 | 0 | 31.2 | 1 | 40.8 |
| 3 | 31.6 | 0 | 32.2 | 0 | 31.3 |
| 4 | 31.4 | 0 | 32.0 | 1 | 41.6 |
| 5 | 24.2 | 1 | 34.0 | 1 | 34.4 |
| 6 | 49.8 | 1 | 59.5 | 0 | 49.5 |
| 7 | 27.1 | 0 | 27.7 | 1 | 37.3 |
| 8 | 27.4 | 0 | 27.9 | 1 | 37.5 |
| 9 | 25.1 | 1 | 34.9 | 1 | 35.3 |
| 10 | 41.9 | 1 | 51.6 | 0 | 41.6 |

Let’s examine how students in each group performed on the post-treatment math assessment on average in the first scenario where they were randomly assigned (i.e. using Trt_rand and MATH_post_trt_rand ).

Remember that in a randomized experiment, we calculate the treatment effect by simply taking the difference in the group averages (i.e. \(\bar{y}_T - \bar{y}_C\) ), so here our estimated treatment effect is \(49.6 - 39.6 = 10.0\) . Recall that we said this could be estimated using the simple linear regression model \(\hat{y} = b_0 + b_1T\) . We can fit this model in R to verify that our estimated treatment effect is \(b_1 = 10.0\) .

Let’s also look at the post-treatment test scores by group for the non-randomized experiment case.

Note that even though the treatment raised student scores by 10 points on average, in the observational case we estimate the treatment effect is much smaller. This is because treatment was confounded with SES and other pre-treatment variables, so we could not obtain an accurate estimate of the treatment effect.
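The contrast between the two scenarios can be reproduced with a small simulation. The sketch below is written in Python and does not use ed_data: the SES distribution, the link between SES and pre-test scores, and the noise levels are all assumptions chosen for illustration. Only the true effect of 10 points and the rule "lower SES gets the new curriculum" follow the text.

```python
import random
from statistics import mean

random.seed(3)

N = 10_000
TRUE_EFFECT = 10.0  # the "truth" we pretend to know, as in the text

# Hypothetical data: SES is standard normal and raises pre-test math scores.
ses = [random.gauss(0, 1) for _ in range(N)]
math_pre = [40 + 5 * s + random.gauss(0, 5) for s in ses]


def post_scores(treatment):
    """Post-test score: pre-test score, plus 10 points if treated, plus noise."""
    return [pre + TRUE_EFFECT * t + random.gauss(0, 1)
            for pre, t in zip(math_pre, treatment)]


def diff_in_means(y, treatment):
    """Average outcome of the treated minus average outcome of the controls."""
    return (mean(yi for yi, t in zip(y, treatment) if t == 1)
            - mean(yi for yi, t in zip(y, treatment) if t == 0))


# Scenario 1: a fair coin decides. Scenario 2: lower SES gets the new curriculum.
trt_rand = [int(random.random() < 0.5) for _ in range(N)]
trt_non_rand = [int(s < 0) for s in ses]

est_rand = diff_in_means(post_scores(trt_rand), trt_rand)
est_non_rand = diff_in_means(post_scores(trt_non_rand), trt_non_rand)

print(f"randomized estimate:     {est_rand:.2f}")
print(f"non-randomized estimate: {est_non_rand:.2f}")
```

In this setup the randomized estimate should land near 10, while the non-randomized one is pulled well below it, because the treated students started the year with lower scores.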

7.5 If you know Z, what about multiple regression?

In the previous sections, we made clear that you cannot calculate the causal effect of a treatment using a simple linear regression model unless you have random assignment. What about a multiple regression model?

The answer here is more complicated. We’ll give you an overview, but note that this is a tiny sliver of an introduction and that there is an entire field of methods devoted to this problem. The field is called causal inference methods and focuses on the conditions under which, and the methods by which, you can calculate causal effects in observational studies.

Recall, we said before that in an observational study, the reason you can’t attribute causality between X and Y is because the relationship is confounded by an omitted variable Z. What if we included Z in the model (making it no longer omitted), as in:

\[\hat{y} = b_0 + b_1T + b_2Z\]

As we learned in Chapter 6, we can now interpret the coefficient \(b_1\) as the estimated effect of the treatment on outcomes, holding constant (or adjusting for) Z.

Importantly, the relationship between T and Y, adjusting for Z, can be similar to or different from the relationship between T and Y alone. In advance, you simply cannot know which it will be.

Let’s again look at our model fit1_non_rand that looked at the relationship between treatment and math scores, and compare it to a model that adjusts for the confounding variable SES.

The two models give quite different indications of how effective the treatment is. In the first model, the estimate of the treatment effect is 2.176, but in the second model once we control for SES, the estimate is 8.931. Again, this is because in our non-random assignment scenario, treatment status was confounded with SES.

Importantly, in the randomized experiment case, controlling for confounders using a multiple regression model is not necessary - again, because of the randomization. Let’s look at the same two models using the data from the experimental case (i.e. using Trt_rand and MATH_post_trt_rand ).

We can see that both models give estimates of the treatment effect that are roughly the same (10.044 and 9.891), regardless of whether or not we control for SES. This is because randomization ensured that the treatment and control group were balanced on all pre-treatment characteristics - including SES, so there is no need to control for them in a multiple regression model.
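To see why adjusting for a confounder matters in the observational case but not in the randomized one, here is a minimal simulation sketch in plain Python. Everything in it is an assumption made for illustration: the data-generating process, the true effect of 10, the helper names (`slope_intercept`, `residuals`, `adjusted`), and the use of the Frisch-Waugh-Lovell result to obtain the coefficient on T in a regression of y on T and Z without a matrix library. It does not reproduce the chapter's data or its estimates.

```python
import random
from statistics import mean

random.seed(1)

def slope_intercept(x, y):
    """Simple OLS of y on x; returns (intercept, slope)."""
    xb, yb = mean(x), mean(y)
    b1 = sum((a - xb) * (b - yb) for a, b in zip(x, y)) / sum(
        (a - xb) ** 2 for a in x
    )
    return yb - b1 * xb, b1

def residuals(x, y):
    """Residuals from the simple regression of y on x."""
    b0, b1 = slope_intercept(x, y)
    return [b - (b0 + b1 * a) for a, b in zip(x, y)]

def adjusted(T, Z, y):
    """Coefficient on T in y ~ T + Z, via Frisch-Waugh-Lovell:
    regress the part of y not explained by Z on the part of T
    not explained by Z."""
    return slope_intercept(residuals(Z, T), residuals(Z, y))[1]

n = 20000
ses = [random.gauss(0, 1) for _ in range(n)]  # hypothetical confounder Z

# Non-random assignment: lower-SES students are more likely to be treated.
T_non_rand = [1 if s + random.gauss(0, 1) < 0 else 0 for s in ses]
# Random assignment: a fair coin flip, unrelated to SES.
T_rand = [random.randint(0, 1) for _ in range(n)]

def outcome(T):
    # Assumed model: true treatment effect 10, SES effect 8, noise sd 5.
    return [50 + 10 * t + 8 * s + random.gauss(0, 5) for t, s in zip(T, ses)]

y_non_rand, y_rand = outcome(T_non_rand), outcome(T_rand)

naive_non_rand = slope_intercept(T_non_rand, y_non_rand)[1]
adj_non_rand = adjusted(T_non_rand, ses, y_non_rand)
naive_rand = slope_intercept(T_rand, y_rand)[1]
adj_rand = adjusted(T_rand, ses, y_rand)

print("non-random:", round(naive_non_rand, 2), round(adj_non_rand, 2))
print("random:    ", round(naive_rand, 2), round(adj_rand, 2))
```

In this sketch the unadjusted estimate under non-random assignment falls well below the assumed effect, while the adjusted estimate and both randomized estimates land near it. The adjustment works here only because the simulated outcome really is linear in T and SES and SES is the only confounder.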

7.6 What if you don’t know Z?

In the observational case, if you know the process through which people are assigned to or select treatment then the above multiple regression approach can get you pretty close to the causal effect of the treatment on the outcomes. This is what happened in our fit2_non_rand model above where we knew treatment was determined by SES, and so we controlled for it in our model.

But this is rarely the case. In most studies, selection of treatment is not based on a single variable . That is, before treatment occurs, those that will ultimately receive the treatment and those that do not might differ in a myriad of ways. For example, students that play instruments may not only come from families with more resources and have higher motivation, but may also play fewer sports, already be great readers, have a natural proclivity for music, or come from a musical family. As an analyst, it is typically very difficult – if not impossible – to know how and why some people selected a treatment and others did not.

Without randomization, here is the best approach:

- Remember: your goal is to approximate a random experiment. You want the two groups to be similar on any and all variables that are related to uptake of the treatment and the outcome.

- Think about the treatment selection process. Why would people choose to play an instrument (or not)? Attend an after-school program (or not)? Be part of a sorority or fraternity (or not)?

- Look for variables in your data that you can use in a multiple regression to control for these other possible confounders. Pay attention to how your estimate of the treatment impact changes as you add these into your model (often it will decrease).

- State very clearly the assumptions you are making, the variables you have controlled for, and the possible other variables you were unable to control for. Be tentative in your conclusions and make clear their limitations – that this work is suggestive and that future research – a randomized experiment – would be more definitive.

7.7 Conclusion

In this chapter we’ve focused on the role of randomization in our ability to make inferences – here about causation. As you will see in the next few chapters, randomization is also important for making inferences from outcomes observed in a sample to their values in a population. But the importance of randomization goes even deeper than this – one could say that randomization is at the core of inferential statistics .

In situations in which treatment is randomly assigned or a sample is randomly selected from a population, as a result of knowing this mechanism , we are able to imagine and explore alternative realities – what we will call counter-factual thinking (Chapter 9 ) – and form ways of understanding when “effects” are likely (or unlikely) to be found simply by chance – what we will call proof by stochastic contradiction (Chapter 11 ).

Finally, we would be remiss to end this chapter without including this XKCD comic, which every statistician loves:


Causal inference: basic concepts and randomized experiments.

Hyunseung Kang

April 3, 2024

Concepts Covered Today

- Association versus causation

- Defining causal quantities with counterfactual/potential outcomes

- Connection to missing data

- Identification of the average treatment effect in a completely randomized experiment

- Covariate balance

Does daily smoking cause a decrease in lung function?

Data: 2009-2010 National Health and Nutrition Examination Survey (NHANES) .

- Treatment ( \(A\) ): Daily smoker ( \(A = 1\) ) vs. never smoker ( \(A = 0\) )

- Outcome ( \(Y\) ): ratio of forced expiratory volume in one second over forced vital capacity. \(Y \geq\) 0.8 is good lung function!

- Sample size is \(n=\) 2360.

| Lung Function (Y) | Smoking Status (A) |
|---|---|
| 0.940 | Never |
| 0.918 | Never |
| 0.808 | Daily |
| 0.838 | Never |

Association of Smoking and Lung Function

- \(\overline{Y}_{\rm daily (A = 1) }=\) 0.75 and \(\overline{Y}_{\rm never (A = 0)}=\) 0.81.

- \(t\) -stat \(=\) -11.8, two-sided p value: \(\ll 10^{-16}\)

Daily smoking is strongly associated with a 0.06 reduction in lung function.

But, is the strong association evidence for causality ?

Definition of Association

Association : \(A\) is associated with \(Y\) if \(A\) is informative about \(Y\)

- If you smoke daily \((A = 1)\) , then it’s likely that your lungs aren’t functioning well ( \(Y\) ).

- If smoking status doesn’t provide any information about lung function, \(A\) is not associated with \(Y\) .

Formally, \(A\) is associated with \(Y\) if \(\mathbb{P}(Y | A) \neq \mathbb{P}(Y)\) .

Some parameters that measure association:

- Population difference in means: \(\mathbb{E}[Y | A=1] - \mathbb{E}[Y | A=0]\)

- Population covariance: \({\rm cov}(A,Y) = \mathbb{E}[ (A - \mathbb{E}[A])(Y - \mathbb{E}[Y])]\)
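For a binary \(A\), these two parameters are tightly linked. As a short worked derivation (writing \(p = \mathbb{P}(A=1)\), a symbol introduced here for convenience), the covariance is a rescaled difference in means:

\[\begin{align*} {\rm cov}(A,Y) &= \mathbb{E}[AY] - \mathbb{E}[A]\mathbb{E}[Y] \\ &= p\,\mathbb{E}[Y | A=1] - p\left\{p\,\mathbb{E}[Y | A=1] + (1-p)\,\mathbb{E}[Y | A=0]\right\} \\ &= p(1-p)\left\{\mathbb{E}[Y | A=1] - \mathbb{E}[Y | A=0]\right\} \end{align*}\]

So when \(0 < p < 1\), the covariance is zero exactly when the difference in means is zero.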

Estimators/tests that measure association:

- Sample difference in means, regression, etc.

- Two-sample t-tests, Wilcoxon rank-sum test, etc.

Defining Causation: Parallel Universe Analogy

Suppose John’s lung functions are different between the two universes.

- The difference in lung functions can only be attributed to the difference in smoking status.

- Why? All variables (except smoking status) are the same between the two parallel universes.

Key Point : comparing outcomes between parallel universes enables us to say that any difference in the outcome must be due to a difference in the treatment status.

This provides a basis for defining a causal effect of \(A\) on \(Y\) .

Counterfactual/Potential Outcomes

Notation for outcomes in parallel universes:

- \(Y(1)\) : counterfactual/potential lung function if you smoked (i.e. parallel world where you smoked)

- \(Y(0)\) : counterfactual/potential lung function if you didn’t smoke (i.e. parallel world where you didn’t smoke)

Similar to the observed data table, we can create a counterfactual/potential outcomes data table.

| | \(Y(1)\) | \(Y(0)\) |
|---|---|---|
| John | 0.5 | 0.9 |
| Sally | 0.8 | 0.8 |
| Kate | 0.9 | 0.6 |
| Jason | 0.6 | 0.9 |

For pedagogy, we’ll assume that all data tables are an i.i.d. sample from some population (i.e. \(Y_i(1), Y_i(0) \overset{\text{i.i.d.}}{\sim} \mathbb{P}\{Y(1),Y(0)\}\) ).

Similar to the observed data \((Y,A)\) , you can think of the counterfactual data table as an i.i.d. sample from some population distribution of \(Y(1),Y(0)\) (i.e. \(Y_i(1), Y_i(0) \overset{\text{i.i.d.}}{\sim} \mathbb{P}\{Y(1),Y(0)\}\) ).

- This is often referred to as the super-population framework.

- Expectations are defined with respect to the population distribution (i.e. \(\mathbb{E}[Y(1)] = \int y \mathbb{P}(Y(1) = y)dy\) )

- The population distribution is fixed and the sampling generates the source of randomness (i.e. i.i.d. draws from \(\mathbb{P}\{Y(1),Y(0)\}\) , perhaps \(\mathbb{P}\{Y(1),Y(0)\}\) is jointly Normal?)

- For asymptotic analysis, \(\mathbb{P}\{Y(1),Y(0)\}\) is usually fixed (i.e. \(\mathbb{P}\{Y(1),Y(0)\}\) does not vary with sample size \(n\) ). In high dimensional regimes, \(\mathbb{P}\{Y(1),Y(0)\}\) will vary with \(n\) .

Or, you can think of \(n=4\) as the entire population.

- This is often referred to as the finite population / randomization inference or design-based framework.

- Expectations are defined with respect to the table above (i.e. \(\mathbb{E}[Y(1)] = (0.5+0.8+0.9+0.6)/4 =0.7\) )

- The counterfactual data table is the population and the treatment assignment (i.e. which counterfactual universe you get to see; see below) generates the randomness and the observed sample.

- For asymptotic analysis, both the population (i.e. the counterfactual data table) and the sample change with \(n\) . In some vague sense, asymptotic analysis under the finite population framework is inherently high dimensional.

Finally, you can think of the data above as a simple random sample of size \(n\) from a finite population of size \(N\) , where \(0 < n < N < \infty\) .

The latter two frameworks are uncommon in typical statistics courses, especially the second one. However, it’s very popular among some circles of causal inference folks (e.g. Rubin, Rosenbaum and their students). The appendix of Erich Leo Lehmann ( 2006 ) , Rosenbaum ( 2002b ) , and Li and Ding ( 2017 ) provide a list of technical tools to conduct this type of inference.

There has been a long debate about which is the “right” framework for inference. My understanding is that it’s now (i.e. Apr. 2024) a matter of personal taste. Also, as Paul Rosenbaum puts it:

In most cases, their disagreement is entirely without technical consequence: the same procedures are used, and the same conclusions are reached…Whatever Fisher and Neyman may have thought, in Lehmann’s text they work together. (Page 40, Rosenbaum ( 2002b ) )

The textbook that Paul is referring to is (now) Erich L. Lehmann and Romano ( 2006 ) . Note that this quote touches on another debate in the literature on finite-sample inference, namely which null hypothesis is the correct one to test. In general, it’s good to be aware of the differences between the frameworks and, as Lehmann did (see full quote), use the strengths of each framework. For some interesting discussions on this topic, see Robins ( 2002 ) , Rosenbaum ( 2002a ) , Chapter 2.4.5 of Rosenbaum ( 2002b ) , and Abadie et al. ( 2020 ) . For other papers in this area, see Splawa-Neyman, Dabrowska, and Speed ( 1990 ) , Freedman and Lane ( 1983 ) , Freedman ( 2008 ) , and Lin ( 2013 ) .

Causal Estimands

Some quantities/parameters from the counterfactual outcomes:

- \(Y_{\rm John}(1) - Y_{\rm John}(0) = -0.4\) : Causal effect of John smoking versus not smoking (i.e. individual treatment effect )

- \(\mathbb{E}[Y(1)]\) : Average of counterfactual outcomes when everyone is a daily smoker.

- \(\mathbb{E}[Y(1) - Y(0)]\) : Difference in the average counterfactual outcomes when everyone is smoking versus when everyone is not smoking (i.e. average treatment effect, ATE )

A causal estimand/parameter is a function of the counterfactual outcomes.
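As a small worked example, the estimands above can be computed directly from the four-person counterfactual table, treating those four rows as the entire population (the finite population view). The variable names are illustrative.

```python
from statistics import mean

# Counterfactual table from the notes: name -> (Y(1), Y(0)).
potential = {
    "John": (0.5, 0.9),
    "Sally": (0.8, 0.8),
    "Kate": (0.9, 0.6),
    "Jason": (0.6, 0.9),
}

# Individual treatment effects Y_i(1) - Y_i(0).
ite = {name: y1 - y0 for name, (y1, y0) in potential.items()}

# Averages of each counterfactual column and their difference (the ATE).
mean_y1 = mean(y1 for y1, _ in potential.values())
mean_y0 = mean(y0 for _, y0 in potential.values())
ate = mean_y1 - mean_y0

print({name: round(v, 2) for name, v in ite.items()})
print(round(mean_y1, 2), round(mean_y0, 2), round(ate, 2))
```

Every quantity here is a function of the counterfactual columns only, which is what makes each one a causal estimand. None of them can be computed this way from real data, where only one column is observed per person.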

Counterfactual Data Versus Observed Data

Table 1: Comparison of tables.

| | \(Y(1)\) | \(Y(0)\) |
|---|---|---|
| John | 0.5 | 0.9 |
| Sally | 0.8 | 0.8 |
| Kate | 0.9 | 0.6 |
| Jason | 0.6 | 0.9 |

| | \(Y\) | \(A\) |
|---|---|---|
| John | 0.9 | 0 |
| Sally | 0.8 | 1 |
| Kate | 0.6 | 0 |
| Jason | 0.6 | 1 |

For both, we can define parameters (i.e. \(\mathbb{E}[Y]\) or \(\mathbb{E}[Y(1)]\) ) and take i.i.d. samples from their respective populations to learn them.

- \(Y_i(1), Y_i(0) \overset{\text{i.i.d.}}{\sim} \mathbb{P}\{Y(1), Y(0)\}\) and \(\mathbb{P}\) is Uniform, etc.

If we can observe the counterfactual table, we can run our favorite statistical methods and estimate/test causal estimands.

The Main Problem of Causal Inference

If we can observe all counterfactual outcomes, causal inference reduces to doing usual statistical analysis with \(Y(0),Y(1)\) .

But, in many cases, we don’t get to observe all counterfactual outcomes.

A key goal in causal inference is to learn about the counterfactual outcomes \(Y(1), Y(0)\) from the observed data \((Y,A)\) .

- How do we learn about causal parameters (e.g. \(\mathbb{E}[Y(1)]\) ) from the observed data \((Y,A)\) ?

- What causal parameters are impossible to learn from the observed data?

Addressing this type of question is referred to as causal identification .

Causal Identification: SUTVA or Causal Consistency

First, let’s make the following assumption, known as the stable unit treatment value assumption (SUTVA) or causal consistency ( Rubin ( 1980 ) , page 4 of Hernán and Robins ( 2020 ) ).

\[Y = AY(1) + (1-A) Y(0)\]

Equivalently,

\[Y = Y(A) \text{ or if } A=a, Y = Y(a)\]

The assumption states that the observed outcome is one realization of the counterfactual outcomes.

- It also states that there are no multiple versions of treatment.

- It also states that there is no interference , a term coined by Cox ( 1958 ) .
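A minimal sketch of the consistency equation \(Y = AY(1) + (1-A)Y(0)\) in action: applying it to the counterfactual table with the treatment column from Table 1 reproduces the observed outcome column. The function name `observe` is an illustrative choice.

```python
def observe(y1, y0, a):
    """Observed outcome under consistency: Y = A*Y(1) + (1-A)*Y(0)."""
    return a * y1 + (1 - a) * y0

# Counterfactual table from the notes: name -> (Y(1), Y(0)).
potential = {
    "John": (0.5, 0.9),
    "Sally": (0.8, 0.8),
    "Kate": (0.9, 0.6),
    "Jason": (0.6, 0.9),
}
# Treatment column A from the observed table.
assignment = {"John": 0, "Sally": 1, "Kate": 0, "Jason": 1}

observed = {
    name: observe(y1, y0, assignment[name]) for name, (y1, y0) in potential.items()
}
print(observed)
```

Each person contributes only the potential outcome selected by their own \(A\); the other one never enters the observed data.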

No Multiple Versions of Treatment

Daily smoking (i.e. \(A=1\) ) can include different types of smokers:

- Daily smoker who smokes one pack of cigarettes per day

- Daily smoker who smokes one cigarette per day

- Daily smoker who vapes every day

The current \(Y(1)\) does not distinguish outcomes between different types of smokers.

We can define counterfactual outcomes for all kinds of daily smokers, say \(Y(k)\) for \(k=1,\ldots,K\) types of daily smokers. But, if \(A=1\) , which counterfactual outcome should this correspond to?

SUTVA eliminates these variations in the counterfactuals. Or, if \(Y(k)\) exists, it assumes that these variations are all equal, i.e. \(Y(1) = Y(2) = \ldots =Y(K)\) .

Implicitly, SUTVA forces you to define meaningful \(Y(a)\) . Some authors restrict counterfactual outcomes to be based on well-defined interventions or “no causation without manipulation” ( Holland ( 1986 ) , Hernán and Taubman ( 2008 ) , Cole and Frangakis ( 2009 ) , VanderWeele ( 2009 ) ).

A healthy majority of people in causal inference argue that the counterfactual outcomes of race and gender are ill-defined. For example, suppose we’re interested in whether being female causes lower income. We could define the counterfactual outcomes as

- \(Y(1)\) : Jamie’s income when Jamie is female

- \(Y(0)\) : Jamie’s income when Jamie is not female

Similarly, if we are interested in whether being a black person causes lower income, we could define the counterfactual outcomes as

- \(Y(1)\) : Jamie’s income when Jamie is black

- \(Y(0)\) : Jamie’s income when Jamie is not black

But, if Jamie is a female, can there be a parallel universe where Jamie is a male? That is, is there a universe where everything else is the same (i.e. Jamie’s whole life experience up to 2024, education, environment, maybe Jamie gave birth to kids), but Jamie is now a male instead of a female?

Note that we can still measure the association between gender and income, for instance with a linear regression of income (i.e. \(Y\) ) on gender (i.e. \(A\) ). This is a well-defined quantity.

There is an interesting set of papers on this topic: VanderWeele and Robinson ( 2014 ) , Vandenbroucke, Broadbent, and Pearce ( 2016 ) , Krieger and Davey Smith ( 2016 ) , VanderWeele ( 2016 ) . See Volume 45, Issue 6 (2016) of the International Journal of Epidemiology.

Some even take this example further and question whether counterfactual outcomes are well-defined in the first place; see Dawid ( 2000 ) and a counterpoint in Sections 1.1, 2 and 3 of Robins and Greenland ( 2000 ) .

No Interference

Suppose we want to study the causal effect of getting the measles vaccine on getting the measles. Let’s define the following counterfactual outcomes:

- \(Y(0)\) : Jamie’s counterfactual measles status when Jamie is not vaccinated

- \(Y(1)\) : Jamie’s counterfactual measles status when Jamie is vaccinated

Suppose Jamie has a sibling Alex and let’s entertain the possible values of Jamie’s \(Y(0)\) based on Alex’s vaccination status.

- Jamie’s counterfactual measles status when Alex is vaccinated.

- Jamie’s counterfactual measles status when Alex is not vaccinated.

The current \(Y(0)\) does not distinguish between the two counterfactual outcomes.

We can again define counterfactual outcomes to incorporate this scenario, say \(Y(a,b)\) where \(a\) refers to Jamie’s vaccination status and \(b\) refers to Alex’s vaccination status.

SUTVA states that Jamie’s outcome only depends on Jamie’s vaccination status, not Alex’s vaccination status. Or, more precisely \(Y(a,b) = Y(a,b')\) for all \(a,b,b'\) .

In some studies, the no interference assumption is not plausible (e.g. vaccine studies, peer effects in classrooms/neighborhoods, air pollution). Rosenbaum ( 2007 ) has a nice set of examples of when the no interference assumption is not plausible.

There is a lot of ongoing work on this topic ( Rosenbaum ( 2007 ) , Hudgens and Halloran ( 2008 ) , Tchetgen and VanderWeele ( 2012 ) ). I am interested in this area as well; let me know if you want to learn more.

Causal Identification and Missing Data

Once we assume SUTVA (i.e. \(Y= AY(1) + (1-A)Y(0)\) ), causal identification can be seen as a problem in missing data.

| | \(Y(1)\) | \(Y(0)\) | \(Y\) | \(A\) |
|---|---|---|---|---|
| John | NA | 0.9 | 0.9 | 0 |
| Sally | 0.8 | NA | 0.8 | 1 |
| Kate | NA | 0.6 | 0.6 | 0 |
| Jason | 0.6 | NA | 0.6 | 1 |

Under SUTVA, we only see one of the two counterfactual outcomes based on \(A\) .

- \(A\) serves as the “missingness” indicator where \(A=1\) implies \(Y(1)\) is observed and \(A=0\) implies \(Y(0)\) is observed.

- \(Y\) is the “observed” value.

- Being able to only observe one counterfactual outcome in the observed data is known as the “fundamental problem of causal inference” (page 476 of Holland ( 1988 ) ).

Assumption on Missingness Pattern

| | \(Y(1)\) | \(Y(0)\) | \(Y\) | \(A\) |
|---|---|---|---|---|
| John | NA | 0.9 | 0.9 | 0 |
| Sally | 0.8 | NA | 0.8 | 1 |
| Kate | NA | 0.6 | 0.6 | 0 |
| Jason | 0.6 | NA | 0.6 | 1 |

Suppose we are interested in learning the causal estimand \(\mathbb{E}[Y(1)]\) (i.e. the mean of the first column).

One approach would be to take the average of the “complete cases” (i.e. Sally’s 0.8 and Jason’s 0.6).

- Formally, we would use \(\mathbb{E}[Y | A=1]\) , the mean of the observed outcome \(Y\) among \(A=1\) .

- This approach is valid if the entries of the first column are missing completely at random (MCAR)

- In other words, the missingness indicator \(A\) flips a random coin for each individual and decides whether its \(Y(1)\) is missing or not.

This is essentially akin to a randomized experiment.

Formal Statement of MCAR

Formally, MCAR can be stated as \[A \perp Y(1) \text{ and } 0 < \mathbb{P}(A=1)\]

- Missingness occurs completely at random in the rows of the first column, say by a flip of a random coin.

- Missingness doesn’t occur more frequently for higher values of \(Y(1)\) ; this would violate \(A \perp Y(1)\) .

- If \(\mathbb{P}(A=1) =0\) , then all entries of the column \(Y(1)\) are missing and we can’t learn anything about its column mean.
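To make the role of \(A \perp Y(1)\) concrete, here is a small simulation sketch contrasting the complete-case mean under MCAR with the complete-case mean when missingness depends on \(Y(1)\). The population of \(Y(1)\) values (Normal with mean 0.7 and standard deviation 0.1), the observation probabilities, and all names are assumptions chosen only for illustration.

```python
import random
from statistics import mean

random.seed(2)

# Hypothetical population of Y(1) values (assumed Normal; not NHANES data).
n = 20000
y1 = [random.gauss(0.7, 0.1) for _ in range(n)]
true_mean = mean(y1)

# MCAR: a fair coin decides whether Y(1) is observed (A = 1), ignoring its value.
a_mcar = [1 if random.random() < 0.5 else 0 for _ in range(n)]

# Not MCAR: higher values of Y(1) are missing more often
# (observed with probability 0.2 instead of 0.8), violating A independent of Y(1).
a_dep = [1 if random.random() < (0.8 if v < 0.7 else 0.2) else 0 for v in y1]

def complete_case_mean(values, a):
    """Average of Y(1) over the rows where it is observed (A = 1)."""
    return mean(v for v, ai in zip(values, a) if ai == 1)

cc_mcar = complete_case_mean(y1, a_mcar)
cc_dep = complete_case_mean(y1, a_dep)

print(round(true_mean, 3), round(cc_mcar, 3), round(cc_dep, 3))
```

Under the coin-flip mechanism the complete-case mean tracks the full-column mean closely, whereas the value-dependent mechanism pulls it downward because large values of \(Y(1)\) are under-represented among the observed rows.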

Formal Proof of Causal Identification of \(\mathbb{E}[Y(1)]\)

Suppose SUTVA and MCAR hold:

- (A1): \(Y = A Y(1) + (1-A) Y(0)\)

- (A2): \(A \perp Y(1)\)

- (A3): \(0 < \mathbb{P}(A=1)\)

Then, we can identify the causal estimand \(\mathbb{E}[Y(1)]\) by writing it as the following function of the observed data \(\mathbb{E}[Y | A=1]\) : \[\begin{align*} \mathbb{E}[Y | A=1] &= \mathbb{E}[AY(1) + (1-A)Y(0) | A=1] && \text{(A1)} \\ &= \mathbb{E}[Y(1)|A=1] && \text{Definition of conditional expectation} \\ &= \mathbb{E}[Y(1)] && \text{(A2)} \end{align*}\] (A3) is used to ensure that \(\mathbb{E}[Y | A=1]\) is a well-defined quantity.

Technically speaking, to establish \(\mathbb{E}[Y(1)] = \mathbb{E}[Y | A=1]\) , we only need \(\mathbb{E}[Y(1) | A=1] = \mathbb{E}[Y(1)]\) and \(0 < \mathbb{P}(A=1)\) instead of \(A \perp Y(1)\) and \(0 < \mathbb{P}(A=1)\) ; note that \(A \perp Y(1)\) is equivalent to \(\mathbb{P}(Y(1) | A=1) = \mathbb{P}(Y(1))\) . In words, we only need \(A\) to be unrelated to \(Y(1)\) in expectation , not necessarily in the entire distribution.

Causal Identification of the ATE

In a similar vein, to identify the ATE \(\mathbb{E}[Y(1)-Y(0)]\) , a natural approach would be to use \(\mathbb{E}[Y | A=1] - \mathbb{E}[Y | A=0]\) .

This approach would be valid under the following variation of the MCAR assumption: \[A \perp Y(0),Y(1), \quad{} 0 < \mathbb{P}(A=1) < 1\]

- The first part states that the treatment \(A\) is independent of \(Y(1), Y(0)\) . This is called exchangeability or ignorability in causal inference.

- \(0 < \mathbb{P}(A=1) <1\) states that there is a non-zero probability of observing some entries from the column \(Y(1)\) and from the column \(Y(0)\) . This is called positivity or overlap in causal inference.

Note that \(A \perp Y(1)\) (i.e. missingness indicator \(A\) for the \(Y(1)\) column is completely random) is not equivalent to \(A \perp Y\) (i.e. \(A\) is not associated with \(Y\) ), with or without SUTVA.

- Without SUTVA, \(Y\) and \(Y(1)\) are completely different variables and thus, the two statements are generally not equivalent to each other. In other words, \(A \perp Y(1)\) makes an assumption about the counterfactual outcome whereas \(A \perp Y\) makes an assumption about the observed outcome.

- With SUTVA, \(Y = Y(1)\) only if \(A =1\) and thus, \(A \perp Y(1)\) does not necessarily imply that \(Y = AY(1) + (1-A)Y(0)\) is independent of \(A\) . To put it differently, \(A \perp Y(1)\) only tells us about the lack of relationship between the column of \(Y(1)\) and the column of \(A\) . In contrast, \(A \perp Y\) tells us about the lack of relationship between the column \(Y\) , which is a mix of \(Y(1)\) and \(Y(0)\) under SUTVA, and the column of \(A\) .

Formal Proof of Causal Identification of the ATE

Suppose SUTVA, exchangeability, and positivity hold:

- (A1): \(Y = A Y(1) + (1-A) Y(0)\)

- (A2): \(A \perp Y(1), Y(0)\)

- (A3): \(0 < \mathbb{P}(A=1) < 1\)

Then, we can identify the ATE from the observed data via: \[\begin{align*} &\mathbb{E}[Y|A=1] - \mathbb{E}[Y | A=0] \\ =& \mathbb{E}[AY(1) + (1-A)Y(0) | A=1] \\ & \quad{} - \mathbb{E}[AY(1) + (1-A)Y(0) | A=0] && \text{(A1)} \\ =& \mathbb{E}[Y(1)|A=1] - \mathbb{E}[Y(0) | A=0] && \text{Definition of conditional expectation} \\ =& \mathbb{E}[Y(1)] - \mathbb{E}[Y(0)] && \text{(A2)} \end{align*}\]

(A3) ensures that the conditioning events in \(\mathbb{E}[\cdot |A=0]\) and \(\mathbb{E}[\cdot |A=1]\) are well-defined.

Suppose there is no association between \(A\) and \(Y\) , i.e., \(A \perp Y\) , and suppose (A2) and (A3) hold. Then, \(\mathbb{E}[Y |A=1] = \mathbb{E}[Y|A=0] = \mathbb{E}[Y]\) . If we further assume SUTVA (A1), this implies that the average treatment effect (ATE) is zero, i.e. \(\mathbb{E}[Y(1)] - \mathbb{E}[Y(0)] = 0\) .

Notice that SUTVA is required to claim that the ATE is zero if there is no association between \(A\) and \(Y\) . In general, without SUTVA, we can’t make any claims about \(Y(1)\) and \(Y(0)\) from any analysis done with the observed data \(Y,A\) since SUTVA links the counterfactual outcomes to the observed data.

Why Randomized Experiments Identify Causal Effects

Consider an ideal, completely randomized experiment (RCT):

1. Treatment & control are well-defined (e.g. take new drug or placebo)

2. Counterfactual outcomes do not depend on others’ treatment (e.g. taking the drug/placebo only impacts my own outcome)

3. Assignment to treatment or control is completely randomized

4. There is a non-zero probability of receiving treatment and control (e.g. some get drug while others get placebo)

Assumptions (A1)-(A3) are satisfied because

- From 1 and 2, SUTVA holds.

- From 3, treatment assignment \(A\) is completely random, i.e. \(A \perp Y(1), Y(0)\)

- From 4, \(0 < P(A=1) <1\)

This is why RCTs are considered the gold standard for identifying causal effects as all assumptions for causal identification are satisfied by the experimental design.
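One way to see the identification result at work is to enumerate a tiny randomized experiment on the four-person counterfactual table. The sketch below assumes a complete randomization that treats exactly 2 of the 4 people, with all 6 such assignments equally likely (a design choice made here for illustration; the notes do not specify one for this table), and takes the finite population view in which the table is the whole population.

```python
from itertools import combinations
from statistics import mean

# Counterfactual table from the notes.
y1 = {"John": 0.5, "Sally": 0.8, "Kate": 0.9, "Jason": 0.6}
y0 = {"John": 0.9, "Sally": 0.8, "Kate": 0.6, "Jason": 0.9}
names = list(y1)
true_ate = mean(list(y1.values())) - mean(list(y0.values()))

# Enumerate every way to treat exactly 2 of the 4 people. For each assignment,
# SUTVA reveals Y(1) for the treated and Y(0) for the controls, and we compute
# the observed difference in group means.
estimates = []
for treated in combinations(names, 2):
    control = [name for name in names if name not in treated]
    diff = mean(y1[name] for name in treated) - mean(y0[name] for name in control)
    estimates.append(diff)

print([round(e, 2) for e in estimates])
print(round(mean(estimates), 2), round(true_ate, 2))
```

Any single assignment can miss the ATE, but averaging the difference in means over the randomization distribution recovers it exactly in this example.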

RCTs with Covariates

In addition to \(Y\) and \(A\) , we often collect pre-treatment covariates \(X\) .

| | \(Y(1)\) | \(Y(0)\) | \(Y\) | \(A\) | \(X\) (Age) |
|---|---|---|---|---|---|
| John | NA | 0.9 | 0.9 | 0 | 38 |
| Sally | 0.8 | NA | 0.8 | 1 | 30 |
| Kate | NA | 0.6 | 0.6 | 0 | 23 |
| Jason | 0.6 | NA | 0.6 | 1 | 26 |

If the treatment \(A\) is completely randomized (as in an RCT), we would also have \(A \perp X\) .

Note that we can then combine this into the existing (A2) as (A2): \[A \perp Y(1), Y(0), X\] Other assumptions, (A1) and (A3), remain the same.

Causal Identification of The ATE with Covariates

Even with the change in (A2), the proof to identify the ATE in an RCT remains the same as before.

- (A2): \(A \perp Y(1), Y(0),X\)

Then, we can identify the ATE from the observed data via:

\[ \mathbb{E}[Y(1)] - \mathbb{E}[Y(0)] = \mathbb{E}[Y|A=1] - \mathbb{E}[Y | A=0] \]

However, we can also identify the ATE via

\[ \mathbb{E}[Y(1)] - \mathbb{E}[Y(0)] = \mathbb{E}[\mathbb{E}[Y | X,A=1]|A=1] - \mathbb{E}[\mathbb{E}[Y | X,A=0]|A=0] \]

The new equality simply uses the law of total expectation, i.e. \(\mathbb{E}[Y|A=1] = \mathbb{E}[\mathbb{E}[Y|X,A=1]|A=1]\) . However, this new equality requires modeling \(\mathbb{E}[Y | X,A=a]\) correctly. We’ll discuss more about this in later lectures.

Covariate Balance

An important, conceptual implication of complete randomization of the treatment (i.e. \(A \perp X\) ) is that \[\mathbb{P}(X |A=1) = \mathbb{P}(X | A=0)\] This concept is known as covariate balance, where the distribution of covariates is balanced between treated units and control units.

Often in RCTs (and non-RCTs), we check for covariate balance by comparing the means of \(X\) s among treated and control units (e.g. two-sample t-test of the mean of \(X\) ). This is to ensure that randomization was actually carried out properly.

In Chapter 9.1 of Rosenbaum ( 2020 ) , Rosenbaum recommends using the pooled variance when computing the difference in means of a covariate between the treated group and the control group. Specifically, let \({\rm SD}(X)_{A=1}\) be the standard deviation of the covariate in the treated group and \({\rm SD}(X)_{A=0}\) be the standard deviation of the covariate in the control group. Then, Rosenbaum suggests the statistic

\[ \text{Standardized difference in means} = \frac{\bar{X}_{A=1}-\bar{X}_{A=0}}{\sqrt{ ({\rm SD}(X)_{A=1}^2 + {\rm SD}(X)_{A=0}^2)/2}} \]
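The statistic above is easy to compute by hand. Below is a short sketch applied to the Age column of the four-person table; the function name is illustrative, and the use of the sample standard deviation (with an \(n-1\) denominator, as in Python's `statistics.variance`) is an assumption, since the formula above does not say which version of the standard deviation is meant.

```python
from math import sqrt
from statistics import mean, variance

def standardized_difference(x_treated, x_control):
    """Difference in covariate means divided by the pooled standard deviation,
    sqrt((SD_treated^2 + SD_control^2) / 2). Uses sample variances (n - 1)."""
    pooled_sd = sqrt((variance(x_treated) + variance(x_control)) / 2)
    return (mean(x_treated) - mean(x_control)) / pooled_sd

# Ages from the table: treated (A = 1) are Sally and Jason,
# controls (A = 0) are John and Kate.
age_treated = [30, 26]
age_control = [38, 23]

smd_age = standardized_difference(age_treated, age_control)
print(round(smd_age, 3))
```

With only two people per group this number is far too noisy to say anything about balance; the point is only to show the arithmetic.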

RCT Balances Measured and Unmeasured Covariates

Critically, the above equality would hold even if some characteristics of the person are unmeasured (e.g. everyone’s precise health status).

- Formally, let \(U\) be unmeasured variables and \(X\) be measured variables.

- Because \(A\) is completely randomized in an RCT, we have \(A \perp X, U\) and \[\mathbb{P}(X,U |A=1) = \mathbb{P}(X,U | A=0)\]

Complete randomization ensures that the distributions of both measured and unmeasured characteristics of individuals are the same between the treated and control groups.
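A quick simulation sketch of this point: a variable \(U\) that the analyst never sees is still balanced across groups when treatment is assigned by a coin flip, but not when people select into treatment based on \(U\). The distribution of \(U\), the selection rule, and the names below are all hypothetical.

```python
import random
from statistics import mean

random.seed(3)

n = 20000
u = [random.gauss(0, 1) for _ in range(n)]  # unmeasured characteristic U

# Randomized assignment: a fair coin flip, unrelated to U.
a_rand = [random.randint(0, 1) for _ in range(n)]
# Self-selection: people with higher U are more likely to take the treatment.
a_self = [1 if ui + random.gauss(0, 1) > 0 else 0 for ui in u]

def mean_gap(values, a):
    """Mean of U among the treated minus mean of U among the controls."""
    treated = mean(v for v, ai in zip(values, a) if ai == 1)
    control = mean(v for v, ai in zip(values, a) if ai == 0)
    return treated - control

gap_rand = mean_gap(u, a_rand)
gap_self = mean_gap(u, a_self)

print(round(gap_rand, 3), round(gap_self, 3))
```

The randomized gap is close to zero even though \(U\) played no role in the design, which is the sense in which randomization balances what we cannot measure.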

Randomization Creates Comparable Groups

Roughly speaking, complete randomization creates two synthetic, parallel universes where, on average, the characteristics between universe \(A=0\) and universe \(A=1\) are identical.

Thus, in an RCT, any difference in \(Y\) can only be attributed to a difference in the group label (i.e. \(A\) ) since all measured and unmeasured characteristics between the two universes are distributionally identical.

This was essentially the “big” idea from Fisher in 1935, where he used randomization as the “reasoned basis” for causal inference from RCTs. Paul Rosenbaum explains this more beautifully than I do in Chapter 2.3 of Rosenbaum ( 2020 ) .

Note About Pre-treatment Covariates

We briefly mentioned that covariates \(X\) must precede treatment assignment, i.e.

- We collect \(X\) (i.e. baseline covariates)

- We assign treatment/control \(A\)

- We observe outcome \(Y\)

If they are post-treatment covariates, then the treatment can have a causal effect on both the outcome \(Y\) and the covariates \(X\) .

In this case, it’s unclear whether \(A\) has a causal effect on \(Y\) because of its causal effect on \(X\) . Studying this type of question is called causal mediation analysis.

In general, we don’t want to condition on post-treatment covariates \(X\) when the goal is to estimate the average treatment effect of \(A\) on \(Y\) .

- Analytic Perspective

- Open access

- Published: 17 June 2009

The role of causal criteria in causal inferences: Bradford Hill's "aspects of association"

- Andrew C Ward

Epidemiologic Perspectives & Innovations volume 6, Article number: 2 (2009)


As noted by Wesley Salmon and many others, causal concepts are ubiquitous in every branch of theoretical science, in the practical disciplines and in everyday life. In the theoretical and practical sciences especially, people often base claims about causal relations on applications of statistical methods to data. However, the source and type of data place important constraints on the choice of statistical methods as well as on the warrant attributed to the causal claims based on the use of such methods. For example, much of the data used by people interested in making causal claims come from non-experimental, observational studies in which random allocations to treatment and control groups are not present. Thus, one of the most important problems in the social and health sciences concerns making justified causal inferences using non-experimental, observational data. In this paper, I examine one method of justifying such inferences that is especially widespread in epidemiology and the health sciences generally – the use of causal criteria. I argue that while the use of causal criteria is not appropriate for either deductive or inductive inferences, they do have an important role to play in inferences to the best explanation. As such, causal criteria, exemplified by what Bradford Hill referred to as "aspects of [statistical] associations", have an indispensable part to play in the goal of making justified causal claims.

Introduction

As noted by Salmon [ 1 ] and others [ 2 , 3 ], causal concepts are ubiquitous in every branch of theoretical science, in the practical disciplines and in everyday life. In the case of the social sciences, Marini and Singer write that "the identification of genuine causes is accorded a high priority because it is viewed as the basis for understanding social phenomena and building an explanatory science" [ 4 ]. Although health services research is not so interested in "building an explanatory science", it too, like the social sciences with which it often overlaps, sets a premium on identifying genuine causes [ 5 ]. Establishing "an argument of causation is an important research activity," write van Reekum et al., "because it influences the delivery of good medical care" [ 6 ]. Moreover, given the keen public and political attention given recently to issues of health care insurance and health care delivery, a "key question" for federal, state and local policy makers that falls squarely within the province of health services research is how much an effect different kinds of health insurance interventions have on people's health, "and at what cost" [ 7 ].

This focus on causality and causal concepts is also pervasive in epidemiology [ 8 – 14 ], with Morabia suggesting that a name "more closely reflecting" the subject matter of epidemiology is "'population health etiology', etiology meaning 'science of causation"' [ 15 ]. For example, Swaen and Amelsvoort write that one "of the main objectives of epidemiological research is to identify causes of diseases" [ 16 ], while Botti, et al. write that a "central issue in environmental epidemiology is the evaluation of the causal nature of reported associations between exposure to defined environmental agents and the occurrence of disease. [ 17 ]" Gori writes that epidemiologists "have long pressed the claim that their study belongs to the natural sciences ... [and seek] to develop theoretical models and to identify experimentally the causal relationships that may confirm, extend, or negate such models" [ 18 ], and Oswald even goes so far as to claim that epidemiologists are "obsessed with cause and effect. [ 19 ]" Of course, it is true that some writers [ 20 ] are a bit more cautious when describing how considerations of causality fit into the goals of epidemiology. Weed writes that the "purpose of epidemiology is not to prove cause-effect relationships ... [but rather] to acquire knowledge about the determinants and distributions of disease and to apply that knowledge to improve public health. [ 21 ]" Even here, though, what seems implicit is that establishing cause-and-effect relationships is still the ideal goal of epidemiology, and as Weed himself writes in a later publication, finding "a cause, removing it, and reducing the incidence and mortality of subsequent disease in populations are hallmarks of public health and practice" [ 22 ].

Often people base claims about the existence and strength of causal relations on applications of statistical methods to data. However, the source and type of data place important constraints on the choice of statistical methods as well as on the warrant attributed to the causal claims based on the use of such methods [ 23 ]. In this context, Urbach writes that an "ever-present danger in ... investigations is attributing the outcome of an experiment to the treatment one is interested in when, in reality, it was caused by some extraneous variation in the experimental conditions" [ 24 ]. Expressed in a counterfactual framework, the danger is that while the causal contrast we want to measure is that between a target population under one exposure and, counterfactually, that same population under a different exposure, the observable substitute we use for the target population under the counterfactual condition may be an imperfect substitute [ 25 , 26 ]. When the observable substitute is an imperfect substitute for the target population under the counterfactual condition, the result is confounding, and the measure of the causal contrast is confounded. In order to address this "ever-present danger", many users of statistical methods, especially those of the Neyman-Pearson or Fisher type [ 27 , 28 ], claim that randomization is necessary.

Ideally, what randomization (random allocation to treatment and control or comparison groups) does is two-fold. First, following Greenland, the average of many hypothetical repetitions of a randomized controlled trial (RCT) will make "our estimate of the true risk difference statistically unbiased, in that the statistical expectation (average) of the estimate over the possible results equals the true value" [ 29 ]. In other words, randomization addresses the problem of statistical bias. However, as pointed out by Greenland [ 29 ], without some additional qualification, an ideally performed RCT does not "prevent the epidemiologic bias known as confounding" [ 29 ]. To reduce the probability of confounding, idealized random allocation must be used to create sufficiently large comparison groups. As Greenland notes, by using "randomization, one can make the probability of severe confounding as small as one likes by increasing the size of the treatment cohorts" [ 29 ]. For example, using the example in Greenland, Robins and Pearl, suppose that "our objective is to determine the effect of applying a treatment or exposure \(x_1\) on a parameter \(\mu\) of the distribution of the outcome y in population A, relative to applying treatment or exposure \(x_0\)" [ 30 ]. Further, let us suppose that "\(\mu\) will equal \(\mu_{A1}\) if \(x_1\) is applied to population A, and will equal \(\mu_{A0}\) if \(x_0\) is applied to that population" [ 30 ]. In this case, we can measure the causal effect of \(x_1\) relative to \(x_0\) by \(\mu_{A1} - \mu_{A0}\). However, we cannot apply both \(x_1\) and \(x_0\) to the same population. Thus, if A is the target population, what we need is some population B for which \(\mu_{B1}\) is known to equal (or has a high likelihood of equaling) \(\mu_{A1}\), and some population C for which \(\mu_{C0}\) is known to equal (or has a high likelihood of equaling) \(\mu_{A0}\). To create these two groups, we randomly sample from A.
If the randomization is ideal and the treatment cohorts (B and C) are sufficiently large, then we can expect, in probability, that the outcome in B would be the outcome if everyone in A were exposed to \(x_1\), while the outcome in C would be the outcome if everyone in A were exposed to \(x_0\). Thus, what idealized randomization does, when the treatment cohorts created by random selection from the target population are sufficiently large, is to create two sample populations that are exchangeable with A under their respective treatments (\(x_1\) and \(x_0\)). In this way, a sufficiently large, perfectly conducted RCT controls for confounding, in probability, because the randomized allocation into B and C is, in effect, random sampling from the target population A to create reference populations B and C that are exchangeable with A. As Hernán notes, in "ideal randomized experiments, association is causation" [ 31 ].
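The two roles of randomization described above can be illustrated with a small simulation. This is only a sketch under stated assumptions: the population A, the prognostic factor `severity`, the constant effect of 2.0, the cohort sizes and the number of repetitions are all hypothetical choices made for illustration, and none of them come from Greenland, Robins and Pearl. Because the simulation assigns both potential outcomes to every unit of A, the true contrast between the two exposures is known, and the estimates from repeated idealized trials can be compared against it.

```python
import random
import statistics


def simulate_rct(y0, y1, cohort_size, rng):
    """Run one idealized RCT on population A.

    Cohorts B and C are drawn at random from A. The estimate is the mean
    outcome in B (exposed to x1) minus the mean outcome in C (exposed to x0).
    """
    units = rng.sample(range(len(y0)), 2 * cohort_size)
    cohort_b, cohort_c = units[:cohort_size], units[cohort_size:]
    mean_b = statistics.fmean(y1[i] for i in cohort_b)
    mean_c = statistics.fmean(y0[i] for i in cohort_c)
    return mean_b - mean_c


rng = random.Random(0)
population_size = 2000

# Hypothetical population A: a prognostic factor influences the outcome
# under both exposures (illustrative assumption).
severity = [rng.gauss(0, 1) for _ in range(population_size)]
y0 = [10 + 3 * s + rng.gauss(0, 1) for s in severity]  # outcome under x0
y1 = [value + 2.0 for value in y0]                     # outcome under x1

# The causal contrast for A, known here only because both potential
# outcomes were simulated for every unit.
true_effect = statistics.fmean(y1) - statistics.fmean(y0)

results = {}
for cohort_size in (10, 500):
    estimates = [simulate_rct(y0, y1, cohort_size, rng) for _ in range(400)]
    results[cohort_size] = (statistics.fmean(estimates), statistics.stdev(estimates))

print(f"true effect: {true_effect:.2f}")
for cohort_size, (average, spread) in results.items():
    print(f"cohort size {cohort_size}: average estimate {average:.2f}, spread {spread:.2f}")
```

Under these assumptions, the average of the estimates over repetitions should sit close to the true effect for both cohort sizes, which corresponds to statistical unbiasedness. The spread of the individual estimates should be much smaller for the larger cohorts, which corresponds to Greenland's point that severe chance confounding becomes improbable as the treatment cohorts grow.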

Hernán's claim that in idealized randomized experiments, "association is causation", is a contemporary restatement of a view presented earlier by the English statistician and geneticist R. A. Fisher. According to Fisher, "to justify the conclusions of the theory of estimation, and the tests of significance as applied to counts or measures arising in the real world, it is logically necessary that they too must be the results of a random process" [ 32 ]. It is this contention, captured succinctly by Hernán, that is the centerpiece of the widely held belief that randomized clinical trials (RCTs) are, and ought to be, the "gold standard" of evaluating the causal efficacy of interventions (treatments) [ 33 – 36 ]. Thus, Machin writes that it is likely that "the single most important contribution to the science of comparative clinical trials was the recognition more than 50 years ago that patients should be allocated the options under consideration at random" [ 37 ]. Similarly, while she believes that the value of RCTs depends crucially on the subject matter and the assumptions one is willing to make [ 38 ], Cartwright notes that many evidence-based policies call for scientific evidence of efficacy before being agreed to, and that government and other agencies typically claim that the best evidence for efficacy comes from RCTs [ 39 ].

Although RCTs are generally considered the gold standard of research whose goal is to make justified causal inferences, it should come as no surprise that there is a variety of limitations associated with their use. Some of these limitations are practical. For example, not only are RCTs typically expensive and time-consuming, but important ethical questions are also raised when needed resources that are otherwise limited or scarce are randomly allocated. Similarly, it seems reasonable to worry about the ethical permissibility of an RCT when its use requires withholding a potentially beneficial treatment from people who might otherwise benefit from being recipients of the treatment. In addition to these practical concerns, there is also a variety of methodological limitations. Even if an idealized RCT is internally valid, generalizations from it to a wider population may be very limited. As noted by Silverman, a "review of epidemiological data and inclusion and exclusion criteria for trials of antipsychotic treatments revealed that only 632 of an estimated 36,000 individuals with schizophrenia would meet basic eligibility requirements for participation in a randomized controlled experiment" [ 40 ]. In such cases, even if there are no problems with differential attrition, the exportation of a finding from the experimental population to a target population may well go beyond what is justified by the use of RCTs. Even more generally, there is no guarantee either that the observable substitute for the target population under the counterfactual condition is a "good" substitute, or that a single RCT will result in a division in which possible confounders of the measured outcome are randomly distributed.
Regarding the latter point, Worrall remarks that even for an impeccably designed and carried out RCT, "all agree that in any particular case this may produce a division which is, once we think about it, imbalanced with respect to some factor that plays a significant role in the outcome being measured" [ 41 ]. While it may be possible to reduce the probability of such baseline imbalances by multiple repetitions of the RCT, these repetitions, whose function is to give the limiting average effect [ 42 ], may not be practically feasible. Moreover, at least when the repetitions are "real life" repetitions and not computer simulations, there is no reason to believe that each of the repetitions will be "ideal", and more reasons to believe that they will not all be ideal. For this reason, multiple (real life) repetitions of the RCT are more likely to increase the likelihood of other kinds of bias, such as differential attrition, not controlled for by use of an RCT.
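The point about chance imbalance in a single RCT can be made concrete with a short simulation. This is a sketch under assumptions that are not in the sources cited here: a single binary prognostic factor with a hypothetical prevalence of 30%, two equal arms, and an arbitrary cutoff that calls a division "imbalanced" when the arms differ by more than 10 percentage points in how many units carry the factor.

```python
import random


def imbalance_probability(arm_size, prevalence, repetitions, rng):
    """Estimate how often one random allocation leaves the two arms
    differing by more than 10 percentage points on a binary factor."""
    imbalanced = 0
    for _ in range(repetitions):
        # Hypothetical trial participants: True means the factor is present.
        units = [rng.random() < prevalence for _ in range(2 * arm_size)]
        rng.shuffle(units)  # random allocation: first half treated, rest control
        treated_count = sum(units[:arm_size])
        control_count = sum(units[arm_size:])
        # Integer comparison avoids floating-point edge cases at the cutoff.
        if abs(treated_count - control_count) * 10 > arm_size:
            imbalanced += 1
    return imbalanced / repetitions


rng = random.Random(1)
probabilities = {
    arm_size: imbalance_probability(arm_size, prevalence=0.3, repetitions=1000, rng=rng)
    for arm_size in (20, 500)
}

for arm_size, probability in probabilities.items():
    print(f"arm size {arm_size}: estimated probability of imbalance {probability:.3f}")
```

Under these assumptions, a noticeable share of the small trials should come out imbalanced on the factor even though each allocation is perfectly random, while such divisions should be rare with the larger arms. The sketch covers only one measured factor; Worrall's concern extends to any factor, measured or not, that plays a role in the outcome.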

Of course, there is a variety of approaches that one can take in attempting to meet these and other limitations of RCTs. While not intending to downplay the importance of RCTs and the attempts to address the limitations associated with their use, much of the data used by people interested in making causal claims do not come from experiments that use random allocation to control and treatment or comparison groups. Indeed, as Herbert Smith writes, few "pieces of social research outside of social psychology are based on experiments" [ 43 ]. Thus, one of the most important problems in the social and health sciences, as well as in epidemiology, concerns whether it is possible to make warranted causal claims using non-experimental, observational data. The focus on observational data, as opposed to experimental data, leads us away from RCTs and towards an examination of what Weed has called the "most familiar component of the epidemiologist's approach to causal inference", viz., "causal criteria" [ 44 ]. In the context suggested by the quotation from Weed, the argument presented in this paper has three parts. First, I argue that, properly understood, causal inferences that make use of causal criteria, exemplified by the Bradford Hill "criteria", are neither deductive nor inductive in character. Instead, such inferences are best understood as instances of what philosophers call "inference to the best explanation". Second, I argue that even understood as components of an inference to the best explanation (the causal claim being the best explanation), causal criteria have many problems, and that the inferences their use sanctions are, at best, very weak. Finally, I conclude that while the inferential power of causal criteria is weak, they still have a pragmatic value; they are tools, in the toolkits of people interested in making causal claims, for preliminary assessments of statistical associations.
To vary a remark by Mazlack about "association rules", while satisfactions of causal criteria (such as the Bradford Hill criteria with which this paper principally deals) do not warrant causal claims, their judicious application is important and, perhaps in many cases, indispensable for identifying interesting statistical relationships that can then be subjected to a further, more analytically rigorous statistical examination [ 45 ].