
Systems biology primer: the basic methods and approaches

Affiliations.

- 1 Department of Pharmacological Sciences and Systems Biology Center New York, Icahn School of Medicine at Mount Sinai, New York, NY 10029, U.S.A.

- 2 Department of Pharmacological Sciences and Systems Biology Center New York, Icahn School of Medicine at Mount Sinai, New York, NY 10029, U.S.A.

- PMID: 30287586

- DOI: 10.1042/EBC20180003

Systems biology is an integrative discipline connecting the molecular components within a single biological scale and also among different scales (e.g. cells, tissues and organ systems) to physiological functions and organismal phenotypes through quantitative reasoning, computational models and high-throughput experimental technologies. Systems biology uses a wide range of quantitative experimental and computational methodologies to decode information flow from genes, proteins and other subcellular components of signaling, regulatory and functional pathways to control cell, tissue, organ and organismal level functions. The computational methods used in systems biology provide systems-level insights to understand interactions and dynamics at various scales, within cells, tissues, organs and organisms. In recent years, the systems biology framework has enabled research in quantitative and systems pharmacology and precision medicine for complex diseases. Here, we present a brief overview of current experimental and computational methods used in systems biology.

Keywords: Systems Biology; bioinformatics; biological networks; computational biology; computational models; personalized medicine.

© 2018 The Author(s). Published by Portland Press Limited on behalf of the Biochemical Society.


Experimental Methods in Systems Biology

Icahn School of Medicine at Mount Sinai via Coursera

- Introduction

- Deep mRNA Sequencing

- Mass Spectrometry-Based Proteomics

- Midterm Exam

- Flow and Mass Cytometry for Single Cell Protein Levels and Cell Fate

- Live-cell Imaging for Single Cell Protein Dynamics

- Integrating and Interpreting Datasets with Network Models and Dynamical Models

Marc Birtwistle

- Published: December 2006

Systems biology for beginners

- Daniel Evanko

Nature Methods volume 3, pages 964–965 (2006)

A web and print Focus on systems biology from Nature Publishing Group provides a practical introduction to a field that for all its promise still has many skeptics.

Evanko, D. Systems biology for beginners. Nat Methods 3, 964–965 (2006). https://doi.org/10.1038/nmeth1206-964b

Experimental Methods in Systems Biology

About this course: Learn about the technologies underlying experimentation used in systems biology, with particular focus on RNA sequencing, mass spec-based proteomics, flow/mass cytometry and live-cell imaging. A key driver of the systems biology field is the technology allowing us to delve deeper and wider into how cells respond to experimental perturbations. This in turn allows us to build more detailed quantitative models of cellular function, which can give important insight into applications ranging from biotechnology to human disease. This course gives a broad overview of a variety of current experimental techniques used in modern systems biology, with focus on obtaining the quantitative data needed for computational modeling purposes in downstream analyses. We dive deeply into four technologies in particular: mRNA sequencing, mass spectrometry-based proteomics, flow/mass cytometry, and live-cell imaging. These techniques are often used in systems biology and range from genome-wide coverage to single molecule coverage, millions of cells to single cells, and single time points to frequently sampled time courses. We present not only the theoretical background upon which these technologies work, but also enter real wet lab environments to provide instruction on how these techniques are performed in practice, and how the resulting data are analyzed for quality and content.

Taught by: Marc Birtwistle, PhD, Assistant Professor

Introduction

- Video: Lecture 1 - Scope and Overview

- Reading: Lecture Slides

- Video: Lecture 2 - Biological Model Systems

- Video: Lecture 3 - Experimental Perturbations

- Reading: Lecture Slide

- Video: Lecture 4 - Measuring Nucleic Acids

- Video: Lecture 5 - Measuring Protein and Protein States

- Reading: Reading List

Deep mRNA Sequencing

- Video: Lecture 1 - History of Sequencing

- Video: Lecture 2 - 2nd and 3rd Generation Sequencing

- Video: Lecture 3 - Illumina-Based mRNA Sequencing

- Video: Lecture 4 - Lab-Based Video

- Video: Lecture 5 - mRNA Sequencing Data Analysis

Mass Spectrometry-Based Proteomics

- Video: Lecture 1 - Basics of Mass Spectrometry 1

- Video: Lecture 2 - Basics of Mass Spectrometry 2

- Video: Lecture 3 - Quantification in Proteomics

- Video: Lecture 4 - Lab-Based Video - Proteomics Day 1

- Video: Lecture 5 - Lab-Based Video - Proteomics Day 2

- Video: Lecture 6 - Lab-Based Video - Analysis

Flow and Mass Cytometry for Single Cell Protein Levels and Cell Fate

- Video: Lecture 1 - Flow Cytometry 1

- Video: Lecture 2 - Flow Cytometry 2

- Video: Lecture 3 - Mass Cytometry

- Video: Lecture 4 - Cytometry Data Analysis

- Video: Lecture 5 - Lab-Based Video - Flow Cytometry - Acquisition

- Video: Lecture 6 - Lab-Based Video - Flow Cytometry - Analysis

- Video: Lecture 7 - Lab-Based Video - Mass Cytometry

Live-cell Imaging for Single Cell Protein Dynamics

- Video: Lecture 1 - Fluorescence Microscopy

- Video: Lecture 2 - Types of Imaging

- Video: Lecture 3 - Visualizing Molecules in Living Cells: Fluorescent Tools

- Video: Lecture 4 - Quantification

- Video: Lecture 5 - Lab-Based Video

Integrating and Interpreting Datasets with Network Models and Dynamical Models

- Video: Lecture 1 - Omics data and Network Model Analyses

- Video: Lecture 2 - Single Cell Time Course Data and Dynamical Model Analyses

- Video: Lecture 3 - Dynamical Model Case Study

Course details.

- Week 1 - Introduction

- Week 2 - Deep mRNA Sequencing

- Week 3 - Mass Spectrometry-Based Proteomics

- Week 4 - Midterm Exam

- Week 5 - Flow and Mass Cytometry for Single Cell Protein Levels and Cell Fate

- Week 6 - Live-cell Imaging for Single Cell Protein Dynamics

- Week 7 - Integrating and Interpreting Datasets with Network Models and Dynamical Models

- Week 8 - Final Exam

Instructors.

Marc Birtwistle, PhD Assistant Professor Department of Pharmacology and Systems Therapeutics, Systems Biology Center New York (SBCNY)

- Methodology Article

- Open access

- Published: 01 December 2014

A Bayesian active learning strategy for sequential experimental design in systems biology

- Edouard Pauwels

- Christian Lajaunie

- Jean-Philippe Vert

BMC Systems Biology volume 8, Article number: 102 (2014)

Dynamical models used in systems biology involve unknown kinetic parameters. Setting these parameters is a bottleneck in many modeling projects, which motivates estimating them from empirical data. However, this estimation problem has its own difficulties, the most important being severe ill-conditioning. In this context, optimizing the experiments to be conducted in order to better estimate a system’s parameters is a promising way to alleviate the difficulty of the task.

Borrowing ideas from Bayesian experimental design and active learning, we propose a new strategy for optimal experimental design in the context of kinetic parameter estimation in systems biology. We describe algorithmic choices that make the method computationally tractable and fully automatic. Based on simulation, we show that it outperforms alternative baseline strategies, and demonstrate the benefit of considering multiple posterior modes of the likelihood landscape, as opposed to traditional schemes based on local and Gaussian approximations.

This analysis demonstrates that our new, fully automatic Bayesian optimal experimental design strategy has the potential to support the design of experiments for kinetic parameter estimation in systems biology.

Systems biology emerged a decade ago as the study of biological systems where interactions between relatively simple biological species generate overall complex phenomena [1]. Quantitative mathematical models, coupled with experimental work, now play a central role in analyzing, simulating and predicting the behavior of biological systems. For example, ordinary differential equation (ODE) based models, which are the focus of this work, have proved very useful for modeling numerous regulatory, signaling and metabolic pathways [2]-[4], including the cell cycle in budding yeast [5], the regulatory module of the nuclear factor κB (NF-κB) signaling pathway [6],[7], the MAP kinase signaling pathways [8] and the caspase function in apoptosis [9].

Such dynamical models involve unknown parameters, such as kinetic parameters, that one must guess from prior knowledge or estimate from experimental data in order to analyze and simulate the model. Setting these parameters is often challenging, and constitutes a bottleneck in many modeling projects [3],[10]. On the one hand, fixing parameters from estimates obtained in vitro with purified proteins may not adequately reflect the true activity in the cell, and is usually only feasible for a handful of parameters. On the other hand, optimizing parameters to reflect experimental data on how some observables behave under various experimental conditions is also challenging: some parameters may not be identifiable, or may only be estimated with large errors, because systematic quantitative measurements covering all variables involved in the system are frequently lacking. Many authors have found, for example, that fitting parameters to experimental observations in nonlinear models is a very ill-conditioned and multimodal problem, a phenomenon sometimes referred to as sloppiness [11]-[17], a concept closely related to that of identifiability in system identification theory [18],[19]; see also [20] for a recent review. When the system has more than a few unknown parameters, computational issues also arise in efficiently sampling the parameter space [21],[22], which has been found to be very rugged and sometimes misleading, in the sense that many parameter sets that fit the experimental data well are meaningless from a biological point of view [23].

Optimizing the experiments to be conducted in order to alleviate non-identifiabilities and better estimate a system’s parameters therefore provides a promising direction to alleviate the difficulty of the task, and has already been the subject of much research in systems biology [20],[24]. Some authors have proposed strategies involving random sampling of parameters near the optimal one, or at least coherent with available experimental observations, and systematic simulations of the model with these parameters in order to identify experiments that would best reduce the uncertainty about the parameters [25]-[27]. A popular way to formalize and implement this idea is to follow the theory of Bayesian optimal experimental design (OED) [28],[29]. In this framework, approximating the model by a linear model (and the posterior distribution by a normal distribution) leads to the well-known A-optimal [30],[31] or D-optimal [32]-[36] experimental designs, which optimize a property of the Fisher information matrix (FIM) at the maximum likelihood estimator. FIM-based methods have the advantage of being simple and computationally efficient, but their drawback is that the assumption that the posterior probability is well approximated by a unimodal, normal distribution is usually too strong. To overcome this difficulty at the expense of computational burden, other methods that sample the posterior distribution with Markov chain Monte Carlo (MCMC) techniques have also been proposed [37],[38]. When the goal of the modeling approach is not to estimate the parameters per se, but to understand and simulate the system, other authors have also considered the problem of experimental design to improve the predictions made by the model [39]-[41], or to discriminate between different candidate models [42]-[45].

In this work we propose a new general strategy for Bayesian OED, and study its relevance for kinetic parameter estimation in the context of systems biology. As opposed to classical Bayesian OED strategies, which select the experiment that most reduces the uncertainty in parameter estimation, itself quantified by the variance or the entropy of the posterior parameter distribution, we formulate the problem in a decision-theoretic framework where we wish to minimize an error function quantifying how far the estimated parameters are from the true ones. For example, if we focus on the squared error between the estimated and true parameters, our method attempts to minimize not only the variance of the estimates, as in standard A-optimal designs [30],[31], but also a term related to the bias of the estimate. This idea is similar to an approach that was proposed for active learning [46], where instead of just reducing the size of the version space (i.e., the set of models coherent with observed data) the authors propose to directly optimize a loss function relevant for the task at hand. Since the true parameter needed to define the error function is unknown, we follow an approach similar to [46] and average the error function according to the current prior on the parameters. This results in a unique, well-defined criterion that can be evaluated and used to select an optimal experiment.

In the rest of this paper, we provide a rigorous derivation of this criterion, and discuss different computational strategies to evaluate it efficiently. The criterion involves an average over the parameter space according to a prior distribution, for which we designed an exploration strategy that proved to be efficient in our experiments. We implemented the criterion in the context of an iterative experimental design problem, where a succession of experiments with different costs is allowed and the goal is to reach the best final parameter estimation given a budget to be spent, a problem that was made popular by the DREAM 6 and DREAM 7 Network Topology and Parameter Inference Challenge [47]-[49]. We demonstrate the relevance of our new OED strategy on a small simulated network in this context, and illustrate its behavior on the DREAM7 challenge. The method is fully automated, and we provide an R package to reproduce all simulations.

A new criterion for Bayesian OED

In this section we propose a new, general criterion for Bayesian OED. We consider a system whose behavior and observables are controlled by an unknown parameter $\theta^* \in \Theta \subset \mathbb{R}^p$ that we wish to estimate. For that purpose, we can design an experiment $e \in \mathcal{E}$, which in our application will include which observables we observe, when, and under which experimental conditions. The completion of the experiment will lead to an observation $o$, which we model as a random variable generated according to the distribution $o \sim P(o \mid \theta^*; e)$. Note that although $\theta^*$ is unknown, the distribution $P(o \mid \theta; e)$ is supposed to be known for any $\theta$ and $e$, and amenable to simulations; in our case, $P(o \mid \theta; e)$ typically involves the dynamical equations of the system if the parameters are known, and the noise model of the observations.

Our goal is to propose a numerical criterion to quantify how "good" the choice of the experiment $e$ is for the purpose of estimating $\theta^*$. For that purpose, we assume given a loss function $\ell$ such that $\ell(\theta, \theta^*)$ measures the loss associated with an estimate $\theta$ when the true value is $\theta^*$. A typical loss function is the squared Euclidean distance $\ell(\theta, \theta^*) = \|\theta - \theta^*\|^2$, or the squared Euclidean distance after a log transform for positive parameters, $\ell(\theta, \theta^*) = \sum_{i=1}^{p} \left(\log(\theta_i / \theta_i^*)\right)^2$. We place ourselves in a Bayesian setting, where instead of a single point estimate the knowledge about $\theta^*$ at a given stage of the analysis is represented by a probability distribution $\pi$ over the parameter space. The quality of the information it provides can be quantified by the average loss, or risk:

$$\mathbb{E}_{\theta \sim \pi}\, \ell(\theta, \theta^*) = \int \ell(\theta, \theta^*)\, \pi(\theta)\, d\theta.$$

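Once we choose an experiment $e$ and observe $o$, the knowledge about $\theta^*$ is updated and encoded in the posterior distribution

$$\pi(\theta \mid o; e) = \frac{P(o \mid \theta; e)\, \pi(\theta)}{\int P(o \mid \theta'; e)\, \pi(\theta')\, d\theta'}, \tag{1}$$

whose risk is now:

$$\mathbb{E}_{\theta \sim \pi(\cdot \mid o; e)}\, \ell(\theta, \theta^*) = \int \ell(\theta, \theta^*)\, \pi(\theta \mid o; e)\, d\theta.$$

The above expression is for a particular observation $o$. This observation is actually generated according to $P(o \mid \theta^*; e)$. Accordingly, the average risk of the experiment $e$ (if the true parameter is $\theta^*$) is:

$$\mathbb{E}_{o \sim P(\cdot \mid \theta^*; e)}\, \mathbb{E}_{\theta \sim \pi(\cdot \mid o; e)}\, \ell(\theta, \theta^*) = \iint \ell(\theta, \theta^*)\, \pi(\theta \mid o; e)\, P(o \mid \theta^*; e)\, d\theta\, do.$$

Finally, $\theta^*$ being unknown, we average the risk by taking account of the current state of knowledge, and thus according to $\pi$. The expected risk associated with the choice of $e$ when the current knowledge about $\theta^*$ is encoded in the distribution $\pi$ is thus:

$$R(e; \pi) = \iiint \ell(\theta, \theta^*)\, \frac{P(o \mid \theta; e)\, \pi(\theta)}{\int P(o \mid \theta'; e)\, \pi(\theta')\, d\theta'}\, P(o \mid \theta^*; e)\, \pi(\theta^*)\, d\theta\, do\, d\theta^*. \tag{2}$$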

The expected risk R ( e ; π ) of a candidate experiment e given our current estimate of the parameter distribution π is the criterion we propose in order to assess the relevance of performing e . In other words, given a current estimate π , we propose to select the best experiment to perform as the one that minimizes R ( e ; π ). We describe in the next section more precisely how to use this criterion in the context of sequential experimental design where each experiment has a cost.
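The two example losses named in this section, the squared Euclidean distance and its log-transformed variant for positive parameters, can be written down directly. The following is a minimal sketch; the function names `squared_loss` and `log_squared_loss` are illustrative and do not come from the authors' R package.

```python
import numpy as np


def squared_loss(theta, theta_star):
    """Squared Euclidean distance between an estimate and the true parameter."""
    diff = np.asarray(theta, dtype=float) - np.asarray(theta_star, dtype=float)
    return float(diff @ diff)


def log_squared_loss(theta, theta_star):
    """Squared Euclidean distance after a log transform (positive parameters only)."""
    log_ratio = np.log(np.asarray(theta, dtype=float) / np.asarray(theta_star, dtype=float))
    return float(log_ratio @ log_ratio)
```

The log-transformed loss depends only on the ratios between estimated and true values, so it treats a factor-of-two error the same way whether a rate constant is of order 0.01 or of order 100. That property is often convenient for kinetic parameters spanning several orders of magnitude.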

Note that the criterion $R(e; \pi)$ is similar to, but different from, classical Bayesian OED criteria, like the variance criterion used in A-optimal design. Indeed, taking for example the squared Euclidean loss $\ell(\theta, \theta^*) = \|\theta - \theta^*\|^2$ as loss function, and denoting by $\pi_e$ the mean posterior distribution that we expect if we perform experiment $e$, standard A-optimal design tries to minimize the variance of $\pi_e$, while our criterion focuses on:

In other words, our criterion attempts to control both the bias and the variance of the posterior distribution, while standard Bayesian OED strategies only focus on the variance terms. While both criteria coincide with unbiased estimators, there is often no reason to believe that the estimates used are unbiased.
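To make the bias and variance terms concrete, the standard decomposition of the expected squared error can be written out. This is a textbook identity stated under the assumption of the squared Euclidean loss and a fixed true value $\theta^*$; it is not necessarily the exact expression the authors display, and the symbol $\bar{\theta}_e$ for the mean of $\pi_e$ is introduced here for illustration.

$$\mathbb{E}_{\theta \sim \pi_e} \|\theta - \theta^*\|^2 = \underbrace{\|\bar{\theta}_e - \theta^*\|^2}_{\text{squared bias}} + \underbrace{\mathbb{E}_{\theta \sim \pi_e} \|\theta - \bar{\theta}_e\|^2}_{\text{variance}}, \qquad \bar{\theta}_e = \mathbb{E}_{\theta \sim \pi_e}[\theta].$$

The cross term vanishes because $\mathbb{E}_{\theta \sim \pi_e}[\theta - \bar{\theta}_e] = 0$. A criterion that looks only at the second term cannot distinguish a tight posterior centered on the wrong value from a tight posterior centered on the right one.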

Sequential experimental design

In sequential experimental design, we sequentially choose an experiment to perform, and observe the resulting outcome. Given the past experiments $e_1, \ldots, e_k$ and corresponding observations $o_1, \ldots, o_k$, we therefore need to choose the best next experiment $e_{k+1}$ to perform, assuming in addition that each possible experiment $e$ has an associated cost $C_e$ and that we have a limited total budget to spend.

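We denote by $\pi_k$ the distribution over the parameter space representing our knowledge about $\theta^*$ after the $k$-th experiment and observation, with $\pi_0$ representing the prior knowledge we may have about the parameters before the first experiment. According to Bayes’ rule (1), the successive posteriors are related to each other according to:

$$\pi_k(\theta) = \frac{P(o_k \mid \theta; e_k)\, \pi_{k-1}(\theta)}{\int P(o_k \mid \theta'; e_k)\, \pi_{k-1}(\theta')\, d\theta'}.$$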

Although a global optimization problem could be written to optimize the choice of the k -th experiment based on possible future observations and total budget constraint, we propose a simple, greedy formulation where at each step we choose the experiment that most decreases the estimation risk per cost unit. If the cost of all experiments were the same, this would simply translate to:

To take into account the different costs associated with different experiments, we consider as a baseline the mean risk when the knowledge about θ * is encoded in a distribution π over θ :

and choose the experiment that maximally reduces the expected risk per cost unit according to:
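The greedy rule can be sketched in a few lines. This is a minimal illustration, assuming the criterion is read as (baseline risk minus R(e; π_k)) divided by the cost C_e; the function name, the optional budget filter and all numbers are hypothetical and are not taken from the authors' implementation.

```python
def select_experiment(baseline_risk, expected_risks, costs, budget=None):
    """Pick the experiment with the largest expected risk reduction per unit cost.

    baseline_risk  -- mean risk under the current distribution pi_k
    expected_risks -- dict: experiment name -> estimated R(e; pi_k)
    costs          -- dict: experiment name -> cost C_e (positive)
    budget         -- optional remaining budget; unaffordable experiments are skipped
    """
    best, best_gain = None, float("-inf")
    for name, risk in expected_risks.items():
        if budget is not None and costs[name] > budget:
            continue  # cannot be purchased with the remaining credits
        gain = (baseline_risk - risk) / costs[name]
        if gain > best_gain:
            best, best_gain = name, gain
    return best, best_gain


# Toy numbers, invented for illustration only.
risks = {"wildtype_mrna": 8.0, "delete_g7_protein": 3.0, "knockdown_g3_protein": 5.0}
costs = {"wildtype_mrna": 100.0, "delete_g7_protein": 800.0, "knockdown_g3_protein": 350.0}
choice, gain = select_experiment(10.0, risks, costs)

# With identical costs the rule reduces to minimizing the expected risk.
equal_costs = {name: 1.0 for name in risks}
choice_equal, _ = select_experiment(10.0, risks, equal_costs)
```

In this toy setting the cheap experiment wins once costs are taken into account, even though the gene deletion yields the lowest expected risk in absolute terms.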

Evaluating the risk

The expected risk of an experiment R(e; π) (2) involves a double integral over the parameter space and an integral over the possible observations, a challenging setting for practical evaluation. Since no analytical formula can usually be derived to compute it exactly, we now present a numerical scheme that we found efficient in practice. Since the distribution π_k over the parameter space after the k-th experiment cannot be manipulated analytically, we resort to sampling to approximate it and estimate the integrals by Monte Carlo simulations.

Let us suppose that we can generate a sample θ_1, ..., θ_N distributed according to π. Obtaining such a sample itself requires careful numerical considerations discussed in the next section, but we assume for the moment that it can be obtained and show how we can estimate R(e; π) from it for a given experiment e. For that purpose, we write

for 1 ≤ i, j ≤ N, as a discrete estimate of the second integral in equation (2). Since the sample points θ_1, ..., θ_N are independently drawn from π, the prior terms disappear. Moreover, the denominator is a discretization of the denominator in equation (2), and the likelihood P is supposed to be given. We have the standard estimate of (2) by an empirical average:

We see that the quantity w_ij(e) measures how similar the observation profiles are under the two alternatives θ_i and θ_j. A good experiment produces dissimilar profiles, and thus low values of w_ij(e), when θ_i and θ_j are far apart. The resulting risk is reduced accordingly.

For each i and j, the quantity w_ij(e) can in turn be estimated by Monte Carlo simulations. For each θ_i, a sample of the conditional distribution P(o | θ_i; e), denoted by o_u^i (u = 1, ..., M), is generated. The corresponding approximation is:

which can be interpreted as a weighted likelihood of the alternative when the observation is generated according to θ i .

In most settings, generating a sample o u i involves running a deterministic model, to be performed once for each θ i , and degrading the output according to a noise model independently for each u . In our case, we used the solver proposed in [ 50 ] provided in the package [ 51 ] to simulate the ODE systems. Thus, a large number M can be used if necessary at minimal cost. Based on these samples, the approximated weights w ij M can be computed from ( 5 ), from which the expected risk of experiment e can be derived from ( 4 ).

Note that an appealing property of this scheme is that the same sample θ i can be used to evaluate all experiments. We now need to discuss how to obtain this sample.
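The whole estimation scheme can be sketched as follows. This is an illustrative reading rather than the authors' code: it assumes that w_ij(e) is approximated by averaging, over M observations simulated under θ_i, the likelihood of θ_j normalized by the summed likelihoods of all sample points, and that the risk is the average over i of the sum over j of w_ij(e) l(θ_j, θ_i). The scalar toy model, the homoscedastic Gaussian noise and all names are assumptions made for the example.

```python
import math
import random


def expected_risk(thetas, predict, noise_sd, loss, n_obs=200, seed=0):
    """Monte Carlo estimate of the expected risk of one experiment.

    thetas   -- parameter samples, assumed drawn from the current distribution pi
    predict  -- deterministic model output (a float) for a parameter value
    noise_sd -- standard deviation of the additive Gaussian noise (must be > 0)
    loss     -- loss(theta, theta_star)
    """
    rng = random.Random(seed)
    n = len(thetas)
    preds = [predict(t) for t in thetas]  # one deterministic model run per sample
    risk = 0.0
    for i in range(n):
        w_i = [0.0] * n  # row i of the weight matrix
        for _ in range(n_obs):
            o = preds[i] + rng.gauss(0.0, noise_sd)  # observation simulated under theta_i
            # Gaussian log-likelihoods; the shared normalizing constant cancels in the ratio.
            logl = [-0.5 * ((o - p) / noise_sd) ** 2 for p in preds]
            top = max(logl)
            lik = [math.exp(v - top) for v in logl]
            total = sum(lik)
            for j in range(n):
                w_i[j] += lik[j] / (total * n_obs)
        risk += sum(w_i[j] * loss(thetas[j], thetas[i]) for j in range(n))
    return risk / n


def sq_loss(a, b):
    return (a - b) ** 2


sample_rng = random.Random(1)
thetas = [sample_rng.gauss(0.0, 1.0) for _ in range(30)]

# An experiment whose output ignores theta cannot reduce the risk.
flat = expected_risk(thetas, lambda t: 0.0, 1.0, sq_loss)
# An experiment whose output is sensitive to theta separates the alternatives.
sharp = expected_risk(thetas, lambda t: 5.0 * t, 1.0, sq_loss)
```

For the uninformative experiment every weight equals 1/N, so the estimate collapses to the mean pairwise loss of the sample; the informative experiment gives a smaller value.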

Sampling the parameter space

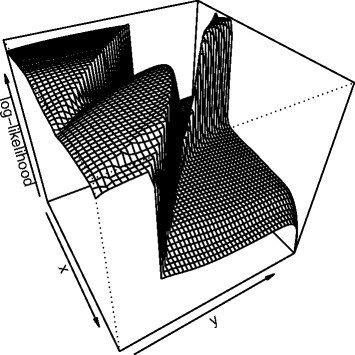

Sampling the parameter space according to π_k, the posterior distribution of parameters after the k-th experiment, is challenging because the likelihood function can exhibit multi-modality, plateaus and abrupt changes, as illustrated in Figure 1. Traditional sampling techniques tend to get stuck in local optima, not accounting for the diversity of high-likelihood areas of the parameter space [52]. In order to speed up the convergence of the sampling algorithm to high posterior density regions, we implemented a Broyden-Fletcher-Goldfarb-Shanno (BFGS) quasi-Newton optimization algorithm, using finite difference approximation for gradient estimation [53], in order to identify several modes of the posterior distribution. We used these local maxima as initial values for a Metropolis-Hastings sampler combining isotropic Gaussian proposals and single-parameter modifications [52]. We then use a Gaussian mixture model approximation to estimate a weighting scheme that accounts for the initialization process when recombining samples from different modes. Annex B, given in Additional file 1, provides computational details for this procedure.

Figure 1. Log likelihood surface for parameters restricted to an area of a two-dimensional plane. For clarity, the scale is not shown. Areas with low log-likelihood correspond to dynamics that do not fit the data at all, while areas with high log-likelihood fit the data very well. The surface shows multi-modality, plateaus and abrupt jumps, which make it difficult to sample from this density. When the parameters do not live on a plane, these difficulties are even more pronounced.
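The reason for starting the sampler from several modes can be illustrated with a small sketch. It implements only a random-walk Metropolis-Hastings sampler with an isotropic Gaussian proposal; the BFGS search, the single-parameter moves and the Gaussian mixture reweighting described above are not reproduced. The bimodal target and the list of modes are hypothetical stand-ins for a real posterior and for the output of a local optimizer.

```python
import math
import random


def metropolis_hastings(log_post, start, n_steps, step_sd, seed=0):
    """Random-walk Metropolis-Hastings with an isotropic Gaussian proposal."""
    rng = random.Random(seed)
    current = list(start)
    current_lp = log_post(current)
    chain = []
    for _ in range(n_steps):
        proposal = [x + rng.gauss(0.0, step_sd) for x in current]
        proposal_lp = log_post(proposal)
        # Symmetric proposal: accept with probability min(1, posterior ratio).
        # 1 - random() lies in (0, 1], which keeps the logarithm finite.
        if math.log(1.0 - rng.random()) < proposal_lp - current_lp:
            current, current_lp = proposal, proposal_lp
        chain.append(list(current))
    return chain


def log_post(theta):
    """Toy bimodal log-density: two well separated Gaussian bumps at -3 and +3."""
    x = theta[0]
    a = -0.5 * ((x + 3.0) / 0.5) ** 2
    b = -0.5 * ((x - 3.0) / 0.5) ** 2
    top = max(a, b)
    return top + math.log(math.exp(a - top) + math.exp(b - top))


modes = [[-3.0], [3.0]]  # stand-ins for the local maxima returned by an optimizer
chains = [metropolis_hastings(log_post, m, 2000, 0.3, seed=k) for k, m in enumerate(modes)]
means = [sum(state[0] for state in chain) / len(chain) for chain in chains]
```

Each chain stays in the basin where it was started, so a single chain would miss half of the posterior mass. Pooling chains started from different modes recovers both regions, but the pooled sample then has to be reweighted, which is the role of the mixture approximation mentioned in the text.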

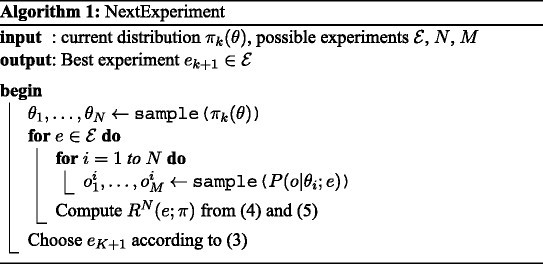

The method described in Algorithm 1 is independent of the sampling scheme used. However, convergence of posterior samples is essential to ensure good behaviour of the method. First, it is known that improper (or "flat") priors may lead to improper posterior distributions when the model contains non-identifiabilities. MCMC-based sampling schemes are known not to converge in these cases, so proper prior distributions are essential in this context. The second important element is numerical convergence of the sampling scheme, which is usually guaranteed only asymptotically. Fine-tuning the parameters that drive the scheme is necessary to ensure that one is close to convergence in a reasonable amount of time. To check appropriate sampling behaviour, we use a graphical heuristic. We draw ten different samples from the same posterior distribution, using different initialization seeds. For each model parameter, we compare the dispersion within each sample to the total dispersion obtained by concatenating the ten samples. The ratio of the two should be close to one. Such a heuristic can be used to tune parameters of the sampler, such as sample size or proposal distribution. More details and numerical results are given in Additional file 1: Annex B.
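A numerical version of this check might look as follows for a single parameter. The text does not say which dispersion measure is used, so the standard deviation is assumed here, and the function name is hypothetical.

```python
import statistics


def dispersion_ratios(chains):
    """Ratio of within-sample to pooled standard deviation, one value per sample.

    chains -- list of samples (lists of floats) for one model parameter, each
              drawn with a different initialization seed. The pooled sample is
              assumed not to be constant.
    """
    pooled = [x for chain in chains for x in chain]
    total_sd = statistics.pstdev(pooled)
    return [statistics.pstdev(chain) / total_sd for chain in chains]


# Two samples that explore the same region give ratios of one.
agree = dispersion_ratios([[0.0, 1.0, 2.0, 3.0], [0.0, 1.0, 2.0, 3.0]])
# Two samples stuck in different regions give ratios well below one.
stuck = dispersion_ratios([[0.0, 1.0, 2.0, 3.0], [10.0, 11.0, 12.0, 13.0]])
```

Ratios well below one indicate that individual runs explore only part of the region covered by the pooled sample, which suggests increasing the sample size or adjusting the proposal distribution.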

Enforcing regularity through the prior distribution

The prior distribution π 0 plays a crucial role at early stages of the design, as it can penalize parameters leading to dynamical behaviors that we consider unlikely. In addition to a large variance log normal prior, we considered penalizing parameters leading to non smooth time trajectories. This is done by adding to the prior log density a factor that depends on the maximum variation of time course trajectories as follows. To each parameter value θ are associated trajectories, Y i , t , which represent concentration values of the i -th species at time t . In the evaluation of the log prior density at θ , we add a term proportional to

The advantage of this is twofold. First, it is reasonable to assume that variables we do not observe in a specific design vary smoothly with time. Second, this penalization makes it possible to avoid regions of the parameter space corresponding to very stiff systems, which are poor numerical models of reality and whose simulation is computationally demanding or simply makes the solver fail. This penalty term is only used in the local optimization phase, not during the Monte Carlo exploration of the posterior. The main reason for adopting such a scheme is numerical stability.

The choice of prior parameters directly affects the posterior distribution, especially when little data is available. In our experiments, the prior is chosen to be log-normal with large variance. This covers a wide range of potential physical values for each parameter (from $10^{-9}$ to $10^{9}$). The weight of the regularity-enforcing term also has to be determined. Since the purpose is to avoid regions corresponding to numerically unstable systems, we chose this weight to be relatively small compared to the likelihood term. In practical applications, parameters have to be chosen by considering the physical scale of the quantities to be estimated. Indeed, a wrong choice of hyperparameter leads to very biased estimates at the early stages of the design.

Results and discussion

In silico network description.

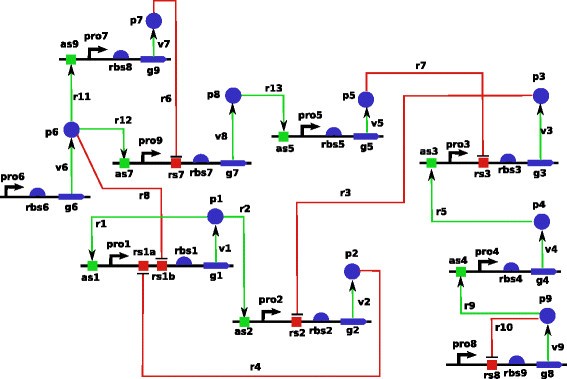

In order to evaluate the relevance of our new sequential Bayesian OED strategy in the context of systems biology, we test it on an in silico network proposed in the DREAM7 Network Topology and Parameter Inference Challenge, which we now describe [49]. The network, represented graphically in Figure 2, is composed of 9 genes, and its dynamics are governed by ordinary differential equations representing kinetic laws involving 45 parameters. Promoting reactions are represented by green arrows and inhibitory reactions are depicted by red arrows. For each of the 9 genes, both protein and messenger RNA are explicitly modelled, so the model contains 18 continuous variables. Promoter strength controls the transcription reaction and ribosomal strength controls the protein synthesis reaction. Decay of messenger RNA and protein concentrations is controlled through degradation rates. A complete description of the underlying differential equations is found in Additional file 2: Annex A. The complete network description and implementations of integrators to simulate its dynamics are available from [49].

Figure 2. Gene network for the DREAM7 Network Topology and Parameter Inference Challenge. Promoting reactions are represented by green arrows and inhibitory reactions are depicted by red arrows.

Various experiments can be performed on the network, producing new time course trajectories in unseen experimental conditions. An experiment consists of choosing an action to perform on the system and deciding which quantity to observe. The possible actions are:

- do nothing (wild type);
- delete a gene (remove the corresponding species);
- knock down a gene (increase the messenger RNA degradation rate tenfold);
- decrease gene ribosomal activity (decrease the parameter value tenfold).

These actions are coupled with 38 possible observable quantities:

- messenger RNA concentration for all genes, at two possible time resolutions (2 possible choices);
- protein concentration for a single pair of proteins, at a single resolution (resulting in 9×8/2 = 36 possible choices).

Purchasing data consists of selecting an action and an observable quantity. In addition, it is possible to estimate the constants (binding affinity and Hill coefficient) of one of the 13 reactions in the system. Different experiments and observable quantities have different costs, the objective being to estimate unknown parameters as accurately as possible, given a fixed initial credit budget. The costs of the possible experiments are described in Table S1 in Additional file 2: Annex A.

For simulation purposes, we fix an unknown parameter value θ* to control the dynamics of the system, and the risk of an estimator is defined in terms of the loss function $l(\theta, \theta^*) = \sum_{i=1}^{p} \left(\log(\theta_i / \theta_i^*)\right)^2$.

The noise model used for data corruption is heteroscedastic Gaussian: given the true signal y ∈ ℝ + , the corrupted signal has the form y + z 1 + z 2 , where z 1 and z 2 are centered normal variables with standard deviation 0.1 and (0.2× y ), respectively.
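The loss and the noise model can be written down directly. The sketch below assumes a natural logarithm, a loss equal to the sum of squared log-ratios, and independence between z1 and z2; none of these details is stated explicitly in the text, and the function names are hypothetical.

```python
import math
import random


def log_ratio_loss(theta, theta_star):
    """Sum over parameters of the squared log-ratio between estimate and truth."""
    return sum(math.log(a / b) ** 2 for a, b in zip(theta, theta_star))


def noise_sd(y):
    """Standard deviation of z1 + z2 for a true signal y, assuming independent terms."""
    return math.sqrt(0.1 ** 2 + (0.2 * y) ** 2)


def corrupt(y, rng):
    """Return y + z1 + z2 with sd(z1) = 0.1 and sd(z2) = 0.2 * y (y >= 0)."""
    return y + rng.gauss(0.0, 0.1) + rng.gauss(0.0, 0.2 * y)


rng = random.Random(0)
noisy = [corrupt(y, rng) for y in (0.0, 1.0, 10.0)]
```

Because the loss depends only on ratios, overestimating and underestimating a parameter by the same factor cost the same, which suits parameters whose plausible values span many orders of magnitude. The noise standard deviation grows from 0.1 at zero signal to roughly 2 at a concentration of 10, so high concentrations are measured less precisely in absolute terms.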

Performance on a 3-gene subnetwork

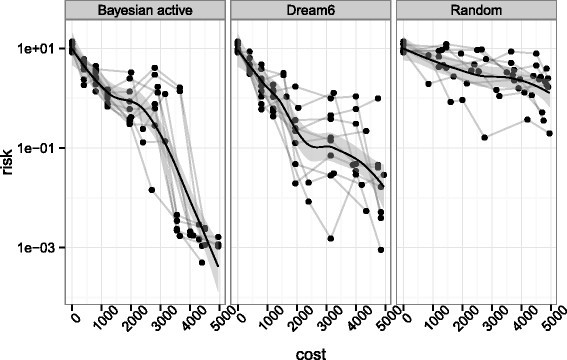

In order to assess the performance of our sequential OED strategy in an easily reproducible setting, we first compare it to other strategies on a small network made of 3 genes. We take the same architecture as in Figure 2 , only considering proteins 6, 7 and 8. The resulting model has 6 variables (the mRNA and protein concentrations of the three genes) whose behavior is governed by 9 parameters. There are 50 possible experiments to choose from for this sub network: 10 perturbations (wildtype and 3 perturbations for each gene) and 5 observables (mRNA concentrations at two different time resolutions and each protein concentration at a single resolution). We compare three ways to sequentially choose experiments in order to estimate the 9 unknown parameters: (i) our new Bayesian OED strategy, including the multimodal sampling of parameter space, (ii) the criterion proposed in equation ( 13 ) in [ 27 ] together with our posterior exploration strategy, and (iii) a random experimental design, where each experiment not done yet is chosen with equal probability. The comparison of (i) and (ii) is meant to compare our strategy with a criterion that proved to be efficient in a similar setting. The comparison to (iii) is meant to assess the benefit, if any, of OED for parameter estimation in systems biology. Since all methods involve randomness, we repeat each experiment 10 times with different pseudo-random number generator seeds.
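The count of 50 candidate experiments, and the random baseline (iii), can be reproduced with a short enumeration. The labels below are hypothetical names chosen for readability; only the counts come from the text.

```python
import random
from itertools import product

genes = [6, 7, 8]

# Wild type, plus three perturbation types for each gene.
perturbations = ["wildtype"] + [
    f"{kind}_gene{g}"
    for g in genes
    for kind in ("delete", "knockdown", "decrease_ribosomal_activity")
]

# mRNA at two time resolutions, plus each protein at a single resolution.
observables = ["mrna_low_resolution", "mrna_high_resolution"] + [
    f"protein{g}" for g in genes
]

experiments = list(product(perturbations, observables))


def random_design(experiments, done, rng):
    """Strategy (iii): pick uniformly among the experiments not performed yet."""
    remaining = [e for e in experiments if e not in done]
    return rng.choice(remaining)


rng = random.Random(0)
done = set()
for _ in range(3):
    done.add(random_design(experiments, done, rng))
```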

The results are presented in Figure 3, where we show, for each of the three methods, the risk of the parameter estimation as a function of the budget used. Here the risk is defined as the loss between the true parameter θ* (unknown to the method) and the estimated mean of the posterior distribution. After k rounds of experimental design, one has access to k experimental datasets which define a posterior distribution π_k from which a sample $\{\theta_i^k\}_{i=1}^{N}$ is drawn. The quantities displayed in Figure 3 are computed as

which is the true risk that one would have to bear. We first observe that the random sampling strategy has the worst risk among the three strategies, suggesting that optimizing the experiments to be made for parameter estimation outperforms a naive random choice of experiments. Second, and more importantly, the comparison between the first and second panels suggests that, given the same parameter space exploration heuristic, our proposed strategy outperforms the criterion given in [27]. It is worth noting that this criterion is part of a strategy that performed best in the DREAM6 parameter estimation challenge. Although a large part of their design procedure involved human choice, which we did not implement, we reproduced the part of their procedure that could be automated. A companion to Figure 3 is given in Figure S3 in Additional file 1: Annex B, where we illustrate, based on parameter samples, how lack of identifiability manifests itself in a Bayesian context and how the design strategy alleviates it in terms of posterior distribution. In summary, this small experiment validates the relevance of our Bayesian OED strategy.

Figure 3. Comparison of risk evolution between different strategies on a subnetwork. The figure shows the true risk at each step of the procedure, i.e. the approximate posterior distribution is compared to the true underlying parameter, which is unknown during the process. The risk is computed at the center of the posterior sample. The different lines represent 10 repeats of the design procedure given the same initial credit budget, and the points represent experiment purchases. The first panel represents our strategy and the second panel implements the criterion of the best performing team in the DREAM6 challenge, while random design consists of choosing experiments randomly.

Results on the full DREAM7 network

To illustrate the behavior of our OED strategy in a more realistic context, we then apply it to the full network of Figure 2 following the setup of the DREAM7 challenge. At the beginning of the experiment, we already have at hand low resolution mRNA time courses for the wild type system. The first experiments chosen by the method are wild-type protein concentration time courses for all genes. This makes sense since we have enormous uncertainty about protein time courses, given that we do not know anything about them. The detailed list of purchased experiments is found in Table S2 in Additional file 2: Annex A. Once these protein time series are purchased, the suggestion for the next experiment to carry out is illustrated in Table 1. Interestingly, the perturbations with the lowest risk are related to gene 7, which is at the top of the cascade (see Figure 2). Moreover, it is clear from Table 1 that we have to observe the protein 8 concentration. Indeed, Figure 4 shows that there is a lot of uncertainty about the evolution of protein 8 when we remove gene 7.

Figure 4. Trajectories from the posterior sample, corresponding to the figures in Table 1. We plot trajectories from our posterior sample (protein 8 concentration was divided by 2 and we do not plot concentrations higher than 100). The quantities with the highest variability are the concentrations of proteins 8 and 3. This is consistent with the estimated risks in Table 1. There is quite a bit of uncertainty in protein 5 concentration; however, this is related to protein 8 uncertainty, as protein 8 is an inhibitor of protein 5. Moreover, mRNA concentrations have much lower values and are not as informative as protein concentrations. Red dots show the data we purchased for this experiment after seeing these curves, in accordance with the results in Table 1.

Moreover, our criterion determines that it is better to observe protein 3 than protein 5, which makes sense since the only protein that affects the evolution of protein 5 is protein 8 (see Figure 2). Therefore, uncertainty about the protein 5 time course is tightly linked to the protein 8 time course, and observing protein 3 brings more information than observing protein 5. This might not be obvious when looking at the graph in Figure 4, and could not have been foreseen by a method that considers uncertainty about each protein independently. At this point, we purchase protein 3 and 8 time courses for the gene 7 deletion experiment and highlight in red in Figure 4 the profiles of proteins 3 and 8 obtained from the system.

In addition to parameter estimation, one may be interested in the ability of the model with the inferred parameters to correctly simulate time series under different experimental conditions. Figure 5 represents a sample from the posterior distribution after all credits have been spent (the description of the unseen experiment is given in Table S3 in Additional file 2: Annex A). Both parameter values and protein time courses for the unseen experiment are presented.

Figure 5. Comparison of parameter variability and time course trajectory variability. This is a sample from the posterior distribution after spending all the credits in the challenge. The top of the figure shows parameter values on a log scale, while the bottom shows predictions of protein time courses for an unseen experiment. The range of some parameter values is very wide, yet all these very different values lead to very similar protein time course predictions.

Some parameters, like p_degradation_rate or pro3_strength, clearly concentrate around a single value while others, like pro1_strength or pro2_strength, have very wide ranges with multiple accumulation points. Despite this variability in parameter values, the protein time course trajectories are very similar. It appears that the protein 5 time course is less concentrated than the two others. This is due to the heteroscedasticity of the noise model, which is reflected in the likelihood. Indeed, the noise model is Gaussian with standard deviation increasing with the value of the corresponding concentration. Higher concentrations are harder to estimate due to the larger noise standard deviation.

Computational systems biology increasingly relies on the heavy use of computational resources to improve the understanding of the complexity underlying cell biology. A widespread approach in computational systems biology is to specify a dynamical model of the biological process under investigation based on biochemical knowledge, and to consider that the real system follows the same dynamics for some kinetic parameter values. Recent reports suggest that this has benefits in practical applications (e.g. [54]). A systematic implementation of the approach requires dealing with the fact that most kinetic parameters are unknown, raising the issue of estimating these parameters from experimental data as efficiently as possible. An obvious sanity check is to recover kinetic parameters from synthetic data where the dynamics and noise model are well specified, which is already quite a challenge.

In this paper we proposed a new general Bayesian OED strategy, and illustrated its relevance on an in silico biological network. The method takes advantage of the Bayesian framework to sequentially choose experiments to be performed, in order to estimate these parameters subject to cost constraints. The method relies on a single numerical criterion and does not depend on a specific instance of this problem. This is, in our opinion, a key point in order to deal reproducibly with large scale networks, of a size comparable to that of a cell for example. Experimental results suggest that the strategy has the potential to support experimental design in systems biology.

As noted by others [ 11 ],[ 12 ],[ 15 ]-[ 17 ], the approach focusing on kinetic parameter estimation is questionable. We also give empirical evidence that very different parameter values can produce very similar dynamical behaviors, potentially leading to non-identifiability issues. Moreover, focusing on parameter estimation supposes that the dynamical model represents the true underlying chemical process. In some cases, this might simply be false. For example, hypotheses underlying the law of mass action are not satisfied in the gene transcription process. However, simplified models might still be good proxies to characterize dynamical behaviors we are interested in. The real problem of interest is often to reproduce the dynamics of a system in terms of observable quantities, and to predict the system behavior for unseen manipulations. Parameters can be treated as latent variables which impact the dynamics of the system but cannot be observed. In this framework, the Bayesian formalism described here is well suited to tackle the problem of experimental design.

The natural continuation of this work is to adapt the method to treat larger problems. This raises computational issues and requires developing numerical methods that scale well with the size of the problem. Sampling strategies that adapt to the local geometry and to multimodal aspects of the posterior, such as those described in [55],[56], are interesting directions to investigate in this context. The main bottlenecks are the cost of simulating large dynamical systems, and the need for large sample sizes in higher dimensions for accurate posterior estimation. Posterior estimation in high dimensions is known to be hard and is an active subject of research. Although our Bayesian OED criterion is independent of the model investigated, it is likely that a good sampling strategy would benefit from specific tuning in order to perform well on specific problem instances. As for reducing the computational burden of simulating large dynamical systems, promising research directions are parameter estimation methods that do not involve dynamical system simulation, such as [57], or differential equation simulation methods that take into account both parameter uncertainty and numerical uncertainty, such as the probabilistic integrator of [58].

Availability of supporting data

An R package that allows our results and simulations to be reproduced is available at the following URL: https://cran.r-project.org/package=pauwels2014 .

Additional files

References

Kitano H: Computational systems biology. Nature 2002, 420:206-210.

Tyson JJ, Chen KC, Novak B: Sniffers, buzzers, toggles and blinkers: dynamics of regulatory and signaling pathways in the cell. Curr Opin Cell Biol. 2003, 15 (2): 221–231.

Wilkinson DJ: Stochastic modelling for quantitative description of heterogeneous biological systems. Nat Rev Genet. 2009, 10 (2): 122–133.

Barillot E, Calzone L, Vert J-P, Zynoviev A: Computational Systems Biology of Cancer. 2012, CRC Press, London

Chen KC, Csikasz-Nagy A, Gyorffy B, Val J, Novak B, Tyson JJ: Kinetic analysis of a molecular model of the budding yeast cell cycle. Mol Biol Cell. 2000, 11 (1): 369–391.

Hoffmann A, Levchenko A, Scott ML, Baltimore D: The IκB-NF-κB signaling module: temporal control and selective gene activation. Science. 2002, 298 (5596): 1241–1245.

Lipniacki T, Paszek P, Brasier AR, Luxon B, Kimmel M: Mathematical model of NF-κB regulatory module. J Theor Biol. 2004, 228 (2): 195–215.

Schoeberl B, Eichler-Jonsson C, Gilles ED, Müller G: Computational modeling of the dynamics of the MAP kinase cascade activated by surface and internalized EGF receptors. Nat Biotechnol. 2002, 20 (4): 370–375.

Fussenegger M, Bailey J, Varner J: A mathematical model of caspase function in apoptosis. Nat Biotechnol 2000, 18:768–774.

Jaqaman K, Danuser G: Linking data to models: data regression. Nat Rev Mol Cell Biol. 2006, 7 (11): 813–819.

Battogtokh D, Asch DK, Case ME, Arnold J, Schuttler H-B: An ensemble method for identifying regulatory circuits with special reference to the qa gene cluster of Neurospora crassa. Proc Natl Acad Sci U S A. 2002, 99 (26): 16904–16909.

Brown KS, Sethna JP: Statistical mechanical approaches to models with many poorly known parameters. Phys Rev E 2003, 68:021904.

Brown KS, Hill CC, Calero GA, Myers CR, Lee KH, Sethna JP, Cerione RA: The statistical mechanics of complex signaling networks: nerve growth factor signaling. Phys Biol. 2004, 1 (3–4): 184–195.

Waterfall JJ, Casey FP, Gutenkunst RN, Brown KS, Myers CR, Brouwer PW, Elser V, Sethna JP: Sloppy-model universality class and the Vandermonde matrix. Phys Rev Lett. 2006, 97 (15): 150601

Achard P, De Schutter E: Complex parameter landscape for a complex neuron model. PLoS Comput Biol. 2006, 2 (7): e94

Gutenkunst RN, Waterfall JJ, Casey FP, Brown KS, Myers CR, Sethna JP: Universally sloppy parameter sensitivities in systems biology models. PLoS Comput Biol. 2007, 3 (10): 1871–1878.

Piazza M, Feng X-J, Rabinowitz JD, Rabitz H: Diverse metabolic model parameters generate similar methionine cycle dynamics. J Theor Biol. 2008, 251 (4): 628–639.

Bellman R, Åström KJ: On structural identifiability. Math Biosci. 1970, 7 (3): 329–339.

Cobelli C, DiStefano JJ: Parameter and structural identifiability concepts and ambiguities: a critical review and analysis. Am J Physiol Regul Integr Comp Physiol. 1980, 239 (1): R7–R24.

Villaverde AF, Banga JR: Reverse engineering and identification in systems biology: strategies, perspectives and challenges. J R Soc Interface 2014, 11(91).

Mendes P, Kell D: Non-linear optimization of biochemical pathways: applications to metabolic engineering and parameter estimation. Bioinformatics. 1998, 14 (10): 869–883.

Moles CG, Mendes P, Banga JR: Parameter estimation in biochemical pathways: a comparison of global optimization methods. Genome Res. 2003, 13 (11): 2467–2474.

Fernández Slezak D, Suárez C, Cecchi GA, Marshall G, Stolovitzky G: When the optimal is not the best: parameter estimation in complex biological models. PLoS One. 2010, 5 (10): e13283

Kreutz C, Timmer J: Systems biology: experimental design. FEBS J. 2009, 276 (4): 923–942.

Cho K-H, Shin S-Y, Kolch W, Wolkenhauer O: Experimental design in systems biology, based on parameter sensitivity analysis using a Monte Carlo method: a case study for the TNFα-mediated NF-κB signal transduction pathway. Simulation. 2003, 79 (12): 726–739.

Feng X-J, Rabitz H: Optimal identification of biochemical reaction networks. Biophys J. 2004, 86 (3): 1270–1281.

Steiert B, Raue A, Timmer J, Kreutz C: Experimental design for parameter estimation of gene regulatory networks. PLoS One. 2012, 7 (7): e40052

Chaloner K, Verdinelli I: Bayesian experimental design: a review. Stat Sci. 1995, 10 (3): 273–304.

Lindley DV: On a measure of the information provided by an experiment. Ann Math Stat. 1956, 27 (4): 986–1005.

Bandara S, Schlöder JP, Eils R, Bock HG, Meyer T: Optimal experimental design for parameter estimation of a cell signaling model. PLoS Comput Biol. 2009, 5 (11): e1000558

Transtrum MK, Qiu P: Optimal experiment selection for parameter estimation in biological differential equation models. BMC Bioinformatics 2012, 13:181.

Faller D, Klingmüller U, Timmer J: Simulation methods for optimal experimental design in systems biology. Simulation 2003, 79:717–725.

Kutalik Z, Cho K-H, Wolkenhauer O: Optimal sampling time selection for parameter estimation in dynamic pathway modeling. Biosystems. 2004, 75 (1–3): 43–55.

Gadkar KG, Gunawan R, Doyle FJ 3rd: Iterative approach to model identification of biological networks. BMC Bioinformatics 2005, 6:155.

Balsa-Canto E, Alonso AA, Banga JR: Computational procedures for optimal experimental design in biological systems. IET Syst Biol. 2008, 2 (4): 163–172.

Hagen DR, White JK, Tidor B: Convergence in parameters and predictions using computational experimental design. Interface Focus. 2013, 3 (4).

Kramer A, Radde N: Towards experimental design using a Bayesian framework for parameter identification in dynamic intracellular network models. Procedia Comput Sci. 2010, 1 (1): 1645–1653.

Liepe J, Filippi S, Komorowski M, Stumpf MPH: Maximizing the information content of experiments in systems biology. PLoS Comput Biol. 2013, 9 (1): e1002888

Casey FP, Baird D, Feng Q, Gutenkunst RN, Waterfall JJ, Myers CR, Brown KS, Cerione RA, Sethna JP: Optimal experimental design in an epidermal growth factor receptor signalling and down-regulation model. IET Syst Biol. 2007, 1 (3): 190–202.

Weber P, Kramer A, Dingler C, Radde N: Trajectory-oriented Bayesian experiment design versus Fisher A-optimal design: an in depth comparison study. Bioinformatics. 2012, 28 (18): 535–541.

Vanlier J, Tiemann CA, Hilbers PAJ: A Bayesian approach to targeted experiment design. Bioinformatics. 2012, 28 (8): 1136–1142.

Kremling A, Fischer S, Gadkar K, Doyle FJ, Sauter T, Bullinger E, Allgöwer F, Gilles ED: A benchmark for methods in reverse engineering and model discrimination: problem formulation and solutions. Genome Res. 2004, 14 (9): 1773–1785.

Apgar JF, Toettcher JE, Endy D, White FM, Tidor B: Stimulus design for model selection and validation in cell signaling. PLoS Comput Biol. 2008, 4 (2): e30

Busetto AG, Hauser A, Krummenacher G, Sunnåker M, Dimopoulos S, Ong CS, Stelling J, Buhmann JM: Near-optimal experimental design for model selection in systems biology. Bioinformatics. 2013, 29 (20): 2625–2632.

Skanda D, Lebiedz D: An optimal experimental design approach to model discrimination in dynamic biochemical systems. Bioinformatics. 2010, 26 (7): 939–945.

Roy N, McCallum A: Toward optimal active learning through sampling estimation of error reduction. Proceedings of the Eighteenth International Conference on Machine Learning. 2001, Morgan Kaufmann Publishers Inc, San Francisco, 441–448.

Dialogue for Reverse Engineering Assessments and Methods (DREAM) website. [http://www.the-dream-project.org]. Accessed 2013-12-28.

DREAM6 Estimation of Model Parameters Challenge website. [http://www.the-dream-project.org/challenges/dream6-estimation-model-parameters-challenge]. Accessed 2013-12-28.

DREAM7 Estimation of Model Parameters Challenge website. [http://www.the-dream-project.org/challenges/network-topology-and-parameter-inference-challenge]. Accessed 2013-12-28.

Bogacki P, Shampine LF: A 3(2) pair of Runge-Kutta formulas. Appl Math Lett 1989, 2:321–325.

Soetaert K, Petzoldt T, Setzer RW: Solving differential equations in R: package deSolve. J Stat Softw. 2010, 33 (9): 1–25.

Andrieu C, De Freitas N, Doucet A, Jordan MI: An introduction to MCMC for machine learning. Mach Learn. 2003, 50 (1–2): 5–43.

Nocedal J, Wright S: Numerical Optimization. 2006, Springer, New York

Karr JR, Sanghvi JC, Macklin DN, Gutschow MV, Jacobs JM, Bolival B, Assad-Garcia N, Glass JI, Covert MW: A whole-cell computational model predicts phenotype from genotype. Cell. 2012, 150 (2): 389–401.

Girolami M, Calderhead B: Riemann manifold Langevin and Hamiltonian Monte Carlo methods. J Roy Stat Soc B Stat Meth. 2011, 73 (2): 123–214.

Calderhead B, Girolami M: Estimating Bayes factors via thermodynamic integration and population MCMC. Comput Stat Data Anal. 2009, 53 (12): 4028–4045.

Calderhead B, Girolami M, Lawrence ND: Accelerating Bayesian inference over nonlinear differential equations with Gaussian processes. Adv Neural Inform Process Syst. 2008, MIT Press, 217–224.

Chkrebtii O, Campbell DA, Girolami MA, Calderhead B: Bayesian uncertainty quantification for differential equations. 2013, Technical Report 1306.2365, arXiv.


Acknowledgements

The authors would like to thank Gautier Stoll for insightful discussions. This work was supported by the European Research Council (SMAC-ERC-280032). Most of this work was carried out during EP’s PhD at Mines ParisTech.

Author information

Authors and affiliations.

CNRS, LAAS, 7 Avenue du Colonel Roche, Toulouse, F-31400, France

Edouard Pauwels

Univ de Toulouse LAAS, Toulouse, F-31400, France

MINES ParisTech, PSL-Research University, CBIO-Centre for Computational Biology, 35 rue Saint-Honoré, Fontainebleau, 77300, France

Christian Lajaunie & Jean-Philippe Vert

Institut Curie, 26 rue d’Ulm, F-75248, Paris, France

INSERM U900, Paris, F-75248, France


Corresponding author

Correspondence to Edouard Pauwels.

Additional information

Competing interests.

The authors declare that they have no competing interests.

Authors' contributions

EP was responsible for the implementation and drafting the manuscript. CL and JPV supervised the work. All three authors took part in the design of the method and the writing of the manuscript. All authors read and approved the final manuscript.

Electronic supplementary material

12918_2014_102_moesm1_esm.pdf.

Additional file 1: Annex B. Supplementary details regarding the sampling strategy used in our numerical experiments. The note also contains diagnosis information and marginal distribution samples to illustrate the efficacy of the sampling strategy in the setting of this paper. (PDF 229 KB)

12918_2014_102_MOESM2_ESM.pdf

Additional file 2: Annex A. PDF file. Description of the DREAM7 challenge network represented in Figure 2 and experimental design setting. (PDF 102 KB)

Rights and permissions

This article is distributed under the terms of the Creative Commons Attribution 4.0 International License ( http://creativecommons.org/licenses/by/4.0/ ), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver ( http://creativecommons.org/publicdomain/zero/1.0/ ) applies to the data made available in this article, unless otherwise stated.


About this article

Cite this article.

Pauwels, E., Lajaunie, C. & Vert, JP. A Bayesian active learning strategy for sequential experimental design in systems biology. BMC Syst Biol 8, 102 (2014). https://doi.org/10.1186/s12918-014-0102-6


Received: 10 February 2014

Accepted: 14 August 2014

Published: 01 December 2014

DOI: https://doi.org/10.1186/s12918-014-0102-6


- Systems biology

- Kinetic parameter estimation

- Active learning

- Bayesian experimental design

BMC Systems Biology

ISSN: 1752-0509



Open Access

Peer-reviewed

Research Article

Model Selection in Systems Biology Depends on Experimental Design

Affiliation Centre for Integrative Systems Biology at Imperial College London, London, United Kingdom

* E-mail: [email protected]

- Daniel Silk,

- Paul D. W. Kirk,

- Chris P. Barnes,

- Tina Toni,

- Michael P. H. Stumpf

- Published: June 12, 2014

- https://doi.org/10.1371/journal.pcbi.1003650


Experimental design attempts to maximise the information available for modelling tasks. An optimal experiment allows the inferred models or parameters to be chosen with the highest expected degree of confidence. If the true system is faithfully reproduced by one of the models, the merit of this approach is clear: we simply wish to identify it and the true parameters with the most certainty. However, in the more realistic situation where all models are incorrect or incomplete, the interpretation of model selection outcomes and the role of experimental design need to be examined more carefully. Using a novel experimental design and model selection framework for stochastic state-space models, we perform high-throughput in-silico analyses on families of gene regulatory cascade models, to show that the selected model can depend on the experiment performed. We observe that experimental design thus makes confidence a criterion for model choice, but that this does not necessarily correlate with a model's predictive power or correctness. Finally, in the special case of linear ordinary differential equation (ODE) models, we explore how wrong a model has to be before it influences the conclusions of a model selection analysis.

Author Summary

Different models of the same process represent distinct hypotheses about reality. Model selection provides a framework for deciding between them, where the evidence for each model is given by its ability to reproduce a set of experimental data. Even if one of the models is correct, the chances of identifying it can be hindered by the quality of the data, both in terms of its signal to measurement error ratio and the intrinsic discriminatory potential of the experiment undertaken. This potential can be predicted in various ways, and maximising it is one aim of experimental design. In this work we present a computationally efficient method of experimental design for model selection. We exploit the efficiency to consider the implications of the realistic case where all models are more or less incorrect, showing that experiments can be chosen that, considered individually, lead to unequivocal support for opposed hypotheses.

Citation: Silk D, Kirk PDW, Barnes CP, Toni T, Stumpf MPH (2014) Model Selection in Systems Biology Depends on Experimental Design. PLoS Comput Biol 10(6): e1003650. https://doi.org/10.1371/journal.pcbi.1003650

Editor: Burkhard Rost, TUM, Germany

Received: May 30, 2013; Accepted: April 10, 2014; Published: June 12, 2014

Copyright: © 2014 Silk et al. This is an open-access article distributed under the terms of the Creative Commons Attribution License , which permits unrestricted use, distribution, and reproduction in any medium, provided the original author and source are credited.

Funding: This work was funded by the Biotechnology and Biological Science Research Council (www.bbsrc.ac.uk) grant BB/K003909/1 (to DS and MPHS), the Human Frontiers Science Programme (www.hfsp.org) grant RG0061/2011 (to PDWK and MPHS), and a Wellcome Trust-MIT fellowship (www.wellcome.ac.uk) to TT. The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

Competing interests: The authors have declared that no competing interests exist.

Introduction

Mathematical models provide a rich framework for biological investigation. Depending upon the questions posed, the relevant existing knowledge and alternative hypotheses may be combined and conveniently encoded, ready for analysis via a wealth of computational techniques. The consequences of each hypothesis can be understood through the model behaviour, and predictions made for experimental validation. Values may be inferred for unknown physical parameters and the actions of unobserved components can be predicted via model simulations. Furthermore, a well-designed modelling study allows conclusions to be probed for their sensitivity to uncertainties in any assumptions made, which themselves are necessarily made explicit.

While the added value of a working model is clear, how to create one is decidedly not. Choosing an appropriate formulation (e.g. mechanistic, phenomenological or empirical), identifying the important components to include (and those that may be safely ignored), and defining the laws of interaction between them remain highly challenging, and require a combination of experimentation, domain knowledge and, at times, a measure of luck. Even the most sophisticated models will still be subject to an unknown level of inaccuracy; how this affects the modelling process, and in particular experimental design for Bayesian inference, will be the focus of this study.

Both the time and financial cost of generating data, and a growing understanding of the data dependency of model and parameter identifiability [1], [2], have driven research into experimental design. In essence, experimental design seeks experiments that maximise the expected information content of the data with respect to some modelling task. Recent developments include the work of Liepe et al. [2], which builds upon existing methods [3]–[8] by utilising a sequential approximate Bayesian computation framework to choose the experiment that maximises the expected mutual information between prior and posterior parameter distributions. In so doing, they are able to optimally narrow the resulting posterior parameter or predictive distributions, incorporate preliminary experimental data and provide sensitivity and robustness analyses. In a markedly different approach, Apgar et al. [8] use control theoretic principles to distinguish between competing models; here the favoured model is that which is best able to inform a controller to drive the experimental system through a target trajectory.
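As a hedged illustration of what "maximising the expected information content" can mean, the sketch below uses a toy linear-Gaussian setting; it is not the approximate Bayesian computation scheme of Liepe et al. [2]. It assumes a single scalar parameter theta with a zero-mean Gaussian prior of variance prior_var, and one observation y = design * theta + noise with Gaussian noise of variance noise_var. Under those assumptions the mutual information between theta and y has the closed form 0.5 * log(1 + design^2 * prior_var / noise_var). The function names and the candidate designs are hypothetical.

```python
import math

def expected_information_gain(design, prior_var, noise_var):
    """Mutual information (in nats) between theta and y for the toy
    model y = design * theta + noise, with Gaussian prior and noise."""
    return 0.5 * math.log(1.0 + design ** 2 * prior_var / noise_var)

def best_design(designs, prior_var=1.0, noise_var=0.25):
    """Return the candidate design with the largest expected information gain."""
    return max(
        designs,
        key=lambda d: expected_information_gain(d, prior_var, noise_var),
    )

if __name__ == "__main__":
    # Hypothetical candidate experiments, e.g. different stimulus strengths.
    candidates = [0.1, 0.5, 2.0]
    for d in candidates:
        print(d, expected_information_gain(d, 1.0, 0.25))
    print("chosen design:", best_design(candidates))
```

In this toy case the gain grows monotonically with the magnitude of the design variable, so the choice is trivial; for nonlinear or stochastic models the expected gain generally has no closed form and has to be approximated.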


https://doi.org/10.1371/journal.pcbi.1003650.g001

The contributions of this article are threefold: firstly, we extend a promising and computationally efficient experimental design framework for model selection to the stochastic setting, with non-Gaussian prior distributions; secondly, we utilise this efficiency to explore the robustness of model selection outcomes to experimental choices; and finally, we observe that experimental design can give rise to levels of confidence in selected models that may be misleading as a guide to their predictive power or correctness. The latter two points are undertaken via high-throughput in-silico analyses (at a scale completely beyond the Monte Carlo based approaches mentioned above) on families of gene regulatory cascade models and various existing models of the JAK-STAT pathway.

Identifying crosstalk connections between signalling pathways

We first illustrate the experimental design and model selection framework in the context of crosstalk identification. After observing how the choice of experiment can be crucial for a positive model selection outcome, the example will be used to illustrate and explore the inconsistency of selection between misspecified models.
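The inconsistency of selection between misspecified models can be seen in a deliberately simple toy example, sketched here under strong assumptions and unrelated to the crosstalk models or the framework used in the article. The "true" system, the two candidate models, the measurement times and the noise level are all hypothetical. Both models have no free parameters, so the log Bayes factor reduces to a log-likelihood ratio, and the datum is taken to be the noise-free true value to keep the example deterministic.

```python
import math

def truth(t):
    """Hypothetical true system response at time t."""
    return math.sin(t)

def model_a(t):
    """Hypothetical misspecified model: linear growth."""
    return t

def model_b(t):
    """Hypothetical misspecified model: constant plateau."""
    return 0.9

def log_bayes_factor(t, sigma=0.1):
    """log p(y | A) - log p(y | B) for a single measurement at time t,
    with y = truth(t) and Gaussian measurement error of s.d. sigma.
    Positive values favour model A, negative values favour model B."""
    y = truth(t)
    err_a = y - model_a(t)
    err_b = y - model_b(t)
    return (err_b ** 2 - err_a ** 2) / (2.0 * sigma ** 2)

if __name__ == "__main__":
    for t in (0.2, 1.5):  # two hypothetical experiments (measurement times)
        lbf = log_bayes_factor(t)
        print(f"t = {t}: log Bayes factor = {lbf:.2f},",
              "favours A" if lbf > 0 else "favours B")
```

An early measurement strongly favours the linear model and a late one strongly favours the plateau model, even though neither model is correct; each experiment taken on its own would suggest confident support for a different hypothesis.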

https://doi.org/10.1371/journal.pcbi.1003650.g002

https://doi.org/10.1371/journal.pcbi.1003650.g003

https://doi.org/10.1371/journal.pcbi.1003650.g004

The robustness of model selection to choice of experiment