Assignment 4: Word Embeddings

Welcome to the fourth (and last) programming assignment of Course 2!

In this assignment, you will practice how to compute word embeddings and use them for sentiment analysis.

- To implement sentiment analysis, you can go beyond counting the number of positive words and negative words.

- You can find a way to represent each word numerically, by a vector.

- The vector could then represent syntactic (i.e. parts of speech) and semantic (i.e. meaning) structures.

In this assignment, you will explore a classic way of generating word embeddings or representations.

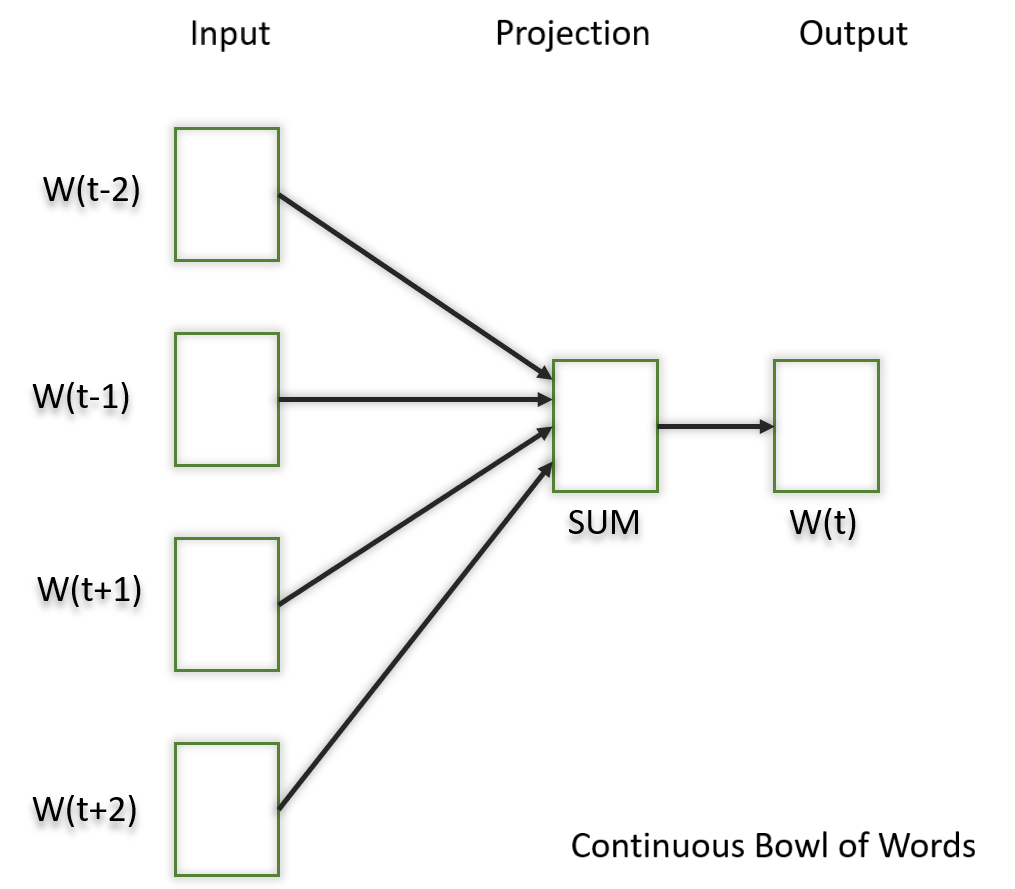

- You will implement a famous model called the continuous bag of words (CBOW) model.

By completing this assignment you will:

- Train word vectors from scratch.

- Learn how to create batches of data.

- Understand how backpropagation works.

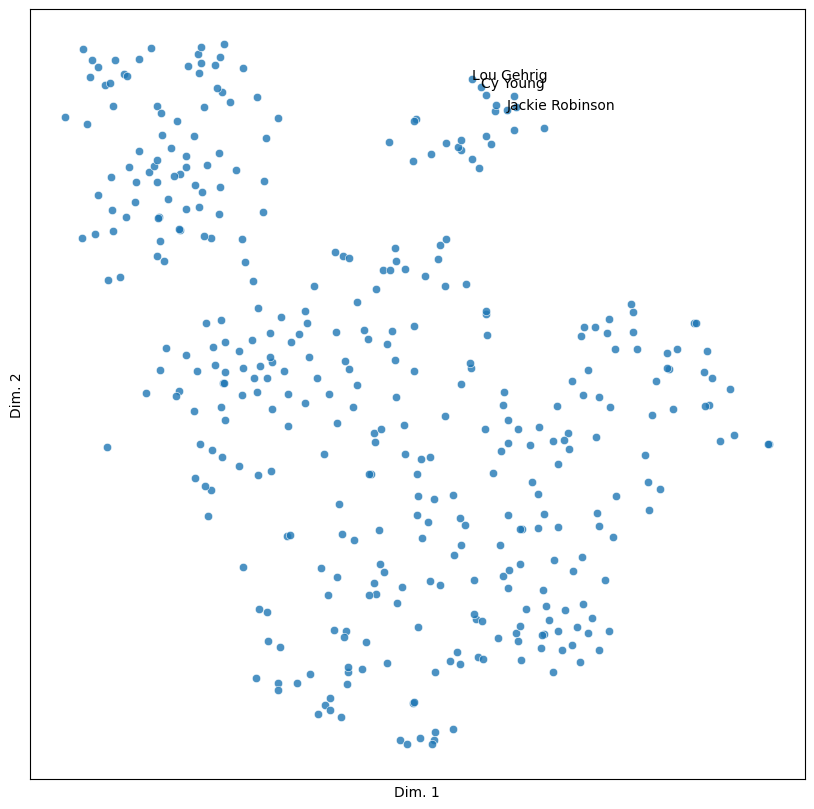

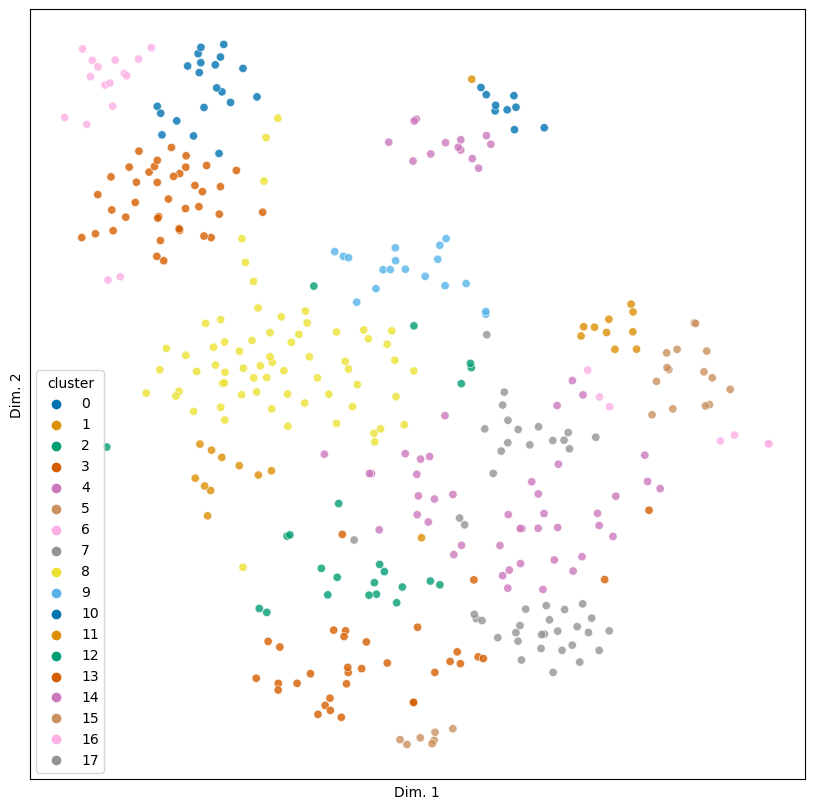

- Plot and visualize your learned word vectors.

Knowing how to train these models will give you a better understanding of word vectors, which are building blocks to many applications in natural language processing.

- 1 The Continuous bag of words model

- Exercise 01

- Exercise 02

- Exercise 03

- 2.3 Cost Function

- Exercise 04

- Exercise 05

- 3 Visualizing the word vectors

1. The Continuous bag of words model

Let's take a look at the following sentence:

'I am happy because I am learning'.

- In continuous bag of words (CBOW) modeling, we try to predict the center word given a few context words (the words around the center word).

- For example, if you were to choose a context half-size of, say, $C = 2$, then you would try to predict the word happy given the context that includes 2 words before and 2 words after the center word:

$C$ words before: [I, am]

$C$ words after: [because, I]

- In other words:

$$context = [I, am, because, I]$$

$$target = happy$$
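The context/target split for the example sentence can be sketched in a few lines of Python. This is a minimal illustration that assumes whitespace tokenization and skips center words that lack a full window on both sides. The helper name get_cbow_pairs is hypothetical and is not the assignment's starter code.

```python
def get_cbow_pairs(words, C):
    """Return (context, target) pairs with C words on each side of the target."""
    pairs = []
    # Only centers that have a full window of C words on both sides are used
    for i in range(C, len(words) - C):
        context = words[i - C:i] + words[i + 1:i + C + 1]
        pairs.append((context, words[i]))
    return pairs

sentence = "I am happy because I am learning".split()
for context, target in get_cbow_pairs(sentence, C=2):
    print(context, "->", target)
```

With C = 2, this yields three pairs. The first is (['I', 'am', 'because', 'I'], 'happy').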

The structure of your model will look like this:

where $\bar x$ is the average of all the one-hot vectors of the context words.

Once you have encoded all the context words, you can use $\bar x$ as the input to your model.

The architecture you will be implementing is as follows:

\begin{align}
h &= W_1 X + b_1 \tag{1} \\
a &= \mathrm{ReLU}(h) \tag{2} \\
z &= W_2 a + b_2 \tag{3} \\
\hat y &= \mathrm{softmax}(z) \tag{4}
\end{align}
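Equations (1) to (4) can be sketched as a NumPy forward pass. This is a minimal illustration with several assumptions: toy sizes V = 5 and N = 3, randomly initialized weights, and one column of X per example. The function names forward_prop and softmax are hypothetical, not the assignment's required signatures.

```python
import numpy as np

def softmax(z):
    # Subtract the column-wise max for numerical stability
    e = np.exp(z - z.max(axis=0, keepdims=True))
    return e / e.sum(axis=0, keepdims=True)

def forward_prop(x, W1, W2, b1, b2):
    """x: (V, m) matrix whose columns are averaged one-hot context vectors."""
    h = W1 @ x + b1          # (N, m), equation (1)
    a = np.maximum(0, h)     # ReLU, equation (2)
    z = W2 @ a + b2          # (V, m), equation (3)
    return softmax(z)        # equation (4): each column sums to 1

# Toy sizes (assumed): vocabulary V = 5, embedding size N = 3
rng = np.random.default_rng(0)
V, N = 5, 3
W1, b1 = rng.normal(size=(N, V)), np.zeros((N, 1))
W2, b2 = rng.normal(size=(V, N)), np.zeros((V, 1))

# Context word indices [0, 1, 3, 0] (e.g. [I, am, because, I]) averaged into x-bar
context_ids = [0, 1, 3, 0]
x_bar = np.eye(V)[:, context_ids].mean(axis=1, keepdims=True)   # (V, 1)
y_hat = forward_prop(x_bar, W1, W2, b1, b2)
print(y_hat.shape, y_hat.sum())
```

Because "I" appears twice in the context, its entry in x̄ is 0.5, while "am" and "because" get 0.25 each.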

Assignment #4 Solutions

Deep Learning / Spring 1398, Iran University of Science and Technology

Please pay attention to these notes:

- Assignment Due: 1398/03/17 23:59

- If you need any additional information, please review the assignment page on the course website.

- The items you need to answer are highlighted in red, and the coding parts you need to implement are marked by a banner comment reading "Put your implementation here", framed by lines of # characters.

- We encourage cooperation and discussion in groups for assignments. However, each student has to answer all the questions on their own. If our matching system detects any sort of copying, you will be held responsible for the consequences. So, if you have a teammate, please mention their name.

- Students who audit this course must submit their assignments like other students in order to remain eligible to attend the rest of the sessions.

- Any detected copying will set that assignment's grade to zero and will also count as two negative assignments toward your final score.

- When you are ready to submit, please follow the instructions at the end of this notebook.

- If you have any questions about this assignment, feel free to drop us a line. You may also post your questions on the course's forum page.

- You must run this notebook on the Google Colab platform; some of the libraries depend on the Google Colab VM.

- Before starting to work on the assignment, please fill in your name in the next section AND remember to RUN the cell.

Assignment Page: https://iust-deep-learning.github.io/972/assignments/04_nlp_intro

Course Forum: https://groups.google.com/forum/#!forum/dl972/

Fill your information here & run the cell

1. Word2vec

In any NLP task involving neural networks, we need a numerical representation of the input, which mostly consists of words. A naive solution is a huge one-hot vector with the same size as the vocabulary, where each element represents one word. However, this sparse representation makes poor use of a high-dimensional space, because it carries no useful information about the meaning or semantics of a word. This is where word embeddings come in handy.

1.1 What is word embedding?

Embeddings are another way of representing a vocabulary, in a continuous space of lower dimension than the one-hot representation. The goal is for words with similar meanings to have similar vectors, so that the elements of a vector actually carry some information about the word's meaning. How do we obtain such representations? The idea is simple but elegant: words appearing in the same contexts are likely to have similar meanings.

So how can we use this idea to learn word vectors?

1.2 How to train?

We are going to train a simple neural network with a single hidden layer to perform a certain task, but then we’re not actually going to use that neural network for the task we trained it on! Instead, the goal is actually just to learn the weights of the hidden layer and use this hidden layer as our word representation vector.

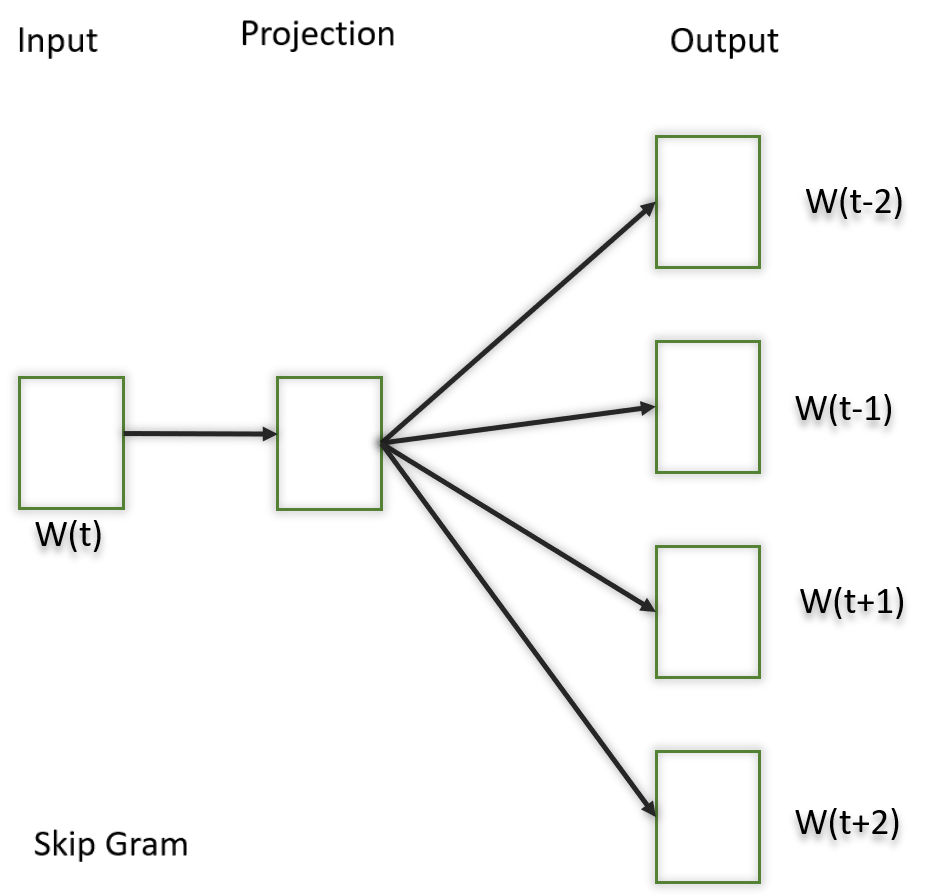

So let's talk about this "fake" task. We train the neural network to do the following: given a specific word (the input word), the network tells us the probability that each word in our vocabulary is near the given word, i.e. is one of its context words. So the network looks something like this (assuming a vocabulary size of 10000):

By training the network on this task, words that appear in similar contexts are forced to have similar hidden-layer values, because they have to produce similar outputs. So we can use these hidden-layer values as our word representations.
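For skip-gram, the training examples of this "fake" task are (input word, nearby word) pairs. The sketch below generates them with a fixed window size. The function name and the fixed window are assumptions for illustration; word2vec implementations may vary the window from position to position.

```python
def skipgram_pairs(words, window):
    """Return (center, context) pairs for every word within `window` positions."""
    pairs = []
    for i, center in enumerate(words):
        lo, hi = max(0, i - window), min(len(words), i + window + 1)
        for j in range(lo, hi):
            if j != i:  # a word is not its own context
                pairs.append((center, words[j]))
    return pairs

pairs = skipgram_pairs("the quick brown fox".split(), window=1)
print(pairs)
```

Each pair becomes one training example: the first word is the network input, and the second is the word whose output probability should go up.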

- This approach is called skip-gram. There is another, similar but slightly different approach called CBOW. Read about CBOW and explain its general idea:

$\color{red}{\text{Write your answer here}}$ CBOW is very similar to skip-gram; the difference lies in the task we train the model on. In skip-gram we ask the model for the context words given the center word, whereas in CBOW we ask the model for the center word given the context words. Skip-gram works well with a small amount of training data and represents even rare words or phrases well. CBOW, on the other hand, is several times faster to train than skip-gram and has slightly better accuracy for frequent words.

1.3 A practical challenge with softmax activation

Softmax is a very handy tool for predicting probability distributions, but it has downsides when the number of output nodes grows very large. Let's look at the softmax activation in our output layer:

$$\hat y_j = \frac{e^{z_j}}{\sum_{k=1}^{|V|} e^{z_k}}$$

As you can see, every single output depends on all the other outputs. So in order to compute the derivative with respect to any weight, all the other weights play a role. For an output size of 10000, this results in millions of operations for a single weight update, which is not practical at all.

- There are various techniques to solve this issue, such as hierarchical softmax or NCE (Noise Contrastive Estimation). The original Word2vec paper proposes a technique called negative sampling. Read about this technique and explain its general idea:

$\color{red}{\text{Write your answer here}}$ Recall that the desired output consists of a few 1 values (the context words) and many 0 values (all the other, irrelevant words). In other words, with each training sample we try to move the target word's embedding closer to the embeddings of its context words, while making it less similar to the embeddings of all irrelevant words. This is exactly the problem: using all irrelevant words is unnecessary and makes the softmax computation too heavy. Negative sampling addresses it by selecting only a handful of irrelevant words at random instead of all of them. The end result is that, for example, if cat appears in the context of food, then the vector of food becomes more similar to the vector of cat than to the vectors of several other randomly chosen words (e.g. democracy, greed, Freddy). It does not need to be more similar than all other words in the language. This makes word2vec much faster to train.
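The idea can be made concrete with the skip-gram negative-sampling objective $-\log\sigma(u_o^\top v_c) - \sum_{k=1}^{K}\log\sigma(-u_k^\top v_c)$. Here $v_c$ is the center word's input vector, $u_o$ is the true context word's output vector, and $u_k$ are the output vectors of the K negative samples. The NumPy sketch below is a minimal illustration with random toy vectors; the function name, shapes and chosen indices are assumptions. It shows the point made above: one update touches only K + 1 rows of the output matrix instead of all |V| rows.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def neg_sampling_loss(v_c, U, pos_id, neg_ids):
    """Loss and output-vector gradient for one (center, context) pair.

    v_c: (D,) input embedding of the center word
    U:   (V, D) output embeddings; only rows pos_id and neg_ids are used
    """
    u_o = U[pos_id]        # true context word
    u_neg = U[neg_ids]     # (K, D) randomly drawn "irrelevant" words
    # Pull the true context word closer, push the negative samples away
    loss = -np.log(sigmoid(u_o @ v_c)) - np.log(sigmoid(-u_neg @ v_c)).sum()
    grad_U = np.zeros_like(U)
    grad_U[pos_id] += (sigmoid(u_o @ v_c) - 1.0) * v_c
    # np.add.at accumulates correctly even if a negative id repeats
    np.add.at(grad_U, neg_ids, sigmoid(u_neg @ v_c)[:, None] * v_c)
    return loss, grad_U

rng = np.random.default_rng(0)
V, D = 10000, 50
U = rng.normal(scale=0.1, size=(V, D))
v_c = rng.normal(scale=0.1, size=D)
neg_ids = [7, 1234, 9000, 55, 300]
loss, grad_U = neg_sampling_loss(v_c, U, pos_id=42, neg_ids=neg_ids)
print(loss, np.count_nonzero(grad_U.any(axis=1)))   # 6 of 10000 rows touched
```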

- Explain why it is called negative sampling. What are these negative samples?

$\color{red}{\text{Write your answer here}}$ These randomly chosen irrelevant words are the negative samples. They are called negative because we try to push their embeddings away from the target word's embedding: they act as negative examples of context words, while the true context words are the positive examples.
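How the irrelevant words are picked matters. A common choice, used in the original word2vec implementation, is to sample negatives from the unigram distribution raised to the 3/4 power. This gives rare words a higher share than their raw frequency would. The sketch below assumes that choice and uses a made-up toy corpus. The helper names are hypothetical.

```python
import numpy as np
from collections import Counter

def noise_distribution(tokens, power=0.75):
    """Unigram counts raised to `power`, renormalized to a probability vector."""
    counts = Counter(tokens)
    vocab = sorted(counts)
    weights = np.array([counts[w] for w in vocab], dtype=float) ** power
    return vocab, weights / weights.sum()

def draw_negatives(vocab, probs, k, exclude, rng):
    """Draw k negative words, skipping the true context word `exclude`."""
    negatives = []
    while len(negatives) < k:
        w = vocab[rng.choice(len(vocab), p=probs)]
        if w != exclude:
            negatives.append(w)
    return negatives

tokens = "the cat ate the food and the dog ate the cat food".split()
vocab, probs = noise_distribution(tokens)
rng = np.random.default_rng(0)
negatives = draw_negatives(vocab, probs, k=3, exclude="food", rng=rng)
print(negatives)
```

"the" occurs 4 times as often as "and". After the 3/4 power, it is only about 2.83 times as likely to be drawn.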

1.4 Word2vec in code

There is a very good library called gensim for using word2vec in Python. You can train word vectors on your own corpora or use available pre-trained models. For example, the following model contains word vectors for a vocabulary of 3 million words and phrases. It was trained on roughly 100 billion words from a Google News dataset and uses a vector length of 300:

Let's load this model in Python:

As you can see it requires a huge amount of memory!

- Using the gensim library, find the 3 most similar words to each of the following target words with the similar_by_word method. Then get the embeddings of all these words and reduce their dimensionality to 2 with a dimensionality-reduction algorithm (e.g. t-SNE or PCA). Finally, plot the results in a 2D scatter plot:
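For the dimensionality-reduction step, PCA can be computed directly with NumPy's SVD. This is a minimal sketch. In the notebook, `embeddings` would hold the gensim vectors of the target words and their neighbours; here it is random stand-in data (an assumption). scikit-learn's `PCA` and `TSNE` classes are ready-made alternatives.

```python
import numpy as np

def pca_2d(X):
    """Project the rows of X (n_words, dim) onto their first two principal components."""
    Xc = X - X.mean(axis=0)                 # center each dimension
    # Rows of Vt are principal directions, sorted by decreasing variance
    _, _, Vt = np.linalg.svd(Xc, full_matrices=False)
    return Xc @ Vt[:2].T                    # (n_words, 2)

rng = np.random.default_rng(0)
embeddings = rng.normal(size=(12, 300))     # stand-in for 12 word vectors of size 300
coords = pca_2d(embeddings)
print(coords.shape)   # (12, 2)
# To plot: plt.scatter(coords[:, 0], coords[:, 1]) and plt.annotate each word
```

PCA is linear and deterministic, so nearby clusters stay interpretable. t-SNE often separates clusters more clearly, but it can distort global distances.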

You can find the cosine similarity between two word vectors using the similarity method:
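The `similarity` method scores two words by the cosine of the angle between their vectors, and `similar_by_word` ranks the vocabulary by that same score. The NumPy sketch below re-implements both ideas on a hypothetical five-word vocabulary with hand-made 3-dimensional vectors. It mirrors what the gensim methods compute, but it is not gensim's code.

```python
import numpy as np

def cosine_similarity(a, b):
    """cos(angle) between two vectors: 1 = same direction, 0 = orthogonal."""
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

def most_similar(word, vocab, vectors, topn=3):
    """Rank every other word in `vocab` by cosine similarity to `word`."""
    unit = vectors / np.linalg.norm(vectors, axis=1, keepdims=True)
    sims = unit @ unit[vocab.index(word)]
    ranked = [(vocab[i], float(sims[i])) for i in np.argsort(-sims) if vocab[i] != word]
    return ranked[:topn]

# Tiny hand-made 3-d vectors (illustrative values only)
vocab = ["cat", "dog", "mouse", "logitech", "keyboard"]
vectors = np.array([[0.9, 0.8, 0.1],
                    [0.8, 0.9, 0.0],
                    [0.7, 0.5, 0.6],
                    [0.1, 0.2, 0.9],
                    [0.0, 0.1, 1.0]])
print(cosine_similarity(vectors[0], vectors[1]))
print(most_similar("cat", vocab, vectors, topn=2))
```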

- As you can see, there is a meaningful similarity between the word logitech (a company that makes personal-computer and mobile peripherals) and the word cat, even though the two should not be this similar. Explain why you think this happens, and find more examples of this phenomenon.

$\color{red}{\text{Write your answer here}}$ This phenomenon is closely related to word sense disambiguation, an active research area. The problem occurs when a single spelling has several different meanings. In this case, the word mouse causes the problem. Since Logitech makes computer peripherals, it is likely to appear in the same contexts as the word mouse (meaning a computer input device). On the other hand, the words cat and mouse (meaning the animal) are also likely to appear in the same contexts. As a result, the embeddings of Logitech and cat end up close to each other because of the word mouse. More examples:

- It seems that words like criminal and offensive are more similar to the word black than to white. It has been claimed that the word2vec model trained on Google News suffers from gender, racial and religious biases. Explain why you think this happens, and find 4 more examples:

$\color{red}{\text{Write your answer here}}$ This happens because any bias in the articles that make up the word2vec training corpus is inevitably captured in the geometry of the vector space. The model only learns what is present in its training data, so a biased training set produces biased embeddings. For example, this can happen if news stories about dark-skinned people committing crimes receive disproportionate coverage.

Word vectors have some other cool properties. For example, we know that the relation between the meanings of the words "man" and "woman" is similar to the relation between "king" and "queen". So we expect $e_{queen} - e_{king} = e_{woman} - e_{man}$, or equivalently $e_{queen} = e_{king} + e_{woman} - e_{man}$.

- Show whether the above equation holds or not by following these steps:

- Extract the embedding vectors for these words.

- Subtract the vector of "man" from the vector of "woman" and add the vector of "king".

- Find the cosine similarity of the resulting vector with the vector for the word "queen"
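The three steps can be sketched in NumPy. The vectors below are hypothetical hand-made 3-dimensional values, chosen only to illustrate the arithmetic. With the real GoogleNews model you would read them from the loaded gensim vectors instead. gensim's `most_similar(positive=[...], negative=[...])` runs a similar search and excludes the input words from its results.

```python
import numpy as np

# Hypothetical 3-d vectors; dimensions loosely mean [royalty, femaleness, person]
emb = {
    "man":   np.array([0.1, 0.0, 1.0]),
    "woman": np.array([0.1, 1.0, 1.0]),
    "king":  np.array([1.0, 0.0, 1.0]),
    "queen": np.array([1.0, 0.9, 1.1]),
    "apple": np.array([0.0, 0.1, 0.0]),
}

def cosine(a, b):
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

# Step 2: woman - man + king
result = emb["woman"] - emb["man"] + emb["king"]
# Step 3: cosine with queen (and, for comparison, with every other word)
scores = {w: cosine(result, v) for w, v in emb.items()}
print(round(scores["queen"], 3))
print(max(scores, key=scores.get))
```

With real embeddings, the similarity is typically high but well below 1, and the nearest neighbour of the result is often one of the input words. That is why gensim excludes them from its results.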

2. Context representation using a window-based neural network

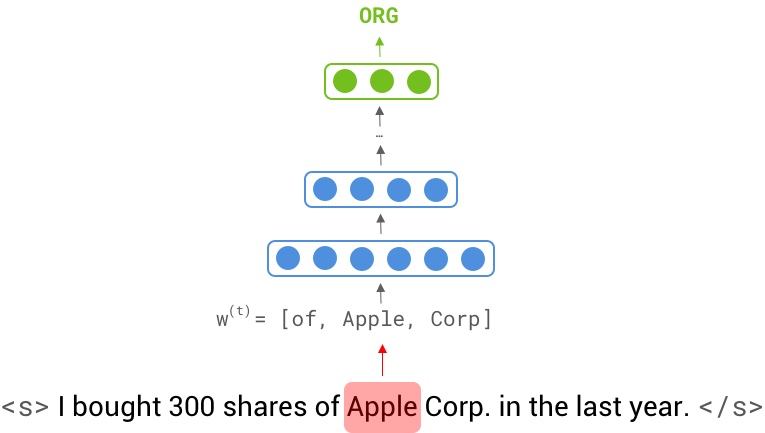

In the previous section, we saw that word vectors can store a lot of semantic information. But can we solve an NLP task just by feeding them through a simple neural network? Assume we want to find all named entities in a given sentence (a.k.a. Named Entity Recognition). For example, in "I bought 300 shares of Apple Corp. in the last year", we want to locate the word "Apple" and categorize it as an Organization entity.

Obviously, a neural network cannot guess the type entirely based on a single word. We need to provide an extra piece of information to help the decision. This piece of information is called "Context" . We can decide if the word Apple is referring to the company or fruit by seeing it in a sentence (context). However, feeding a complete sentence through a network is inefficient as it makes the input layer really big even for a 10-word sentence (10 * 300 = 3000, assuming an embedding size of 300).

To make training such a network feasible, we build the input from only the k neighboring words on each side of a token. Hence, Apple can easily be classified as a company by looking at the context window [the, apple, corporation].

In a window-based classifier, every input sentence $X = [\mathbf{x^{(1)}}, ... , \mathbf{x^{(T)}}]$ with a label sequence $Y = [\mathbf{y^{(1)}}, ..., \mathbf{y^{(T)}}]$ is split into $T$ <context window, center word label> data points. We create a context window $\mathbf{w^{(t)}}$ for every token $\mathbf{x^{(t)}}$ in the original sentence by concatenating its k surrounding neighbors: $\mathbf{w^{(t)}} = [\mathbf{x^{(t-k)}}; ...; \mathbf{x^{(t)}}; ...; \mathbf{x^{(t+k)}}]$, therefore our new data point is created as $\langle \mathbf{w^{(t)}} , \mathbf{y^{(t)}} \rangle$.
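Splitting a sentence into $\langle \mathbf{w^{(t)}}, \mathbf{y^{(t)}} \rangle$ data points can be sketched as follows. Tokens near the sentence boundaries have fewer than k neighbours, so this minimal version pads with a hypothetical `<PAD>` token. That padding scheme is an assumption; the notebook's own may differ. In the real model, each token in a window would then be replaced by its embedding (plus casing features), and the 2k+1 vectors would be concatenated.

```python
def make_windows(tokens, labels, k, pad="<PAD>"):
    """Turn one sentence into T (window, center label) pairs of 2k+1 tokens."""
    padded = [pad] * k + tokens + [pad] * k   # so edge tokens get full windows
    return [(padded[t:t + 2 * k + 1], labels[t]) for t in range(len(tokens))]

tokens = ["I", "bought", "shares", "of", "Apple", "Corp."]
labels = ["O", "O", "O", "O", "ORG", "ORG"]
for window, y in make_windows(tokens, labels, k=1):
    print(window, y)
```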

Word-case information might also help the neural network find named entities with higher confidence. To incorporate casing, every token $\mathbf{x^{(t)}}$ is augmented with a feature vector $\mathbf{c}$ representing such information: $\mathbf{x^{(t)}} = [\mathbf{e^{(t)}};\mathbf{c^{(t)}}]$ where $\mathbf{e^{(t)}}$ is the corresponding embedding.

In this section, we aim to build a window-based feedforward neural network for the NER task, and then analyze its limitations through a case study.

Let's import some dependencies.

And define the model's hyperparameters:

2.1 Preprocessing

As discussed earlier, we want to include word-casing information. Here is our function to encode the casing of a word as a d-dimensional vector. The words "Hello", "hello", "HELLO" and "hELLO" have four different casings, and your encoding should support all of them. In other words, the function must return 4 different vectors for these inputs. However, it should return the same output for "Bye" and "Hello", for "bye" and "hello", for "bYe" and "hEllo", and so on.
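One possible encoding is a one-hot vector over a small set of casing classes; this is an assumption, not the official solution. The sketch below uses d = 5: the four classes needed for the examples above, plus a fifth class for tokens without letters such as "300". The class names are hypothetical.

```python
import numpy as np

CASINGS = ["lower", "upper", "title", "mixed", "noalpha"]

def casing_category(word):
    """Map a token to one of the casing classes in CASINGS."""
    if not any(ch.isalpha() for ch in word):
        return "noalpha"          # numbers, punctuation, ...
    if word.islower():
        return "lower"            # hello
    if word.isupper():
        return "upper"            # HELLO
    if word[0].isupper() and word[1:].islower():
        return "title"            # Hello
    return "mixed"                # hELLO, bYe

def casing_vector(word):
    """One-hot casing feature c for a token (d = len(CASINGS))."""
    vec = np.zeros(len(CASINGS))
    vec[CASINGS.index(casing_category(word))] = 1.0
    return vec

print([casing_category(w) for w in ["Hello", "hello", "HELLO", "hELLO", "300"]])
```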

Describe two other features that would help the window-based model to perform better (apart from word casing).

$\color{red}{\text{Write your answer here}}$

- POS Tags (e.g. Verb, Adj)

- NER Tag of previous token

CoNLL-2003 [1] is a classic NER dataset. Each word has one of five tags: [PER, ORG, LOC, MISC, O], where the label O marks words that are not part of a named entity. We use this dataset to train our window-based model. Note that our split differs from the original one.

Download the GloVe word vectors and construct the pre-trained embedding matrix.
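Constructing the matrix can be sketched as follows. It rests on a few assumptions. The GloVe file uses its usual text format (a word followed by its values on each line) and its vectors are lowercased, as in glove.6B. Word indices start at 1, with row 0 reserved for padding. Out-of-vocabulary words get small random vectors; zeros are another common choice. The function names and toy data are hypothetical.

```python
import numpy as np

def parse_glove_lines(lines):
    """Parse GloVe's text format: one word per line followed by its vector values."""
    vectors = {}
    for line in lines:
        parts = line.rstrip().split(" ")
        vectors[parts[0]] = np.asarray(parts[1:], dtype=float)
    return vectors

def build_embedding_matrix(word_to_index, pretrained, dim, seed=0):
    """Row i holds the pre-trained vector of the word with index i."""
    rng = np.random.default_rng(seed)
    # Random init for words missing from GloVe; row 0 is the padding row
    matrix = rng.normal(scale=0.1, size=(len(word_to_index) + 1, dim))
    matrix[0] = 0.0
    hits = 0
    for word, idx in word_to_index.items():
        vec = pretrained.get(word.lower())   # assumes lowercased vectors
        if vec is not None:
            matrix[idx] = vec
            hits += 1
    return matrix, hits

glove = parse_glove_lines(["apple 1.0 1.0 1.0 1.0", "corp. 0.5 0.5 0.5 0.5"])
word_to_index = {"Apple": 1, "Corp.": 2, "Xyzzy": 3}
matrix, hits = build_embedding_matrix(word_to_index, glove, dim=4)
print(matrix.shape, hits)   # (4, 4) 2
```

The resulting matrix can then initialize the model's embedding layer, frozen or fine-tuned.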

2.2 Implementation

Let's build the model. We recommend the Keras functional API. The number of layers and their dimensions are totally up to you.

2.3 Training

2.4 Analysis

Now it's time to analyze the model's behavior. Here is an interactive shell that lets us explore the model's limitations and capabilities. Note that sentences should be entered with spaces between tokens, and use "do n't" instead of "don't".

To further understand and analyze mistakes made by the model, let's see the confusion matrix:

Describe the window-based network's modeling limitations by exploring its outputs. You need to support your conclusion by showing us the errors your model makes. You can either use validation set samples or a manually entered sentence to force the model to make an error. Remember to copy and paste the input/output from the interactive shell here.

- The model knows nothing about the tag predicted for the previous word. Thus it is unable to correctly label multi-word named entities.

- The model cannot look at other parts of the sentence.

- The model cannot look at future words beyond its window. x: New York State University, y': LOC ORG ORG ORG

3. BOW Sentence Representation

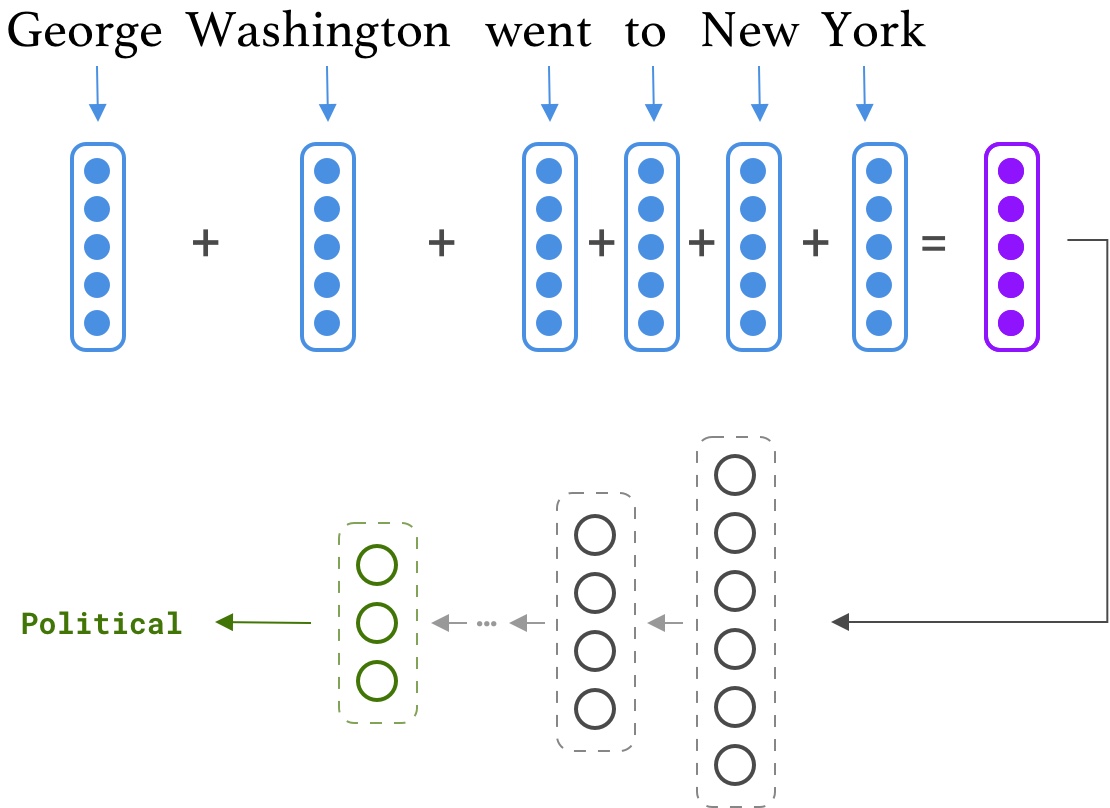

We have shown that arithmetic relations are present in the embedding space, for example $e_{queen} = e_{king} + e_{woman} - e_{man}$. But are they strong enough to build a rich representation of a sentence? Can we classify a sentence according to the mean of its words' embeddings? In this section, we will find the answers to these questions.

Assume a sentence $X = [\mathbf{x^{(1)}}, ..., \mathbf{x^{(N)}}]$ is given. Then a sentence representation $\mathbf{R}$ can be calculated as the mean of its token embeddings:

$$\mathbf{R} = \frac{1}{N} \sum_{i=1}^{N} e_{x^{(i)}}$$

where $e_{x^{(i)}}$ is the embedding vector of the token $x^{(i)}$.
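The mean representation takes only a few lines of NumPy. The sketch below uses hypothetical random 8-dimensional embeddings and simply skips out-of-vocabulary tokens; that skipping is an assumption. It also demonstrates the bag-of-words property: shuffling the words does not change R.

```python
import numpy as np

def bow_representation(tokens, emb):
    """R = average of the embeddings of the tokens found in `emb`."""
    vectors = [emb[t] for t in tokens if t in emb]
    return np.mean(vectors, axis=0)

rng = np.random.default_rng(0)
vocab = ["the", "movie", "was", "not", "good", "bad"]
emb = {w: rng.normal(size=8) for w in vocab}   # toy random embeddings

r1 = bow_representation("the movie was not good".split(), emb)
r2 = bow_representation("good was the movie not".split(), emb)   # same words, shuffled
print(np.allclose(r1, r2))   # True: word order is lost
```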

A model this simple is easier to analyze, which helps us understand its capabilities. In addition, we will try one of the state-of-the-art text processing tools, Flair, which can run on GPUs. The task is text classification on the AG News corpus, which consists of news articles from more than 2000 news sources. Our split has 110K training samples and 10K validation samples. Each example is labeled with one of 4 major classes: {World, Sports, Business, Sci/Tech}

3.1 Preprocessing

Datasets in NLP often come with unprocessed sentences. As a deep learning expert, you should be familiar with popular text processing tools such as NLTK, spaCy, Stanford CoreNLP, and Flair. Generally, text pre-processing in deep learning includes tokenization, vocabulary creation, and padding. Here, however, we add one more step: NER replacement. Basically, we replace named entities with their corresponding tags. For example, "George Washington went to New York" will be converted to "<PER> went to <LOC>".
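The replacement itself is independent of the tagger. Assume the entity spans have already been obtained (in the notebook, from Flair's NER tagger). The replacement can then be sketched as below. The (start, end, tag) tuple format with an exclusive end index is a hypothetical stand-in, not Flair's API.

```python
def replace_entities(tokens, spans):
    """Replace each entity span (start, end, tag) with a single <TAG> token."""
    out, i = [], 0
    for start, end, tag in sorted(spans):
        out.extend(tokens[i:start])   # copy the words before the entity
        out.append(f"<{tag}>")        # collapse the whole entity into one token
        i = end
    out.extend(tokens[i:])
    return out

tokens = "George Washington went to New York".split()
spans = [(0, 2, "PER"), (4, 6, "LOC")]
print(" ".join(replace_entities(tokens, spans)))   # <PER> went to <LOC>
```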

The purpose of this step is to reduce the vocabulary size while still covering more words. This strategy is expected to be most beneficial when the dataset contains a large number of named entities, e.g. a news dataset.

Most pre-processing parts are implemented for you. You only need to fill in the following function. Be sure to read the Flair documentation first.

Test your implementation:

Define the model's hyperparameters

Process the entire corpus. This takes approximately 50 minutes, so please be patient. You may want to move on to the next sections in the meantime.

Create the embedding matrix

3.2 Implementation

Let's build the model. As always, the Keras functional API is recommended. The number of layers and their dimensionality are totally up to you.

3.3 Training

3.4 Analysis

As in the previous section, an interactive shell is provided. You can enter an input sequence to get the predicted label. The preprocessing functions handle tokenization, so don't worry about spacing.

It is always helpful to see the confusion matrix:

Obviously, this is a relatively simple model, so its modeling capabilities are limited. Now it's time to find its mistakes. Can you fool the model by feeding it a toxic example? Can you see the bag-of-words effect in its behavior? Write down the model's limitations and your answers to the above questions. Keep in mind that you need to support each of your claims with an input/output example.

Here are some findings from our students:

Below we see the effect of BoW. The correct label seems to be Business, but the model makes a mistake because it ignores word order and the relations between words.

Credits: Mohammad hasan Shamgholi

4. RNN Intuition

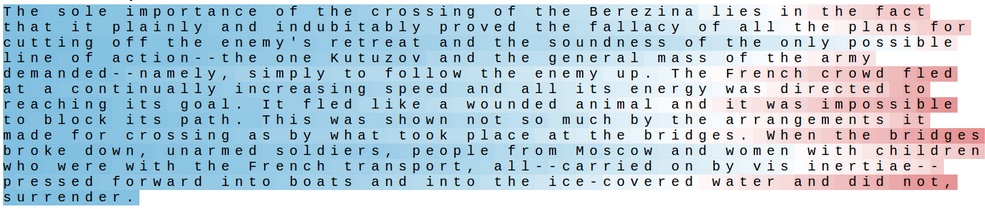

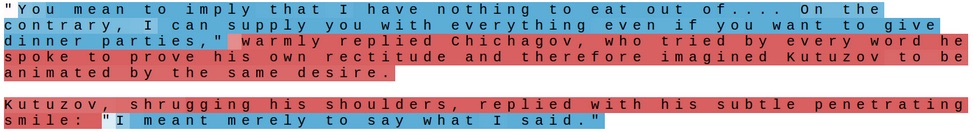

Up to now, we've investigated window-based neural networks and the bag-of-words model. Given their simple architectures, the representational power of these models relies mainly on the pre-trained embeddings. For example, a window-based model cannot see the previous token's label, which makes it struggle to identify multi-word entities. Likewise, adding the single word "not" can entirely change the meaning of a sentence, yet the BoW model is barely sensitive to it. This is because BoW ignores word order and computes the average embedding, in which a single word does not play a big role.

In contrast, RNNs read sentences word by word. At each step, the softmax classifier is forced to predict the label using not only the input word but also its context information. If we view the context information as a working memory for the RNN, it is interesting to find out what kind of information is stored in it while the network parses a sentence.
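To make the notion of a context "memory" concrete, the sketch below runs a vanilla tanh RNN over a random toy sequence and records the hidden state after every step. This is a simpler cell than the LSTMs that LSTMVis visualizes. Each column of the resulting matrix is one neuron's activation trace, which is the kind of signal the neuron visualizations in this section plot. The sizes, weights and function name are assumptions.

```python
import numpy as np

def rnn_hidden_states(inputs, W_xh, W_hh, b_h):
    """Run a vanilla RNN over a sequence and keep every hidden state.

    inputs: (T, input_dim). Returns (T, hidden_dim); row t is the memory
    after reading token t.
    """
    h = np.zeros(W_hh.shape[0])
    states = []
    for x_t in inputs:
        # New memory mixes the current input with the previous memory
        h = np.tanh(W_xh @ x_t + W_hh @ h + b_h)
        states.append(h)
    return np.stack(states)

rng = np.random.default_rng(0)
T, input_dim, hidden_dim = 6, 4, 3
states = rnn_hidden_states(rng.normal(size=(T, input_dim)),
                           rng.normal(size=(hidden_dim, input_dim)),
                           rng.normal(size=(hidden_dim, hidden_dim)),
                           np.zeros(hidden_dim))
print(states.shape)   # (6, 3): one activation per neuron per time step
print(states[:, 0])   # trace of neuron 0 over the sequence
```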

To visualize an RNN's memory, we will train a language model on a huge chunk of text and use the validation set to analyze its "brain". Then we will watch each context neuron's activation to see whether it shows a meaningful pattern as the model goes through a sentence. The following figure shows a random neuron in the memory that captures the concept of line length: it gradually turns off as it reaches the end of the line. Our model probably uses this neuron to handle "\n" generation.

Here is another neuron, which activates when the text is inside a quote.

Here, our goal is to find other meaningful patterns in the RNN hidden states. There is an open-source library called LSTMVis, which provides pre-trained models and a great visualization tool. First, watch its tutorial, and then answer the following questions:

For each model, find at least two meaningful patterns, and support your hypothesis with screenshots of LSTMVis.

1- Character Model (Wall Street Journal)

Here are some patterns found by our students.

- A neuron which activates on spaces (Credits: Mohsen Tabasi)

- A neuron which is activated after seeing "a" and deactivated after reading "of"! (Credits: Mohsen Tabasi)

- A combination of neurons which activate on plural nouns ending in "s"

2- Word Model (Wall Street Journal)

- A pattern that appears when the model is referring to some kind of proportion

- A set of neurons which get triggered on pronouns

3- Can you spot the difference between a character-based and a word-based language model?

Char-based models first have to learn the concept of a word, and only then can they go for much more complex patterns such as gender and grammar. However, because char-based models parse the sentence one character at a time, they can find patterns inside words. That is, they can identify frequent character n-grams, which can help them guess the meaning of unknown words.

References

- [1] Erik F. Tjong Kim Sang and Fien De Meulder. 2003. Introduction to the CoNLL-2003 Shared Task: Language-Independent Named Entity Recognition. In Proceedings of the Seventh Conference on Natural Language Learning at HLT-NAACL 2003.

- [2] Xiang Zhang, Junbo Zhao, and Yann LeCun. 2015. Character-level Convolutional Networks for Text Classification. NIPS 2015.

- [3] Stanford CS224d: Deep Learning for Natural Language Processing (course).

- [4] Andrej Karpathy. 2015. The Unreasonable Effectiveness of Recurrent Neural Networks. http://karpathy.github.io/2015/05/21/rnn-effectiveness/ (accessed May 26, 2019).


Word Embeddings: Encoding Lexical Semantics

Word embeddings are dense vectors of real numbers, one per word in your vocabulary. In NLP, it is almost always the case that your features are words! But how should you represent a word in a computer? You could store its ASCII character representation, but that only tells you what the word is; it doesn’t say much about what it means (you might be able to derive its part of speech from its affixes, or properties from its capitalization, but not much). Even more, in what sense could you combine these representations? We often want dense outputs from our neural networks, where the inputs are \(|V|\) dimensional, where \(V\) is our vocabulary, but often the outputs are only a few dimensional (if we are only predicting a handful of labels, for instance). How do we get from a massive dimensional space to a smaller dimensional space?

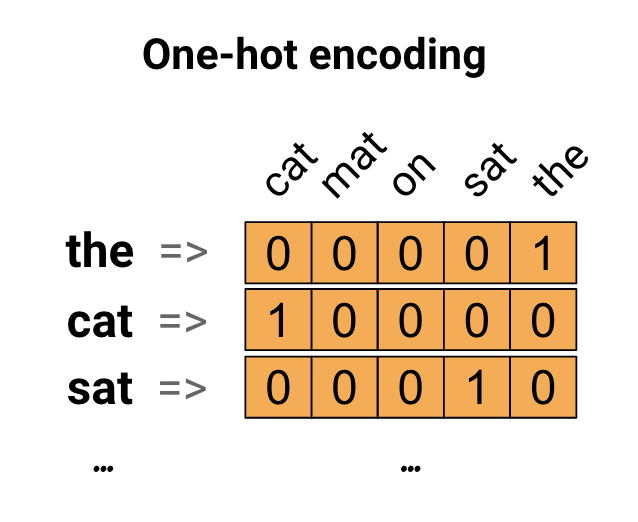

How about instead of ASCII representations, we use a one-hot encoding? That is, we represent the word \(w\) by

\[\overbrace{\left[ 0, 0, \dots, 1, \dots, 0, 0 \right]}^\text{|V| elements}\]

where the 1 is in a location unique to \(w\). Any other word will have a 1 in some other location, and a 0 everywhere else.

There is an enormous drawback to this representation, besides just how huge it is. It basically treats all words as independent entities with no relation to each other. What we really want is some notion of similarity between words. Why? Let’s see an example.

Suppose we are building a language model. Suppose we have seen the sentences

The mathematician ran to the store.

The physicist ran to the store.

The mathematician solved the open problem.

in our training data. Now suppose we get a new sentence never before seen in our training data:

The physicist solved the open problem.

Our language model might do OK on this sentence, but wouldn’t it be much better if we could use the following two facts:

We have seen mathematician and physicist in the same role in a sentence. Somehow they have a semantic relation.

We have seen mathematician in the same role in this new unseen sentence as we are now seeing physicist.

and then infer that physicist is actually a good fit in the new unseen sentence? This is what we mean by a notion of similarity: we mean semantic similarity, not simply having similar orthographic representations. It is a technique to combat the sparsity of linguistic data, by connecting the dots between what we have seen and what we haven’t. This example of course relies on a fundamental linguistic assumption: that words appearing in similar contexts are related to each other semantically. This is called the distributional hypothesis.

Getting Dense Word Embeddings

How can we solve this problem? That is, how could we actually encode semantic similarity in words? Maybe we think up some semantic attributes. For example, we see that both mathematicians and physicists can run, so maybe we give these words a high score for the “is able to run” semantic attribute. Think of some other attributes, and imagine what you might score some common words on those attributes.

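If each attribute is a dimension, then we might give each word a vector whose entries are its scores on those attributes, for example \(q_\text{mathematician}\) and \(q_\text{physicist}\).

Then we can get a measure of similarity between these words by taking their dot product:

\[\text{Similarity}(\text{physicist}, \text{mathematician}) = q_\text{physicist} \cdot q_\text{mathematician}\]

Although it is more common to normalize by the lengths:

\[\text{Similarity}(\text{physicist}, \text{mathematician}) = \frac{q_\text{physicist} \cdot q_\text{mathematician}}{\| q_\text{physicist} \| \| q_\text{mathematician} \|} = \cos (\phi)\]

where \(\phi\) is the angle between the two vectors. That way, extremely similar words (words whose embeddings point in the same direction) will have similarity 1. Extremely dissimilar words should have similarity -1.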

You can think of the sparse one-hot vectors from the beginning of this section as a special case of these new vectors we have defined, where each word basically has similarity 0, and we gave each word some unique semantic attribute. These new vectors are dense, which is to say their entries are (typically) non-zero.
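The contrast between the two kinds of vectors can be checked numerically. In the sketch below, the attribute names and scores are made up for illustration and are not the tutorial's values. The one-hot pair comes out at a similarity of exactly 0, while the hand-crafted dense vectors give graded scores.

```python
import numpy as np

def similarity(a, b):
    """Cosine similarity: 1 for the same direction, -1 for opposite directions."""
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

vocab = ["mathematician", "physicist", "store"]

# One-hot: every word gets its own dimension, so distinct words are orthogonal
one_hot = np.eye(len(vocab))
print(similarity(one_hot[0], one_hot[1]))   # 0.0

# Dense, hand-crafted attribute scores (made-up values):
# [can run, likes coffee, majored in physics]
dense = {
    "mathematician": np.array([2.0, 9.0, -5.0]),
    "physicist":     np.array([2.5, 9.0, 6.0]),
    "store":         np.array([-8.0, 0.5, 0.0]),
}
print(similarity(dense["mathematician"], dense["physicist"]))
print(similarity(dense["mathematician"], dense["store"]))
```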

But these new vectors are a big pain: you could think of thousands of different semantic attributes that might be relevant to determining similarity, and how on earth would you set the values of the different attributes? Central to the idea of deep learning is that the neural network learns representations of the features, rather than requiring the programmer to design them herself. So why not just let the word embeddings be parameters in our model, and then be updated during training? This is exactly what we will do. We will have some latent semantic attributes that the network can, in principle, learn. Note that the word embeddings will probably not be interpretable. That is, although with our hand-crafted vectors above we can see that mathematicians and physicists are similar in that they both like coffee, if we allow a neural network to learn the embeddings and see that both mathematicians and physicists have a large value in the second dimension, it is not clear what that means. They are similar in some latent semantic dimension, but this probably has no interpretation to us.

In summary, word embeddings are a representation of the *semantics* of a word, efficiently encoding semantic information that might be relevant to the task at hand. You can embed other things too: part of speech tags, parse trees, anything! The idea of feature embeddings is central to the field.

Word Embeddings in PyTorch

Before we get to a worked example and an exercise, a few quick notes about how to use embeddings in PyTorch and in deep learning programming in general. Similar to how we defined a unique index for each word when making one-hot vectors, we also need to define an index for each word when using embeddings. These will be keys into a lookup table. That is, embeddings are stored as a \(|V| \times D\) matrix, where \(D\) is the dimensionality of the embeddings, such that the word assigned index \(i\) has its embedding stored in the \(i\)’th row of the matrix. In all of my code, the mapping from words to indices is a dictionary named word_to_ix.

The module that allows you to use embeddings is torch.nn.Embedding, which takes two arguments: the vocabulary size, and the dimensionality of the embeddings.

To index into this table, you must use torch.LongTensor (since the indices are integers, not floats).
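An integer index is all the lookup needs. The NumPy sketch below shows that selecting row i of a |V| × D matrix gives the same result as multiplying a one-hot vector by that matrix, without the wasted multiplications by zero. It uses a toy random matrix rather than `torch.nn.Embedding`. In PyTorch, the equivalent would be an `nn.Embedding(len(word_to_ix), 5)` module called on `torch.tensor([word_to_ix["hello"]], dtype=torch.long)`.

```python
import numpy as np

word_to_ix = {"hello": 0, "world": 1}
V, D = len(word_to_ix), 5

rng = np.random.default_rng(0)
embedding_matrix = rng.normal(size=(V, D))   # |V| x D lookup table

ix = word_to_ix["hello"]
# Looking up row i ...
looked_up = embedding_matrix[ix]
# ... is the same as multiplying the one-hot vector by the matrix
one_hot = np.zeros(V)
one_hot[ix] = 1.0
print(np.allclose(looked_up, one_hot @ embedding_matrix))   # True
```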

An Example: N-Gram Language Modeling

Recall that in an n-gram language model, given a sequence of words \(w\), we want to compute

\[P(w_i | w_{i-1}, w_{i-2}, \dots, w_{i-n+1})\]

where \(w_i\) is the ith word of the sequence.
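The tutorial learns this conditional probability with a neural network. For comparison, the classical count-based (maximum-likelihood) estimate of the same quantity can be sketched in a few lines. This is a different technique from the tutorial's model and is shown only to make the formula concrete. The toy text is made up, and no smoothing is applied.

```python
from collections import Counter

def ngram_prob(tokens, n):
    """Maximum-likelihood P(w_i | previous n-1 words) from raw counts."""
    starts = range(len(tokens) - n + 1)
    ngrams = Counter(tuple(tokens[i:i + n]) for i in starts)
    contexts = Counter(tuple(tokens[i:i + n - 1]) for i in starts)

    def prob(context, word):
        context = tuple(context)
        if contexts[context] == 0:
            return 0.0        # unseen context: no estimate without smoothing
        return ngrams[context + (word,)] / contexts[context]
    return prob

text = "the mathematician ran to the store the physicist ran to the lab".split()
p = ngram_prob(text, n=3)
print(p(["ran", "to"], "the"))     # 1.0
print(p(["to", "the"], "store"))   # 0.5
```

Counts give no information about unseen contexts. Learned embeddings help here, because similar words share statistical strength.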

In this example, we will compute the loss function on some training examples and update the parameters with backpropagation.

Exercise: Computing Word Embeddings: Continuous Bag-of-Words ¶

The Continuous Bag-of-Words model (CBOW) is frequently used in NLP deep learning. It is a model that tries to predict words given the context of a few words before and a few words after the target word. This is distinct from language modeling, since CBOW is not sequential and does not have to be probabilistic. Typically, CBOW is used to quickly train word embeddings, and these embeddings are used to initialize the embeddings of some more complicated model. Usually, this is referred to as pretraining embeddings . It almost always helps performance a couple of percent.

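The CBOW model is as follows. Given a target word \(w_i\) and a context window of \(N\) words on each side, \(w_{i-1}, \dots, w_{i-N}\) and \(w_{i+1}, \dots, w_{i+N}\), and referring to all context words collectively as \(C\), CBOW tries to minimize

\[ -\log p(w_i \mid C) = -\log \text{Softmax}\left(A\left(\sum_{w \in C} q_w\right) + b\right) \]

where \(q_w\) is the embedding for word \(w\), and \(A\) and \(b\) are the weight matrix and bias of a linear layer.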

Implement this model in PyTorch by filling in the class below. Some tips:

- Think about which parameters you need to define.

- Make sure you know what shape each operation expects. Use .view() if you need to reshape.
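One possible way to fill in the class is sketched below; it is not the only solution. It works in three steps:

- It sums the context embeddings.
- A single nn.Linear layer maps that sum to one score per vocabulary word.
- log_softmax turns the scores into log-probabilities, so the negative log-likelihood loss equals \(-\log p(w_i \mid C)\).

The class name, the toy text and the embedding size are illustrative.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(1)


class CBOW(nn.Module):
    def __init__(self, vocab_size, embedding_dim):
        super().__init__()
        self.embeddings = nn.Embedding(vocab_size, embedding_dim)  # one vector q_w per word
        self.linear = nn.Linear(embedding_dim, vocab_size)         # weight matrix and bias

    def forward(self, context_idxs):
        # Sum the context embeddings, then reshape to a (1, D) batch of one.
        summed = self.embeddings(context_idxs).sum(dim=0).view(1, -1)
        return F.log_softmax(self.linear(summed), dim=1)


# Toy usage: a window of 2 words on each side gives 4 context indices.
raw_text = "we are about to study the idea of a computational process".split()
vocab = sorted(set(raw_text))
word_to_ix = {w: i for i, w in enumerate(vocab)}

model = CBOW(len(vocab), embedding_dim=10)
context = ["we", "are", "to", "study"]  # the words around the target "about"
context_idxs = torch.tensor([word_to_ix[w] for w in context], dtype=torch.long)
log_probs = model(context_idxs)
loss = F.nll_loss(log_probs, torch.tensor([word_to_ix["about"]]))  # -log p(about | C)
print(log_probs.shape, loss.item())
```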


Assignment 4: Word Embeddings

Welcome to the fourth (and last) programming assignment of Course 2!

In this assignment, you will practice how to compute word embeddings and use them for sentiment analysis.

- To implement sentiment analysis, you can go beyond counting the number of positive words and negative words.

- You can find a way to represent each word numerically, by a vector.

- The vector could then represent syntactic (i.e. parts of speech) and semantic (i.e. meaning) structures.

In this assignment, you will explore a classic way of generating word embeddings or representations.

- You will implement a famous model called the continuous bag of words (CBOW) model.

By completing this assignment you will:

- Train word vectors from scratch.

- Learn how to create batches of data.

- Understand how backpropagation works.

- Plot and visualize your learned word vectors.

Knowing how to train these models will give you a better understanding of word vectors, which are building blocks to many applications in natural language processing.

1 The Continuous bag of words model

2 Training the Model

2.0 Initialize the model

Exercise 01

2.1 Softmax Function

Exercise 02

2.2 Forward Propagation

Exercise 03

2.3 Cost Function

2.4 Backpropagation

Exercise 04

2.5 Gradient Descent

Exercise 05

3 Visualizing the word vectors

1. The Continuous bag of words model

Let's take a look at the following sentence:

'I am happy because I am learning'.

In continuous bag of words (CBOW) modeling, we try to predict the center word given a few context words (the words around the center word).

For example, if you were to choose a context half-size of, say, \(C = 2\), then you would try to predict the word happy given the context that includes 2 words before and 2 words after the center word:

\(C\) words before: [I, am]

\(C\) words after: [because, I]

In other words:

context = [I, am, because, I]

target = happy
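The sliding window above can be sketched as a small generator. It assumes the sentence has already been tokenized and lowercased. get_windows is a hypothetical name, not one of the assignment's helpers.

```python
def get_windows(words, C):
    """Yield (context_words, center_word) pairs for a context half-size C."""
    for i in range(C, len(words) - C):
        center = words[i]
        context = words[i - C:i] + words[i + 1:i + C + 1]  # C before, C after
        yield context, center


tokens = "I am happy because I am learning".lower().split()
for context, center in get_windows(tokens, 2):
    print(context, center)
# ['i', 'am', 'because', 'i'] happy
# ['am', 'happy', 'i', 'am'] because
# ['happy', 'because', 'am', 'learning'] i
```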

The structure of your model will look like this:

Here \(\bar x\) is the average of all the one-hot vectors of the context words.

Once you have encoded all the context words, you can use \(\bar x\) as the input to your model.
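Here is a minimal sketch of how \(\bar x\) can be computed. It assumes a toy word2Ind dictionary (a word-to-index mapping) and column vectors of shape (V, 1). The helper names are hypothetical.

```python
import numpy as np


def one_hot(word, word2Ind):
    """Return the one-hot column vector of shape (V, 1) for a word."""
    vec = np.zeros((len(word2Ind), 1))
    vec[word2Ind[word]] = 1.0
    return vec


def context_average(context_words, word2Ind):
    """x-bar: the element-wise average of the context words' one-hot vectors."""
    return np.mean([one_hot(w, word2Ind) for w in context_words], axis=0)


word2Ind = {"am": 0, "because": 1, "happy": 2, "i": 3, "learning": 4}
x_bar = context_average(["i", "am", "because", "i"], word2Ind)
print(x_bar.ravel())  # "i" appears twice in the context, so it gets weight 0.5
```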

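The architecture you will be implementing is as follows:

\[ h = W_1 \bar{x} + b_1 \tag{1} \]

\[ a = \mathrm{ReLU}(h) \tag{2} \]

\[ z = W_2 a + b_2 \tag{3} \]

\[ \hat{y} = \mathrm{softmax}(z) \tag{4} \]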

Mapping words to indices and indices to words

We provide a helper function to create a dictionary that maps words to indices and indices to words.
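The assignment provides its own helper; the sketch below only illustrates the idea. It assumes the vocabulary is sorted alphabetically, which may not match the ordering the provided function uses.

```python
def get_dict(words):
    """Map each unique word to an index, and each index back to its word."""
    vocab = sorted(set(words))
    word2Ind = {word: i for i, word in enumerate(vocab)}
    Ind2word = {i: word for word, i in word2Ind.items()}
    return word2Ind, Ind2word


word2Ind, Ind2word = get_dict("i am happy because i am learning".split())
print(word2Ind)  # {'am': 0, 'because': 1, 'happy': 2, 'i': 3, 'learning': 4}
```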

Initializing the model

You will now initialize two matrices and two vectors.

The first matrix (\(W_1\)) is of dimension \(N \times V\), where \(V\) is the number of words in your vocabulary and \(N\) is the dimension of your word vector.

The second matrix (\(W_2\)) is of dimension \(V \times N\).

Vector \(b_1\) has dimensions \(N \times 1\).

Vector \(b_2\) has dimensions \(V \times 1\).

\(b_1\) and \(b_2\) are the bias vectors of the linear layers from matrices \(W_1\) and \(W_2\).

The overall structure of the model will look as in Figure 1, but at this stage we are just initializing the parameters.

Please use numpy.random.rand to generate matrices that are initialized with random values from a uniform distribution, ranging between 0 and 1.
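Here is a sketch of the initialization, assuming a signature initialize_model(N, V, random_seed). That signature is hypothetical here; use the one in your notebook. The exact values depend on the order in which the matrices are drawn, so this sketch is not guaranteed to reproduce the expected output.

```python
import numpy as np


def initialize_model(N, V, random_seed=1):
    """Return W1 (N, V), W2 (V, N), b1 (N, 1), b2 (V, 1) drawn uniformly from [0, 1)."""
    np.random.seed(random_seed)
    W1 = np.random.rand(N, V)
    W2 = np.random.rand(V, N)
    b1 = np.random.rand(N, 1)
    b2 = np.random.rand(V, 1)
    return W1, W2, b1, b2


W1, W2, b1, b2 = initialize_model(N=4, V=10)
print(W1.shape, W2.shape, b1.shape, b2.shape)  # (4, 10) (10, 4) (4, 1) (10, 1)
```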

Note: In the next cell you will encounter a random seed. Please DO NOT modify this seed so your solution can be tested correctly.

Expected Output

2.1 Softmax Function

Before we can start training the model, we need to implement the softmax function as defined in equation (5):

\[ \text{softmax}(z_i) = \frac{e^{z_i}}{\sum_{j=0}^{V-1} e^{z_j}} \tag{5} \]

Array indexing in code starts at 0.

\(V\) is the number of words in the vocabulary (which is also the number of rows of \(z\)).

\(i\) goes from 0 to \(V - 1\).

Instructions : Implement the softmax function below.

Assume that the input \(z\) to softmax is a 2D array.

Each training example is represented by a column of shape (V, 1) in this 2D array.

There may be more than one column in the 2D array, because you can put in a batch of examples to increase efficiency. Let's call the batch size lowercase \(m\), so the \(z\) array has shape (V, m).

When taking the sum in the denominator (over all \(V\) rows, from index 0 to \(V - 1\)), take the sum for each column (each example) separately.

numpy.sum (set the axis so that you take the sum of each column in z)
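Here is a minimal sketch of a column-wise softmax. It goes one step beyond the instructions above: it subtracts each column's maximum before exponentiating. This standard trick avoids overflow without changing the result, because the common factor cancels between numerator and denominator.

```python
import numpy as np


def softmax(z):
    """Column-wise softmax of z with shape (V, m)."""
    # Subtracting each column's max avoids overflow and leaves the result unchanged.
    e_z = np.exp(z - np.max(z, axis=0, keepdims=True))
    return e_z / np.sum(e_z, axis=0, keepdims=True)


z = np.array([[9, 8, 11, 10, 8.5],
              [7, 5, 13, 11, 10]])   # V = 2 rows, m = 5 examples
y_hat = softmax(z)
print(y_hat.sum(axis=0))  # each column sums to 1
```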

Expected Output

2.2 Forward Propagation

Implement the forward propagation \(z\) according to equations (1) to (3).

For that, you will use as activation the Rectified Linear Unit (ReLU) given by:

\[ f(h) = \max(0, h) \]

- You can use numpy.maximum(x1,x2) to get the maximum of two values

- Use numpy.dot(A,B) to matrix multiply A and B
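Here is a sketch of the forward pass that follows the instructions above. It assumes x has shape (V, m) and the parameters have the shapes from the initialization step. The random inputs are only for checking shapes. The function also returns the ReLU output, since backpropagation needs it.

```python
import numpy as np


def forward_prop(x, W1, W2, b1, b2):
    """Return z (V, m) and the hidden activation h (N, m) for input x (V, m)."""
    h = np.dot(W1, x) + b1   # equation (1)
    h = np.maximum(0, h)     # equation (2): ReLU
    z = np.dot(W2, h) + b2   # equation (3)
    return z, h


rng = np.random.default_rng(0)
N, V, m = 3, 5, 2
x = rng.random((V, m))
W1, W2 = rng.random((N, V)), rng.random((V, N))
b1, b2 = rng.random((N, 1)), rng.random((V, 1))
z, h = forward_prop(x, W1, W2, b1, b2)
print(z.shape, h.shape)  # (5, 2) (3, 2)
```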

Expected output

2.3 Cost Function

We have implemented the cross-entropy cost function for you.
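The cost is provided, so the sketch below is only for understanding. It assumes y holds one-hot target columns and y_hat holds the softmax outputs, both of shape (V, m). It averages the cross-entropy over the batch. The provided function may differ in details.

```python
import numpy as np


def compute_cost(y, y_hat, batch_size):
    """Average cross-entropy between one-hot targets y and predictions y_hat, both (V, m)."""
    log_probs = np.multiply(np.log(y_hat), y)  # keeps only log y_hat of the true word
    return -np.sum(log_probs) / batch_size


y = np.array([[1.0, 0.0],
              [0.0, 1.0]])        # two examples, V = 2
y_hat = np.array([[0.5, 0.25],
                  [0.5, 0.75]])
cost = compute_cost(y, y_hat, batch_size=2)
print(cost)  # (-log 0.5 - log 0.75) / 2
```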

2.4 Training the Model - Backpropagation

Now that you have understood how the CBOW model works, you will train it. You created a function for the forward propagation. Now you will implement a function that computes the gradients to backpropagate the errors.
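Below is a sketch of the gradient computation for the average cross-entropy cost, together with a finite-difference check. It makes a few assumptions:

- h is the ReLU output from the forward pass.
- The backward pass multiplies by the ReLU derivative (1 where h > 0, else 0).
- The notebook's back_prop signature and expected numbers may differ from this version.

The helper forward_and_cost is hypothetical and is included only so the block runs on its own.

```python
import numpy as np


def back_prop(x, y_hat, y, h, W1, W2, b1, b2, batch_size):
    """Gradients of the average cross-entropy cost with respect to W1, W2, b1, b2."""
    dz = y_hat - y                          # (V, m): softmax + cross-entropy
    dh = np.dot(W2.T, dz) * (h > 0)         # (N, m): ReLU passes gradient only where h > 0
    grad_W1 = np.dot(dh, x.T) / batch_size  # (N, V)
    grad_W2 = np.dot(dz, h.T) / batch_size  # (V, N)
    grad_b1 = np.sum(dh, axis=1, keepdims=True) / batch_size  # (N, 1)
    grad_b2 = np.sum(dz, axis=1, keepdims=True) / batch_size  # (V, 1)
    return grad_W1, grad_W2, grad_b1, grad_b2


def forward_and_cost(x, y, W1, W2, b1, b2):
    """Forward pass (ReLU hidden layer, softmax output) and average cross-entropy."""
    h = np.maximum(0, W1 @ x + b1)
    z = W2 @ h + b2
    e = np.exp(z - z.max(axis=0, keepdims=True))
    y_hat = e / e.sum(axis=0, keepdims=True)
    cost = -np.sum(y * np.log(y_hat)) / x.shape[1]
    return cost, h, y_hat


rng = np.random.default_rng(0)
N, V, m = 3, 4, 2
x = rng.random((V, m))
y = np.eye(V)[:, [1, 3]]                    # one-hot targets for two examples
W1, W2 = rng.random((N, V)), rng.random((V, N))
b1, b2 = rng.random((N, 1)), rng.random((V, 1))

cost, h, y_hat = forward_and_cost(x, y, W1, W2, b1, b2)
grad_W1, grad_W2, grad_b1, grad_b2 = back_prop(x, y_hat, y, h, W1, W2, b1, b2, m)

# Central finite-difference check on one entry of W1.
eps = 1e-6
W1_plus, W1_minus = W1.copy(), W1.copy()
W1_plus[0, 0] += eps
W1_minus[0, 0] -= eps
numeric = (forward_and_cost(x, y, W1_plus, W2, b1, b2)[0]
           - forward_and_cost(x, y, W1_minus, W2, b1, b2)[0]) / (2 * eps)
print(grad_W1[0, 0], numeric)  # the two numbers should agree closely
```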

2.5 Gradient Descent

Now that you have implemented a function to compute the gradients, you will implement batch gradient descent over your training set.

Hint: For that, you will use initialize_model and the back_prop functions which you just created (and the compute_cost function). You can also use the provided get_batches helper function:

for x, y in get_batches(data, word2Ind, V, C, batch_size):

Also: print the cost after each batch is processed (use batch size = 128)

Your numbers may differ a bit depending on which version of Python you're using.
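The loop below is a self-contained sketch of batch gradient descent for this model. It inlines the forward pass, cost and gradients so it runs on its own. A hypothetical toy_batches generator stands in for get_batches. The learning rate alpha and the toy sizes are illustrative, not the assignment's settings. In the notebook you would instead iterate over get_batches(data, word2Ind, V, C, 128) and print each batch cost.

```python
import numpy as np


def train_cbow(batches, N, V, alpha=0.03, random_seed=1):
    """Batch gradient descent for CBOW; `batches` yields (x, y) pairs of shape (V, m)."""
    rng = np.random.default_rng(random_seed)
    W1, W2 = rng.random((N, V)), rng.random((V, N))
    b1, b2 = rng.random((N, 1)), rng.random((V, 1))
    costs = []
    for x, y in batches:
        m = x.shape[1]
        # Forward propagation: ReLU hidden layer, softmax output.
        h = np.maximum(0, W1 @ x + b1)
        z = W2 @ h + b2
        e = np.exp(z - z.max(axis=0, keepdims=True))
        y_hat = e / e.sum(axis=0, keepdims=True)
        costs.append(-np.sum(y * np.log(y_hat)) / m)  # record the cost of this batch
        # Backpropagation (computed before any parameter changes).
        dz = y_hat - y
        dh = (W2.T @ dz) * (h > 0)
        grad_W1, grad_W2 = dh @ x.T / m, dz @ h.T / m
        grad_b1 = dh.sum(axis=1, keepdims=True) / m
        grad_b2 = dz.sum(axis=1, keepdims=True) / m
        # Gradient descent update.
        W1 -= alpha * grad_W1
        W2 -= alpha * grad_W2
        b1 -= alpha * grad_b1
        b2 -= alpha * grad_b2
    return (W1, W2, b1, b2), costs


def toy_batches(num_iters, V=5, m=4, seed=0):
    """Hypothetical stand-in for get_batches: yields the same small batch repeatedly."""
    rng = np.random.default_rng(seed)
    x = rng.random((V, m))
    x /= x.sum(axis=0)                            # columns sum to 1, like averaged one-hots
    y = np.eye(V)[:, rng.integers(0, V, size=m)]  # one-hot target columns
    for _ in range(num_iters):
        yield x, y


params, costs = train_cbow(toy_batches(300), N=3, V=5, alpha=0.1)
print(f"first batch cost {costs[0]:.4f}, last batch cost {costs[-1]:.4f}")
```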

3.0 Visualizing the word vectors

In this part you will visualize the word vectors trained using the function you just coded above.

You can see that man and king are next to each other. However, we have to be careful when interpreting these projected word vectors, since what we see depends on the projection (here, PCA), as shown in the following illustration.
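Here is a minimal PCA sketch using NumPy's SVD. It assumes one word vector per row, for example the transpose of \(W_1\), whose columns are the word vectors. The name compute_pca is hypothetical, and the notebook may compute PCA differently, for example via an eigendecomposition of the covariance matrix. Results should agree up to the sign of each component, which is arbitrary.

```python
import numpy as np


def compute_pca(X, n_components=2):
    """Project the rows of X (one word vector per row) onto the top principal components."""
    X_centered = X - X.mean(axis=0)
    # Rows of Vt are the principal directions, sorted by decreasing variance.
    _, _, Vt = np.linalg.svd(X_centered, full_matrices=False)
    return X_centered @ Vt[:n_components].T


rng = np.random.default_rng(0)
embeddings = rng.random((6, 10))   # e.g. 6 word vectors of dimension N = 10
coords = compute_pca(embeddings)
print(coords.shape)  # (6, 2): one 2-D point per word, ready to plot
```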


Word embeddings

This tutorial contains an introduction to word embeddings. You will train your own word embeddings using a simple Keras model for a sentiment classification task, and then visualize them in the Embedding Projector (shown in the image below).

Representing text as numbers

Machine learning models take vectors (arrays of numbers) as input. When working with text, the first thing you must do is come up with a strategy to convert strings to numbers (or to "vectorize" the text) before feeding it to the model. In this section, you will look at three strategies for doing so.

One-hot encodings

As a first idea, you might "one-hot" encode each word in your vocabulary. Consider the sentence "The cat sat on the mat". The vocabulary (or unique words) in this sentence is (cat, mat, on, sat, the). To represent each word, you will create a zero vector with length equal to the vocabulary size, then place a one in the index that corresponds to the word. This approach is shown in the following diagram.

To create a vector that contains the encoding of the sentence, you could then concatenate the one-hot vectors for each word.
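The one-hot scheme for this sentence can be sketched in NumPy as follows. The sketch lowercases the words so that "The" and "the" share one entry. It also assumes an alphabetical vocabulary order, which matches the order listed above.

```python
import numpy as np

sentence = "The cat sat on the mat".lower().split()
vocab = sorted(set(sentence))                  # ['cat', 'mat', 'on', 'sat', 'the']
index = {word: i for i, word in enumerate(vocab)}

# One row per word: a zero vector of length |vocab| with a single 1.
one_hot = np.zeros((len(sentence), len(vocab)), dtype=int)
one_hot[np.arange(len(sentence)), [index[w] for w in sentence]] = 1

# Concatenating the per-word vectors gives one vector for the whole sentence.
sentence_vector = one_hot.reshape(-1)
print(one_hot[1])              # 'cat' -> [1 0 0 0 0]
print(sentence_vector.shape)   # (30,)
```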

Encode each word with a unique number

A second approach you might try is to encode each word using a unique number. Continuing the example above, you could assign 1 to "cat", 2 to "mat", and so on. You could then encode the sentence "The cat sat on the mat" as a dense vector like [5, 1, 4, 3, 5, 2]. This approach is efficient. Instead of a sparse vector, you now have a dense one (where all elements are full).

There are two downsides to this approach, however:

The integer-encoding is arbitrary (it does not capture any relationship between words).

An integer-encoding can be challenging for a model to interpret. A linear classifier, for example, learns a single weight for each feature. Because there is no relationship between the similarity of any two words and the similarity of their encodings, this feature-weight combination is not meaningful.

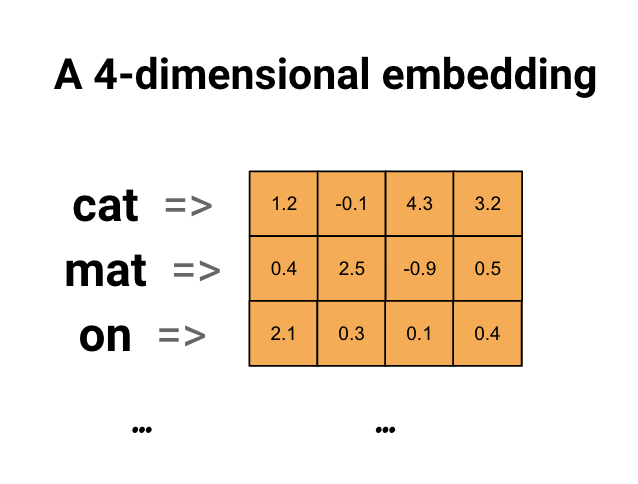

Word embeddings give us a way to use an efficient, dense representation in which similar words have a similar encoding. Importantly, you do not have to specify this encoding by hand. An embedding is a dense vector of floating point values (the length of the vector is a parameter you specify). Instead of specifying the values for the embedding manually, they are trainable parameters (weights learned by the model during training, in the same way a model learns weights for a dense layer). It is common to see word embeddings that are 8-dimensional (for small datasets), up to 1024-dimensions when working with large datasets. A higher dimensional embedding can capture fine-grained relationships between words, but takes more data to learn.

Above is a diagram for a word embedding. Each word is represented as a 4-dimensional vector of floating point values. Another way to think of an embedding is as a "lookup table". After these weights have been learned, you can encode each word by looking up the dense vector it corresponds to in the table.

Download the IMDb Dataset

You will use the Large Movie Review Dataset throughout the tutorial. You will train a sentiment classifier model on this dataset and in the process learn embeddings from scratch. To read more about loading a dataset from scratch, see the Loading text tutorial.

Download the dataset using the Keras file utility and take a look at the directories.

Take a look at the train/ directory. It has pos and neg folders with movie reviews labelled as positive and negative respectively. You will use reviews from pos and neg folders to train a binary classification model.

The train directory also has additional folders, which should be removed before creating the training dataset.
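Here is a sketch of that clean-up step using only the standard library. It assumes the label folders are pos and neg and deletes every other sub-directory. In the IMDb archive, that extra folder is unsup, which holds unlabeled reviews. The helper name is hypothetical, and the demo runs on a throwaway temporary directory rather than the real dataset.

```python
import os
import shutil
import tempfile


def keep_only_label_dirs(train_dir, keep=("pos", "neg")):
    """Delete every sub-directory of train_dir except the label folders in `keep`."""
    for name in os.listdir(train_dir):
        path = os.path.join(train_dir, name)
        if os.path.isdir(path) and name not in keep:
            shutil.rmtree(path)


# Demo on a temporary directory tree (folder names are illustrative).
train_dir = tempfile.mkdtemp()
for name in ("pos", "neg", "unsup"):
    os.makedirs(os.path.join(train_dir, name))
keep_only_label_dirs(train_dir)
print(sorted(os.listdir(train_dir)))  # ['neg', 'pos']
```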

Next, create a tf.data.Dataset using tf.keras.utils.text_dataset_from_directory. You can read more about using this utility in this text classification tutorial.

Use the train directory to create both train and validation datasets with a split of 20% for validation.

Take a look at a few movie reviews and their labels (1: positive, 0: negative) from the train dataset.

Configure the dataset for performance

These are two important methods you should use when loading data to make sure that I/O does not become blocking.

.cache() keeps data in memory after it's loaded off disk. This will ensure the dataset does not become a bottleneck while training your model. If your dataset is too large to fit into memory, you can also use this method to create a performant on-disk cache, which is more efficient to read than many small files.

.prefetch() overlaps data preprocessing and model execution while training.

You can learn more about both methods, as well as how to cache data to disk, in the data performance guide.

Using the Embedding layer

Keras makes it easy to use word embeddings. Take a look at the Embedding layer.

The Embedding layer can be understood as a lookup table that maps from integer indices (which stand for specific words) to dense vectors (their embeddings). The dimensionality (or width) of the embedding is a parameter you can experiment with to see what works well for your problem, much in the same way you would experiment with the number of neurons in a Dense layer.

When you create an Embedding layer, the weights for the embedding are randomly initialized (just like any other layer). During training, they are gradually adjusted via backpropagation. Once trained, the learned word embeddings will roughly encode similarities between words (as they were learned for the specific problem your model is trained on).

If you pass an integer to an embedding layer, the result replaces each integer with the vector from the embedding table:

For text or sequence problems, the Embedding layer takes a 2D tensor of integers, of shape (samples, sequence_length), where each entry is a sequence of integers. It can embed sequences of variable lengths. You could feed into the embedding layer above batches with shapes (32, 10) (batch of 32 sequences of length 10) or (64, 15) (batch of 64 sequences of length 15).

The returned tensor has one more axis than the input; the embedding vectors are aligned along the new last axis. Pass it a (2, 3) input batch and the output is (2, 3, N).

When given a batch of sequences as input, an embedding layer returns a 3D floating point tensor, of shape (samples, sequence_length, embedding_dimensionality). To convert from this sequence of variable length to a fixed representation there are a variety of standard approaches. You could use an RNN, Attention, or pooling layer before passing it to a Dense layer. This tutorial uses pooling because it's the simplest. The Text Classification with an RNN tutorial is a good next step.
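To make the shape bookkeeping concrete without TensorFlow, here is a NumPy sketch of what an embedding lookup followed by average pooling computes. The table is random and the sizes are illustrative. The sketch mimics the layers' behavior, not their implementation.

```python
import numpy as np

vocab_size, embedding_dim = 1000, 5
rng = np.random.default_rng(0)
table = rng.uniform(-0.05, 0.05, size=(vocab_size, embedding_dim))  # random lookup table

batch = np.array([[0, 1, 2],
                  [3, 4, 5]])        # (samples, sequence_length) = (2, 3)
embedded = table[batch]              # integer-array indexing adds a new last axis
print(embedded.shape)                # (2, 3, 5)

pooled = embedded.mean(axis=1)       # average over the sequence axis
print(pooled.shape)                  # (2, 5): a fixed-length vector per example
```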

Text preprocessing

Next, define the dataset preprocessing steps required for your sentiment classification model. Initialize a TextVectorization layer with the desired parameters to vectorize movie reviews. You can learn more about using this layer in the Text Classification tutorial.
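The layer's default settings do the following:

- lowercase the text
- strip punctuation
- split on whitespace
- reserve index 0 for padding and index 1 for the out-of-vocabulary token "[UNK]"

To illustrate, here is a simplified pure-Python imitation of that behavior. It is a sketch, not the layer's implementation. The helper names are hypothetical, and details such as tie-breaking between equally frequent words may differ.

```python
import re
import string
from collections import Counter


def standardize(text):
    """Lowercase and strip punctuation (a simplified standardization)."""
    return re.sub(f"[{re.escape(string.punctuation)}]", "", text.lower())


def build_vocab(texts, max_tokens):
    """Index 0 is padding, index 1 is the OOV token, then the most frequent tokens."""
    counts = Counter(tok for t in texts for tok in standardize(t).split())
    return ["", "[UNK]"] + [w for w, _ in counts.most_common(max_tokens - 2)]


def vectorize(text, vocab, sequence_length):
    """Map tokens to indices (1 if unknown), then pad with 0 or truncate."""
    index = {w: i for i, w in enumerate(vocab)}
    ids = [index.get(tok, 1) for tok in standardize(text).split()]
    return (ids + [0] * sequence_length)[:sequence_length]


vocab = build_vocab(["A great movie!", "A terrible, terrible movie."], max_tokens=10)
ids = vectorize("A great film", vocab, sequence_length=5)
print(vocab)
print(ids)  # "film" is unknown, so it maps to 1; the tail is padded with 0
```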

Create a classification model

Use the Keras Sequential API to define the sentiment classification model. In this case it is a "Continuous bag of words" style model.

- The TextVectorization layer transforms strings into vocabulary indices. You have already initialized vectorize_layer as a TextVectorization layer and built its vocabulary by calling adapt on text_ds. Now vectorize_layer can be used as the first layer of your end-to-end classification model, feeding transformed strings into the Embedding layer.

- The Embedding layer takes the integer-encoded vocabulary and looks up the embedding vector for each word-index. These vectors are learned as the model trains. The vectors add a dimension to the output array. The resulting dimensions are: (batch, sequence, embedding).

- The GlobalAveragePooling1D layer returns a fixed-length output vector for each example by averaging over the sequence dimension. This allows the model to handle input of variable length, in the simplest way possible.

- The fixed-length output vector is piped through a fully-connected (Dense) layer with 16 hidden units.

- The last layer is densely connected with a single output node.
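The forward pass of this architecture can be sketched in NumPy. The sketch assumes a ReLU activation on the 16-unit hidden layer and a single raw output value (a logit) at the end. The vocabulary size and the random weights are illustrative; in the tutorial, these weights are learned. Because the embeddings are averaged, the output does not depend on word order, which is what makes this a bag-of-words style model.

```python
import numpy as np

rng = np.random.default_rng(0)
vocab_size, embedding_dim, hidden = 20, 16, 16

# Parameters (random here; in the tutorial they are learned during training).
E = rng.normal(scale=0.1, size=(vocab_size, embedding_dim))         # Embedding table
W1, b1 = rng.normal(scale=0.1, size=(embedding_dim, hidden)), np.zeros(hidden)
W2, b2 = rng.normal(scale=0.1, size=(hidden, 1)), np.zeros(1)


def forward(token_ids):
    """token_ids: (batch, sequence) integer array -> one logit per example, shape (batch, 1)."""
    x = E[token_ids]                      # (batch, sequence, embedding)
    x = x.mean(axis=1)                    # average pooling -> (batch, embedding)
    x = np.maximum(0, x @ W1 + b1)        # Dense(16) with an assumed ReLU
    return x @ W2 + b2                    # Dense(1): a single output node


batch = np.array([[2, 5, 7, 0],
                  [3, 3, 9, 1]])
print(forward(batch).shape)  # (2, 1)
```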

Compile and train the model

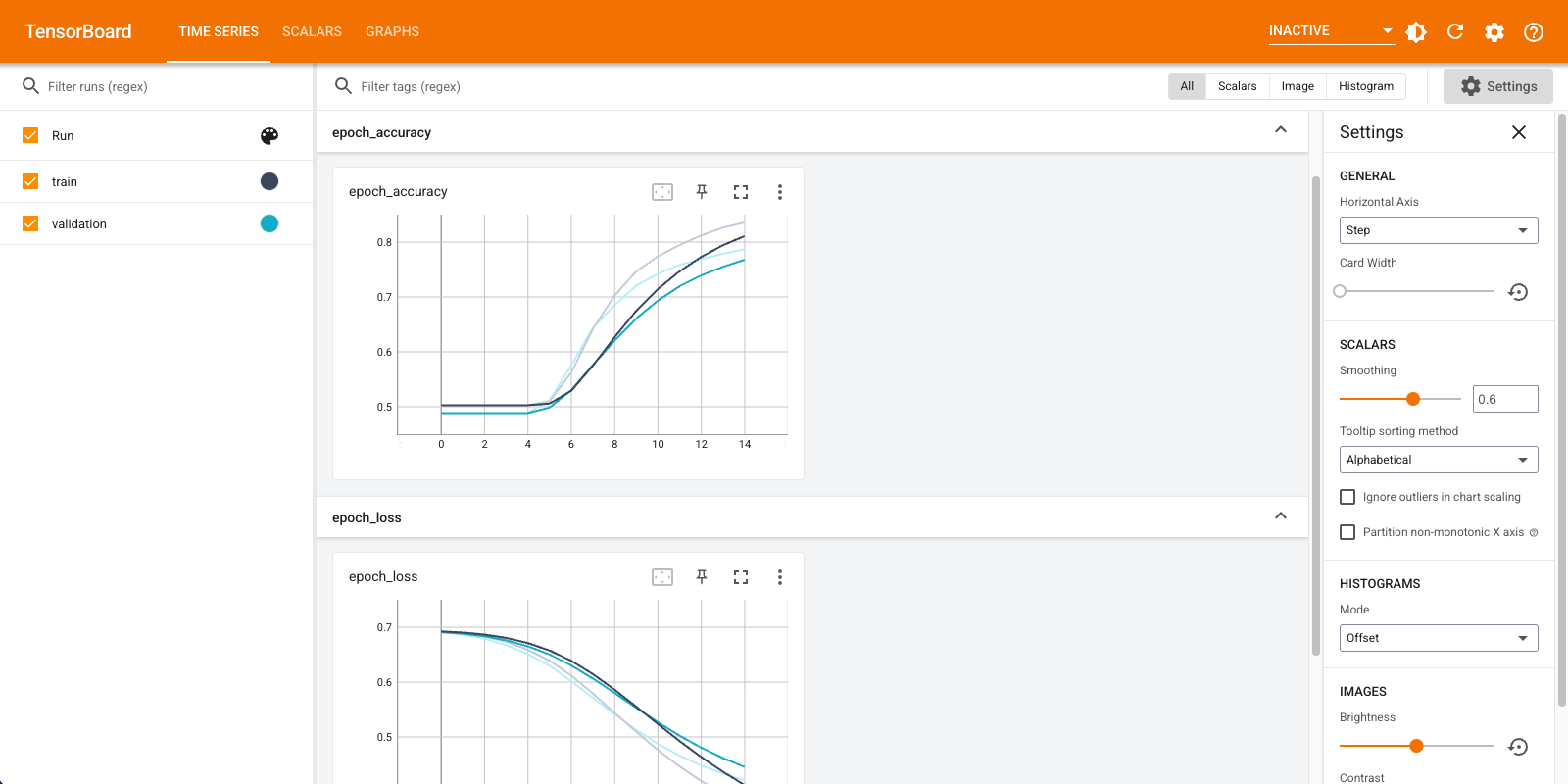

You will use TensorBoard to visualize metrics including loss and accuracy. Create a tf.keras.callbacks.TensorBoard.

Compile and train the model using the Adam optimizer and BinaryCrossentropy loss.

With this approach the model reaches a validation accuracy of around 78% (note that the model is overfitting since training accuracy is higher).

You can look into the model summary to learn more about each layer of the model.

Visualize the model metrics in TensorBoard.

Retrieve the trained word embeddings and save them to disk

Next, retrieve the word embeddings learned during training. The embeddings are weights of the Embedding layer in the model. The weights matrix is of shape (vocab_size, embedding_dimension).

Obtain the weights from the model using get_layer() and get_weights(). The get_vocabulary() function provides the vocabulary to build a metadata file with one token per line.

Write the weights to disk. To use the Embedding Projector, you will upload two files in tab-separated format: a file of vectors (containing the embeddings), and a file of metadata (containing the words).

If you are running this tutorial in Colaboratory, you can use the following snippet to download these files to your local machine (or use the file browser, View -> Table of contents -> File browser).
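Here is a sketch of writing the two files with plain Python. In the tutorial, the weights would be the Embedding layer's matrix (the first element of get_weights()), and the vocabulary would be the list returned by get_vocabulary(). Here both are small stand-ins. The sketch skips index 0 on the assumption that it is the padding token, so the two files stay aligned line by line.

```python
import os
import tempfile

import numpy as np


def save_embeddings(weights, vocab, out_dir):
    """Write vecs.tsv (one tab-separated vector per line) and meta.tsv (one token per line)."""
    vecs_path = os.path.join(out_dir, "vecs.tsv")
    meta_path = os.path.join(out_dir, "meta.tsv")
    with open(vecs_path, "w", encoding="utf-8") as out_v:
        with open(meta_path, "w", encoding="utf-8") as out_m:
            for index, word in enumerate(vocab):
                if index == 0:
                    continue  # skip index 0, assumed to be the padding token
                out_v.write("\t".join(str(x) for x in weights[index]) + "\n")
                out_m.write(word + "\n")
    return vecs_path, meta_path


vocab = ["", "[UNK]", "movie", "great"]            # stand-in for get_vocabulary()
weights = np.arange(8, dtype=float).reshape(4, 2)  # stand-in (vocab_size, embedding_dim) matrix
vecs_path, meta_path = save_embeddings(weights, vocab, tempfile.mkdtemp())
with open(meta_path, encoding="utf-8") as f:
    print(f.read().splitlines())  # ['[UNK]', 'movie', 'great']
```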

Visualize the embeddings

To visualize the embeddings, upload them to the embedding projector.

Open the Embedding Projector (this can also run in a local TensorBoard instance).

Click on "Load data".

Upload the two files you created above: vecs.tsv and meta.tsv.

The embeddings you have trained will now be displayed. You can search for words to find their closest neighbors. For example, try searching for "beautiful". You may see neighbors like "wonderful".

This tutorial has shown you how to train and visualize word embeddings from scratch on a small dataset.

To train word embeddings using Word2Vec algorithm, try the Word2Vec tutorial.

To learn more about advanced text processing, read the Transformer model for language understanding.

Except as otherwise noted, the content of this page is licensed under the Creative Commons Attribution 4.0 License, and code samples are licensed under the Apache 2.0 License. For details, see the Google Developers Site Policies. Java is a registered trademark of Oracle and/or its affiliates.

Last updated 2023-05-27 UTC.


Word Embeddings: Encoding Lexical Semantics

Word embeddings are dense vectors of real numbers, one per word in your vocabulary. In NLP, it is almost always the case that your features are words! But how should you represent a word in a computer? You could store its ASCII character representation, but that only tells you what the word is; it doesn’t say much about what it means (you might be able to derive its part of speech from its affixes, or properties from its capitalization, but not much). Even more, in what sense could you combine these representations? We often want dense outputs from our neural networks, where the inputs are \(|V|\)-dimensional (\(V\) being our vocabulary), but often the outputs are only a few dimensional (if we are only predicting a handful of labels, for instance). How do we get from a massive dimensional space to a smaller dimensional space?

How about instead of ASCII representations, we use a one-hot encoding? That is, we represent the word \(w\) by

\[ \overbrace{\left[ 0, 0, \dots, 1, \dots, 0, 0 \right]}^{|V| \text{ elements}} \]

where the 1 is in a location unique to \(w\). Any other word will have a 1 in some other location, and a 0 everywhere else.

There is an enormous drawback to this representation, besides just how huge it is. It basically treats all words as independent entities with no relation to each other. What we really want is some notion of similarity between words. Why? Let’s see an example.

Suppose we are building a language model. Suppose we have seen the sentences

- The mathematician ran to the store.

- The physicist ran to the store.

- The mathematician solved the open problem.

in our training data. Now suppose we get a new sentence never before seen in our training data:

- The physicist solved the open problem.

Our language model might do OK on this sentence, but wouldn’t it be much better if we could use the following two facts:

- We have seen mathematician and physicist in the same role in a sentence. Somehow they have a semantic relation.

- We have seen mathematician in the same role in this new unseen sentence as we are now seeing physicist.

and then infer that physicist is actually a good fit in the new unseen sentence? This is what we mean by a notion of similarity: we mean semantic similarity, not simply having similar orthographic representations. It is a technique to combat the sparsity of linguistic data, by connecting the dots between what we have seen and what we haven’t. This example of course relies on a fundamental linguistic assumption: that words appearing in similar contexts are related to each other semantically. This is called the distributional hypothesis.

Getting Dense Word Embeddings

How can we solve this problem? That is, how could we actually encode semantic similarity in words? Maybe we think up some semantic attributes. For example, we see that both mathematicians and physicists can run, so maybe we give these words a high score for the “is able to run” semantic attribute. Think of some other attributes, and imagine what you might score some common words on those attributes.

If each attribute is a dimension, then we might give each word a vector, like this:

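Then we can get a measure of similarity between these words by doing:

\[ \text{Similarity}(\text{physicist}, \text{mathematician}) = q_\text{physicist} \cdot q_\text{mathematician} \]

Although it is more common to normalize by the lengths:

\[ \text{Similarity}(\text{physicist}, \text{mathematician}) = \frac{q_\text{physicist} \cdot q_\text{mathematician}}{\| q_\text{physicist} \| \, \| q_\text{mathematician} \|} = \cos(\phi) \]

where \(\phi\) is the angle between the two vectors. That way, extremely similar words (words whose embeddings point in the same direction) will have similarity 1. Extremely dissimilar words should have similarity -1.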
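Both similarity measures can be checked numerically. The attribute names and scores below are made up for illustration. The last line shows that two different one-hot vectors have cosine similarity 0.

```python
import numpy as np


def cosine_similarity(u, v):
    """(u . v) / (||u|| ||v||), i.e. cos(phi) for the angle phi between u and v."""
    return np.dot(u, v) / (np.linalg.norm(u) * np.linalg.norm(v))


# Illustrative attribute scores: [can run, likes coffee, majored in physics].
q_mathematician = np.array([2.0, 9.0, -5.0])
q_physicist = np.array([2.5, 9.0, 6.0])

print(np.dot(q_physicist, q_mathematician))             # unnormalized similarity: 56.0
print(cosine_similarity(q_physicist, q_mathematician))  # normalized, always in [-1, 1]

# One-hot vectors for two different words are orthogonal: similarity 0.
print(cosine_similarity(np.array([1.0, 0.0, 0.0]), np.array([0.0, 1.0, 0.0])))  # 0.0
```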

You can think of the sparse one-hot vectors from the beginning of this section as a special case of these new vectors we have defined, where each word basically has similarity 0, and we gave each word some unique semantic attribute. These new vectors are dense, which is to say their entries are (typically) non-zero.

But these new vectors are a big pain: you could think of thousands of different semantic attributes that might be relevant to determining similarity, and how on earth would you set the values of the different attributes? Central to the idea of deep learning is that the neural network learns representations of the features, rather than requiring the programmer to design them herself. So why not just let the word embeddings be parameters in our model, and then be updated during training? This is exactly what we will do. We will have some latent semantic attributes that the network can, in principle, learn. Note that the word embeddings will probably not be interpretable. That is, although with our hand-crafted vectors above we can see that mathematicians and physicists are similar in that they both like coffee, if we allow a neural network to learn the embeddings and see that both mathematicians and physicists have a large value in the second dimension, it is not clear what that means. They are similar in some latent semantic dimension, but this probably has no interpretation to us.


Assignment 4 - Naive Machine Translation and LSH


You will now implement your first machine translation system and then you will see how locality sensitive hashing works. Let’s get started by importing the required functions!

If you are running this notebook on your local computer, don’t forget to download the Twitter samples and stopwords from NLTK.

Important Note on Submission to the AutoGrader

Before submitting your assignment to the AutoGrader, please make sure that:

- You have not added any extra print statement(s) in the assignment.

- You have not added any extra code cell(s) in the assignment.

- You have not changed any of the function parameters.

- You are not using any global variables inside your graded exercises. Unless specifically instructed to do so, please refrain from it and use local variables instead.

- You are not changing the assignment code where it is not required, for example by creating extra variables.

If you do not follow these guidelines, you may get an error such as "Grader not found" (or a similarly unexpected error) upon submitting your assignment. Before asking for help or debugging the errors in your assignment, check for these first. If this is the case, and you don’t remember the changes you have made, you can get a fresh copy of the assignment by following these instructions.

This assignment covers the following topics:

1. The word embeddings data for English and French words

1.1 Generate embedding and transform matrices

2. Translations

2.1 Translation as linear transformation of embeddings

2.2 Testing the translation

3. LSH and document search

3.1 Getting the document embeddings

3.2 Looking up the tweets

3.3 Finding the most similar tweets with LSH

3.4 Getting the hash number for a vector

3.5 Creating a hash table

Exercise 10

3.6 Creating all hash tables

Exercise 11

1. The word embeddings data for English and French words

Write a program that translates English to French.

The full dataset for English embeddings is about 3.64 gigabytes, and the French embeddings are about 629 megabytes. To prevent the Coursera workspace from crashing, we’ve extracted a subset of the embeddings for the words that you’ll use in this assignment.

The subset of data

To do the assignment on the Coursera workspace, we’ll use the subset of word embeddings.

Look at the data

en_embeddings_subset: the key is an English word, and the value is a 300 dimensional array, which is the embedding for that word.

fr_embeddings_subset: the key is a French word, and the value is a 300 dimensional array, which is the embedding for that word.

Load two dictionaries mapping English words to French words

A training dictionary

and a testing dictionary.

Looking at the English-French dictionary

en_fr_train is a dictionary where the key is the English word and the value is the French translation of that English word.

en_fr_test is similar to en_fr_train, but is a test set. We won’t look at it until we get to testing.

1.1 Generate embedding and transform matrices

Exercise 01: Translating the English dictionary to French by using embeddings

You will now implement a function get_matrices , which takes the loaded data and returns matrices X and Y .

en_fr: English to French dictionary

en_embeddings: English to embeddings dictionary

fr_embeddings: French to embeddings dictionary

It returns matrix X and matrix Y, where each row in X is the word embedding for an English word, and the same row in Y is the word embedding for the French version of that English word.

Use the en_fr dictionary to ensure that the ith row in the X matrix corresponds to the ith row in the Y matrix.

Instructions: Complete the function get_matrices():

Iterate over the English words in the en_fr dictionary.

Check that the English word and its French translation both have embeddings.

- Sets are useful data structures that can be used to check if an item is a member of a group.

- You can get the words that have embeddings in a language by using the dictionary's keys() method.

- Collect the vectors for `X` and `Y` in lists, keeping both lists in the same order. You can use np.vstack() to merge each list into a NumPy matrix.

- numpy.vstack stacks the items in a list as rows in a matrix.
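One way to write get_matrices() is sketched below. It assumes the argument order en_fr, en_embeddings, fr_embeddings used in the list above. The graded signature in the notebook may order the arguments differently. The toy dictionaries are illustrative.

```python
import numpy as np


def get_matrices(en_fr, en_embeddings, fr_embeddings):
    """Return X (English) and Y (French) with one aligned word pair per row."""
    X_l, Y_l = [], []
    english_set = set(en_embeddings.keys())   # English words that have an embedding
    french_set = set(fr_embeddings.keys())    # French words that have an embedding
    for en_word, fr_word in en_fr.items():
        # Keep the pair only if both words have an embedding, so rows stay aligned.
        if en_word in english_set and fr_word in french_set:
            X_l.append(en_embeddings[en_word])
            Y_l.append(fr_embeddings[fr_word])
    return np.vstack(X_l), np.vstack(Y_l)


en_fr = {"cat": "chat", "dog": "chien", "house": "maison"}
en_embeddings = {"cat": np.array([1.0, 0.0]), "dog": np.array([0.0, 1.0]),
                 "house": np.array([1.0, 1.0])}
fr_embeddings = {"chat": np.array([0.9, 0.1]), "chien": np.array([0.1, 0.9])}  # no "maison"

X, Y = get_matrices(en_fr, en_embeddings, fr_embeddings)
print(X.shape, Y.shape)  # (2, 2) (2, 2): "house" is skipped
```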

Now we will use the function get_matrices() to obtain X_train and Y_train, the matrices of English and French word embeddings in their respective vector spaces.

2. Translations

Write a program that translates English words to French words using word embeddings and vector space models.

2.1 Translation as linear transformation of embeddings

Given dictionaries of English and French word embeddings, you will create a transformation matrix R.

Given an English word embedding, \(\mathbf{e}\), you can compute the product \(\mathbf{eR}\) to get a new word embedding \(\mathbf{f}\).

Both \(\mathbf{e}\) and \(\mathbf{f}\) are row vectors.

You can then compute the nearest neighbors to f in the French embeddings and recommend the word that is most similar to the transformed word embedding.
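Here is a sketch of that last step: transform \(\mathbf{e}\) with \(\mathbf{R}\), then rank the French vectors by cosine similarity. The 2-dimensional embeddings, the matrix R and the helper name nearest_neighbor are all illustrative; the real embeddings are 300-dimensional.

```python
import numpy as np


def nearest_neighbor(v, candidates, k=1):
    """Indices of the k rows of `candidates` most cosine-similar to v, best first."""
    sims = candidates @ v / (np.linalg.norm(candidates, axis=1) * np.linalg.norm(v))
    return np.argsort(sims)[::-1][:k]


R = np.array([[0.0, 1.0],
              [1.0, 0.0]])               # toy transformation matrix
e = np.array([[2.0, 0.1]])               # English embedding as a row vector
f = (e @ R)[0]                           # eR -> [0.1, 2.0]
fr_matrix = np.array([[1.0, 0.0],
                      [0.0, 1.0],
                      [1.0, 1.0]])       # one French vector per row
fr_words = ["chat", "chien", "maison"]
print(fr_words[nearest_neighbor(f, fr_matrix)[0]])  # chien
```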

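Describing translation as a minimization problem

Find a matrix \(\mathbf{R}\) that minimizes the following equation:

\[ \arg\min_{\mathbf{R}} \| \mathbf{XR} - \mathbf{Y} \|_F \]

Frobenius norm

The Frobenius norm of a matrix \(A\) (assuming it is of dimension \(m,n\)) is defined as the square root of the sum of the absolute squares of its elements:

\[ \|\mathbf{A}\|_F \equiv \sqrt{\sum_{i=1}^{m} \sum_{j=1}^{n} |a_{ij}|^2} \]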
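Here is a quick numeric check of the definition. For a 2 × 2 matrix of 2s, the sum of squares is 16, so the Frobenius norm is 4. NumPy's np.linalg.norm with ord="fro" should agree.

```python
import numpy as np

A = np.array([[2.0, 2.0],
              [2.0, 2.0]])
fro_manual = np.sqrt(np.sum(np.square(A)))   # sqrt(4 + 4 + 4 + 4) = 4
fro_numpy = np.linalg.norm(A, ord="fro")     # NumPy's built-in Frobenius norm
print(fro_manual, fro_numpy)  # 4.0 4.0
```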

Actual loss function

In real-world applications, the Frobenius norm loss

$$ \|\mathbf{XR}-\mathbf{Y}\|_{F} $$

is often replaced by its squared value divided by \(m\):

$$ \frac{1}{m}\|\mathbf{XR}-\mathbf{Y}\|_{F}^{2} $$

where \(m\) is the number of examples (rows in \(\mathbf{X}\)).

Minimizing this loss function yields the same R as minimizing the original Frobenius norm.

The reason for taking the square is that the gradient of the squared Frobenius norm is easier to compute.

The reason for dividing by \(m\) is that we’re more interested in the average loss per embedding than in the loss for the entire training set.

The loss for the whole training set grows with the number of words (training examples), so taking the average lets us track the loss regardless of the size of the training set.

[Optional] Detailed explanation of why we use the squared norm instead of the norm:
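A short derivation sketch in the notation of this section. Squaring and scaling by \(1/m\) are monotonic on non-negative values, so the minimizer does not change; the gradient of the squared norm, however, no longer has the norm in its denominator.

```latex
% Same minimizer: for a, b >= 0 and m > 0,  a <= b  <=>  a^2/m <= b^2/m
\arg\min_{\mathbf{R}} \|\mathbf{XR}-\mathbf{Y}\|_F
  = \arg\min_{\mathbf{R}} \tfrac{1}{m}\|\mathbf{XR}-\mathbf{Y}\|_F^2

% Gradient of the squared, averaged norm (no square root involved)
\nabla_{\mathbf{R}}\, \tfrac{1}{m}\|\mathbf{XR}-\mathbf{Y}\|_F^2
  = \tfrac{2}{m}\,\mathbf{X}^{T}(\mathbf{XR}-\mathbf{Y})

% Gradient of the plain norm (chain rule through the square root);
% undefined where XR - Y = 0
\nabla_{\mathbf{R}}\, \|\mathbf{XR}-\mathbf{Y}\|_F
  = \frac{\mathbf{X}^{T}(\mathbf{XR}-\mathbf{Y})}{\|\mathbf{XR}-\mathbf{Y}\|_F}
```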

Exercise 02: Implementing the translation mechanism described in this section

Step 1: Computing the loss

The loss function will be the squared Frobenius norm of the difference between the target matrix \(\mathbf{Y}\) and its approximation \(\mathbf{XR}\), divided by the number of training examples \(m\).

Its formula is:

$$ L(X, Y, R)=\frac{1}{m}\sum_{i=1}^{m} \sum_{j=1}^{n}\left( a_{i j} \right)^{2} $$

where \(a_{i j}\) is the value in the \(i\)-th row and \(j\)-th column of the matrix \(\mathbf{XR}-\mathbf{Y}\).

Instructions: complete the compute_loss() function

Compute the approximation of Y by matrix multiplying X and R.

Compute the difference XR - Y.

Compute the squared Frobenius norm of the difference and divide it by \(m\).

- Useful functions: numpy.dot, numpy.sum, numpy.square, numpy.linalg.norm

- Be careful about which operation is elementwise and which operation is a matrix multiplication.

- Try to use matrix operations instead of the numpy norm function. If you choose to use the norm function, take care with its extra arguments, and remember that it returns the norm itself, not its square, so you must square it to get the loss.
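A minimal sketch of the steps above, assuming `X` has shape (m, d_en), `R` has shape (d_en, d_fr) and `Y` has shape (m, d_fr). It computes \(\frac{1}{m}\|\mathbf{XR}-\mathbf{Y}\|_F^2\) with matrix operations only.

```python
import numpy as np


def compute_loss(X, Y, R):
    """Squared Frobenius norm of XR - Y, divided by the number of rows m."""
    m = X.shape[0]
    diff = np.dot(X, R) - Y                      # matrix product, then elementwise difference
    sum_diff_squared = np.sum(np.square(diff))   # elementwise square, then sum of all entries
    return sum_diff_squared / m
```

As a cross-check, `np.linalg.norm(np.dot(X, R) - Y, "fro") ** 2 / m` gives the same value; note the explicit `** 2`.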

Expected output:

Exercise 03

Step 2: Computing the gradient of the loss with respect to the transform matrix R

Calculate the gradient of the loss with respect to transform matrix R .

The gradient is a matrix that encodes how much a small change in R affects the loss function.

The gradient points in the direction in which the loss increases fastest, so we move R in the opposite direction to minimize the loss.

\(m\) is the number of training examples (number of rows in \(X\) ).

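The formula for the gradient of the loss function \(L(X,Y,R)\) is:

$$ \frac{d}{dR}L(X,Y,R)=\frac{d}{dR}\Big(\frac{1}{m}\| X R -Y\|_{F}^{2}\Big) = \frac{2}{m}X^{T} (X R - Y) $$

Instructions: Complete the compute_gradient() function.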

- Transposing in numpy

- Finding out the dimensions of matrices in numpy

- Remember to use numpy.dot for matrix multiplication
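A minimal sketch of the gradient \(\frac{2}{m}X^{T}(XR - Y)\), assuming the same shapes as for the loss. The result has the same shape as `R`, which is a useful sanity check.

```python
import numpy as np


def compute_gradient(X, Y, R):
    """Gradient of L(X, Y, R) = (1/m) * ||XR - Y||_F^2 with respect to R."""
    m = X.shape[0]  # number of training examples (rows of X)
    # (d_en x m) @ (m x d_fr) -> (d_en x d_fr), the same shape as R
    return np.dot(X.T, np.dot(X, R) - Y) * 2 / m
```

Comparing one entry of this matrix against a finite-difference estimate of the loss is a cheap way to catch a missing transpose or a wrong factor.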

Step 3: Finding the optimal R with the gradient descent algorithm

Gradient descent

Gradient descent is an iterative algorithm for finding the optimum (here, the minimum) of a function.

Earlier, we mentioned that the gradient of the loss with respect to the matrix encodes how much a tiny change in some coordinate of that matrix affects the loss function.

Gradient descent uses that information to iteratively change matrix R until we reach a point where the loss is minimized.

Training with a fixed number of iterations

Most of the time we iterate for a fixed number of training steps rather than iterating until the loss falls below a threshold.

OPTIONAL: explanation for a fixed number of iterations

- You cannot rely on the training loss getting low; what you really want is for the validation loss to go down, or the validation accuracy to go up. And indeed, in some cases people train until validation accuracy reaches a threshold, or (commonly known as "early stopping") until the validation accuracy starts to go down, which is a sign of over-fitting.

- Why not always do "early stopping"? Mostly because well-regularized models on larger data sets never stop improving. Especially in NLP, you can often continue training for months and the model will keep getting slightly better. This is also why it's hard to just stop at a threshold: unless there's an external customer setting the threshold, why stop, and where do you put the threshold?

- Stopping after a certain number of steps has the advantage that you know how long your training will take, so you can keep some sanity and not train for months. You can then try to get the best performance within this time budget. Another advantage is that you can fix your learning rate schedule, e.g., lower the learning rate when 10% of the training steps remain, and lower it again when 1% remain. Such learning rate schedules help a lot, but are harder to do if you don't know how long you're training.

Pseudocode:

Calculate the gradient \(g\) of the loss with respect to the matrix \(R\).

Update \(R\) with the formula:

$$ R_{\text{new}}= R_{\text{old}}-\alpha g $$

where \(\alpha\) is the learning rate, which is a scalar.

Learning rate

The learning rate or “step size” \(\alpha\) is a coefficient that decides how much we want to change \(R\) in each step.

If we change \(R\) too much, we could overshoot the optimum by taking too large a step.

If we make only small changes to \(R\) , we will need many steps to reach the optimum.

Learning rate \(\alpha\) is used to control those changes.

Values of \(\alpha\) are chosen depending on the problem, and we’ll use learning_rate = 0.0003 as the default value for our algorithm.
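To see the effect of the step size in isolation, here is a toy illustration that is not part of the assignment: gradient descent on \(f(x) = x^2\) (gradient \(2x\)) with three step sizes chosen only for demonstration. Each update multiplies \(x\) by \(1 - 2\alpha\), so a tiny \(\alpha\) barely moves, a moderate one converges, and one above \(1\) overshoots further on every step.

```python
def gradient_descent_1d(alpha, x0=1.0, steps=50):
    """Minimize f(x) = x**2 (gradient 2x) with a fixed step size alpha."""
    x = x0
    for _ in range(steps):
        x = x - alpha * 2 * x  # x_new = x_old - alpha * gradient
    return x


for alpha in (0.001, 0.1, 1.1):
    # 0.001: still far from 0 (too slow); 0.1: close to 0; 1.1: diverges
    print(alpha, gradient_descent_1d(alpha))
```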

Exercise 04

Instructions: implement align_embeddings()

- Use the 'compute_gradient()' function to get the gradient in each step
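A minimal sketch of the training loop, assuming a random uniform initialization of `R`. The learning rate default of 0.0003 comes from the text; the `train_steps=100` default and the `seed` parameter are illustrative assumptions. `compute_gradient()` is repeated so the block runs on its own.

```python
import numpy as np


def compute_gradient(X, Y, R):
    m = X.shape[0]
    return np.dot(X.T, np.dot(X, R) - Y) * 2 / m


def align_embeddings(X, Y, train_steps=100, learning_rate=0.0003, seed=0):
    """Find R minimizing (1/m)||XR - Y||_F^2 by fixed-step gradient descent.

    train_steps and seed are assumed defaults for illustration.
    """
    rng = np.random.default_rng(seed)
    # Random starting point; R maps English dimensions to French dimensions.
    R = rng.random((X.shape[1], Y.shape[1]))
    for _ in range(train_steps):
        gradient = compute_gradient(X, Y, R)
        R -= learning_rate * gradient  # R_new = R_old - alpha * g
    return R
```

Running a fixed number of steps, rather than until the loss crosses a threshold, follows the reasoning in the optional note above.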

Expected Output:

Calculate transformation matrix R

Using the training set, find the transformation matrix \(\mathbf{R}\) by calling the function align_embeddings().

NOTE: The code cell below will take a few minutes to fully execute (~3 mins)

Expected Output

2.2 Testing the translation

k-Nearest neighbors algorithm

k-NN is a method which takes a vector as input and finds the other vectors in the dataset that are closest to it.

The ‘k’ is the number of “nearest neighbors” to find (e.g. k=2 finds the closest two neighbors).

Searching for the translation embedding

Since we’re approximating the translation function from English to French embeddings by a linear transformation matrix \(\mathbf{R}\), most of the time we won’t get the exact embedding of a French word when we transform embedding \(\mathbf{e}\) of some particular English word into the French embedding space.

This is where \(k\)-NN becomes really useful! By using \(1\)-NN with \(\mathbf{eR}\) as input, we can search for an embedding \(\mathbf{f}\) (as a row) in the matrix \(\mathbf{Y}\) which is the closest to the transformed vector \(\mathbf{eR}\).

Cosine similarity

The cosine similarity between vectors \(u\) and \(v\) is calculated as the cosine of the angle between them. The formula is

$$ \cos(u,v)=\frac{u\cdot v}{\left\|u\right\|\left\|v\right\|} $$

\(\cos(u,v)\) = \(1\) when \(u\) and \(v\) lie on the same line and have the same direction.

\(\cos(u,v)\) is \(-1\) when they have exactly opposite directions.

\(\cos(u,v)\) is \(0\) when the vectors are orthogonal (perpendicular) to each other.

Note: Distance and similarity are pretty much opposite things.

We can obtain a distance metric from cosine similarity, but cosine similarity itself can’t be used directly as a distance metric.

When the cosine similarity increases (towards \(1\) ), the “distance” between the two vectors decreases (towards \(0\) ).

We can define the cosine distance between \(u\) and \(v\) as

$$ d_{\text{cos}}(u,v)=1-\cos(u,v) $$
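A small sketch of both quantities, using \(\cos(u,v) = \frac{u \cdot v}{\|u\|\|v\|}\) and \(d_{\text{cos}} = 1 - \cos\). The notebook provides its own `cosine_similarity`; this version is a stand-in for illustration, and `cosine_distance` is a hypothetical helper name. The three calls reproduce the same-direction, opposite-direction, and orthogonal cases listed above.

```python
import numpy as np


def cosine_similarity(u, v):
    """cos(u, v) = (u . v) / (||u|| * ||v||)."""
    return np.dot(u, v) / (np.linalg.norm(u) * np.linalg.norm(v))


def cosine_distance(u, v):
    """d_cos(u, v) = 1 - cos(u, v); small when the vectors point the same way."""
    return 1.0 - cosine_similarity(u, v)


u = np.array([1.0, 2.0])
print(cosine_similarity(u, 3 * u))                  # same direction: 1 (up to rounding)
print(cosine_similarity(u, -u))                     # opposite direction: -1 (up to rounding)
print(cosine_similarity(u, np.array([-2.0, 1.0])))  # orthogonal: 0
```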

Exercise 05: Complete the function nearest_neighbor()

Its inputs are:

- a vector v,

- a set of possible nearest neighbor candidates,

- k, the number of nearest neighbors to find.

The distance metric should be based on cosine similarity.

The cosine_similarity function is already implemented and imported for you. Its arguments are two vectors, and it returns the cosine of the angle between them.

Iterate over rows in candidates, and save the similarities between the current row and vector v in a Python list. Take care that the similarities are in the same order as the row vectors of candidates.

Now you can use numpy argsort to sort the indices for the rows of candidates.

- numpy.argsort sorts values from most negative to most positive (smallest to largest)

- The candidates that are nearest to 'v' should have the highest cosine similarity

- To reverse the order of the result of numpy.argsort to get the element with highest cosine similarity as the first element of the array you can use tmp[::-1]. This reverses the order of an array. Then, you can extract the first k elements.
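A minimal sketch of the steps above. `cosine_similarity` is redefined here only so the block runs standalone (the notebook imports its own). The example `v` and `candidates` are made-up inputs; with them, the call prints the rows `[[2 0 1] [1 0 5] [9 9 9]]`.

```python
import numpy as np


def cosine_similarity(u, v):
    return np.dot(u, v) / (np.linalg.norm(u) * np.linalg.norm(v))


def nearest_neighbor(v, candidates, k=1):
    """Return the row indices of the k candidates most cosine-similar to v."""
    # One similarity per row, in the same order as the rows of candidates.
    similarity_l = [cosine_similarity(v, row) for row in candidates]

    # argsort is ascending; reverse it so the most similar index comes first.
    sorted_ids = np.argsort(similarity_l)[::-1]
    return sorted_ids[:k]


v = np.array([1, 0, 1])
candidates = np.array([[1, 0, 5], [-2, 5, 3], [2, 0, 1], [6, -9, 5], [9, 9, 9]])
print(candidates[nearest_neighbor(v, candidates, 3)])
```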

Expected Output:

[[2 0 1] [1 0 5] [9 9 9]]

Test your translation and compute its accuracy

Exercise 06: Complete the function test_vocabulary, which takes in the English embedding matrix \(X\), the French embedding matrix \(Y\), and the \(R\) matrix and returns the accuracy of translations from \(X\) to \(Y\) by \(R\).

Iterate over the transformed English word embeddings and check whether the closest French word vector belongs to the French word that is the actual translation.

Obtain an index of the closest French embedding by using nearest_neighbor (with argument k=1 ), and compare it to the index of the English embedding you have just transformed.

Keep track of the number of times you get the correct translation.

Calculate accuracy as

$$ \text{accuracy}=\frac{\#(\text{correct predictions})}{\#(\text{total predictions})} $$

Let’s see how your translation mechanism works on unseen data:

You managed to translate words from one language to another with almost 56% accuracy, without ever having seen those words during training, by using some basic linear algebra and learning a mapping of words from one language to another!

3. LSH and document search

In this part of the assignment, you will implement a more efficient version of k-nearest neighbors using locality sensitive hashing. You will then apply this to document search.

Process the tweets and represent each tweet as a vector (represent a document with a vector embedding).

Use locality sensitive hashing and k nearest neighbors to find tweets that are similar to a given tweet.

3.1 Getting the document embeddings

Bag-of-words (BOW) document models

Text documents are sequences of words.

The ordering of words makes a difference. For example, sentences “Apple pie is better than pepperoni pizza.” and “Pepperoni pizza is better than apple pie” have opposite meanings due to the word ordering.

However, for some applications, ignoring the order of words can allow us to train an efficient and still effective model.

This approach is called the bag-of-words document model.

Document embeddings

A document embedding is created by summing up the embeddings of all words in the document.

If we don’t know the embedding of some word, we can ignore that word.

Exercise 07: Complete the get_document_embedding() function.

The function get_document_embedding() encodes an entire document as a “document” embedding.

It takes in a document (as a string) and a dictionary, en_embeddings.

It processes the document, and looks up the corresponding embedding of each word.

It then sums them up and returns the sum of all word vectors of that processed tweet.

- You can handle missing words more easily by using the `get()` method of the Python dictionary instead of the bracket notation (i.e. "[ ]").

- The default value for a missing word should be the zero vector. NumPy will broadcast a simple 0 scalar into a vector of zeros during the summation.

- Alternatively, skip the addition if a word is not in the dictionary.

- You can use your `process_tweet()` function which allows you to process the tweet. The function just takes in a tweet and returns a list of words.
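A minimal sketch of the summation, under stated assumptions: `process_tweet()` is not reproduced here, so a hypothetical `simple_tokenize()` (lowercased letter runs) stands in for it, and the embedding dimension is read from the first entry of `en_embeddings`. Missing words use `get(word, 0)`, relying on the broadcasting described above.

```python
import re

import numpy as np


def simple_tokenize(tweet):
    """Hypothetical stand-in for process_tweet(): lowercase word tokens only."""
    return re.findall(r"[a-z']+", tweet.lower())


def get_document_embedding(tweet, en_embeddings, process=simple_tokenize):
    """Sum the embeddings of all known words in the tweet (bag of words)."""
    dim = len(next(iter(en_embeddings.values())))  # assumed: all vectors share one size
    doc_embedding = np.zeros(dim)
    for word in process(tweet):
        # Unknown words add the scalar 0, which broadcasts to a zero vector.
        doc_embedding += en_embeddings.get(word, 0)
    return doc_embedding
```

Starting from an explicit zero vector (rather than the scalar 0) keeps the return value a vector even when no word in the tweet has an embedding.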

Expected output:

Exercise 08

Store all document vectors into a dictionary

Now, let’s store all the tweet embeddings into a dictionary. Implement get_document_vecs().
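A minimal sketch, assuming `get_document_vecs()` returns both the (m, d) matrix of stacked embeddings and a dictionary from tweet index to embedding; the name `index_to_embedding` is hypothetical. A simplified whitespace-token `get_document_embedding()` is included only so the block runs on its own.

```python
import numpy as np


def get_document_embedding(doc, en_embeddings):
    """Simplified stand-in: whitespace tokens instead of process_tweet()."""
    dim = len(next(iter(en_embeddings.values())))
    doc_embedding = np.zeros(dim)
    for word in doc.lower().split():
        doc_embedding += en_embeddings.get(word, 0)
    return doc_embedding


def get_document_vecs(all_docs, en_embeddings):
    """Embed every document; return an (m, d) matrix and an index -> embedding dict."""
    index_to_embedding = {}
    document_vec_l = []
    for i, doc in enumerate(all_docs):
        doc_embedding = get_document_embedding(doc, en_embeddings)
        index_to_embedding[i] = doc_embedding  # dictionary keyed by tweet position
        document_vec_l.append(doc_embedding)
    # Row i of the matrix is the embedding of document i.
    return np.vstack(document_vec_l), index_to_embedding
```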

3.2 Looking up the tweets

Now you have a matrix of dimension (m, d), where m is the number of tweets (10,000) and d is the dimension of the embeddings (300). Now you will input a tweet and use cosine similarity to see which tweet in our corpus is most similar to your tweet.
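This brute-force lookup compares the query embedding against all m rows at once. The helper name `most_similar_index` is hypothetical. As an added assumption, all-zero rows (tweets with no known words) are given similarity 0 instead of dividing by zero.

```python
import numpy as np


def most_similar_index(query_vec, document_vecs):
    """Row index of document_vecs with the highest cosine similarity to query_vec."""
    norms = np.linalg.norm(document_vecs, axis=1) * np.linalg.norm(query_vec)
    # Avoid division by zero: a zero norm becomes inf, so that similarity is 0.
    norms = np.where(norms == 0, np.inf, norms)
    sims = document_vecs @ query_vec / norms
    return int(np.argmax(sims))
```

This scans every tweet for each query, which is the cost that the LSH approach in the next section tries to avoid.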

3.3 Finding the most similar tweets with LSH

You will now implement locality sensitive hashing (LSH) to identify the most similar tweet.

Instead of looking at all 10,000 vectors, you can just search a subset to find its nearest neighbors.

Let’s say your data points are plotted like this:

You can divide the vector space into regions and search within one region for nearest neighbors of a given vector.

Choosing the number of planes

Each plane divides the space into \(2\) parts.

So \(n\) planes divide the space into \(2^{n}\) hash buckets.
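One common way to turn \(n\) planes into \(2^{n}\) buckets is random-hyperplane hashing, sketched here under stated assumptions: each plane passes through the origin and is represented by its normal vector (a column of `planes`), and the side of each plane that a vector falls on supplies one bit of the hash. The function name `hash_value_of_vector` is illustrative.

```python
import numpy as np


def hash_value_of_vector(v, planes):
    """Map v to one of 2**n_planes buckets by which side of each plane it lies on.

    planes: (d, n_planes) array; column i is the normal vector of plane i.
    """
    sides = np.dot(v, planes) >= 0  # one True/False per plane
    # Read the n sides as the bits of a binary number in [0, 2**n_planes).
    return int(sum(2**i * int(bit) for i, bit in enumerate(sides)))


rng = np.random.default_rng(0)
planes = rng.normal(size=(300, 10))  # 10 random planes in a 300-d space
print(hash_value_of_vector(rng.normal(size=300), planes))  # some bucket in [0, 1024)
```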

We want to organize 10,000 document vectors into buckets so that every bucket has about \(16\) vectors.

For that we need \(\frac{10000}{16}=625\) buckets.

We’re interested in \(n\), the number of planes, such that \(2^{n}= 625\). Now we can calculate \(n=\log_{2}625 \approx 9.29\), which we round up to \(n = 10\).
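The same arithmetic in a few lines of Python, as a quick check. Rounding up with `math.ceil` guarantees at least 625 buckets.

```python
import math

n_vectors = 10_000
vectors_per_bucket = 16  # target bucket size from the text

n_buckets = n_vectors / vectors_per_bucket  # 625.0
n_planes = math.ceil(math.log2(n_buckets))  # log2(625) is about 9.29, rounded up to 10

print(n_buckets, n_planes, 2 ** n_planes)   # 625.0 10 1024
```

With \(n = 10\) there are actually \(1024\) buckets, so on average each holds slightly fewer than 10 vectors instead of 16.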

3.4 Getting the hash number for a vector

For each vector, we need to compute a number associated with that vector (its hash value) in order to assign it to a “hash bucket”.

Hyperplanes in vector spaces