
Experimental Design

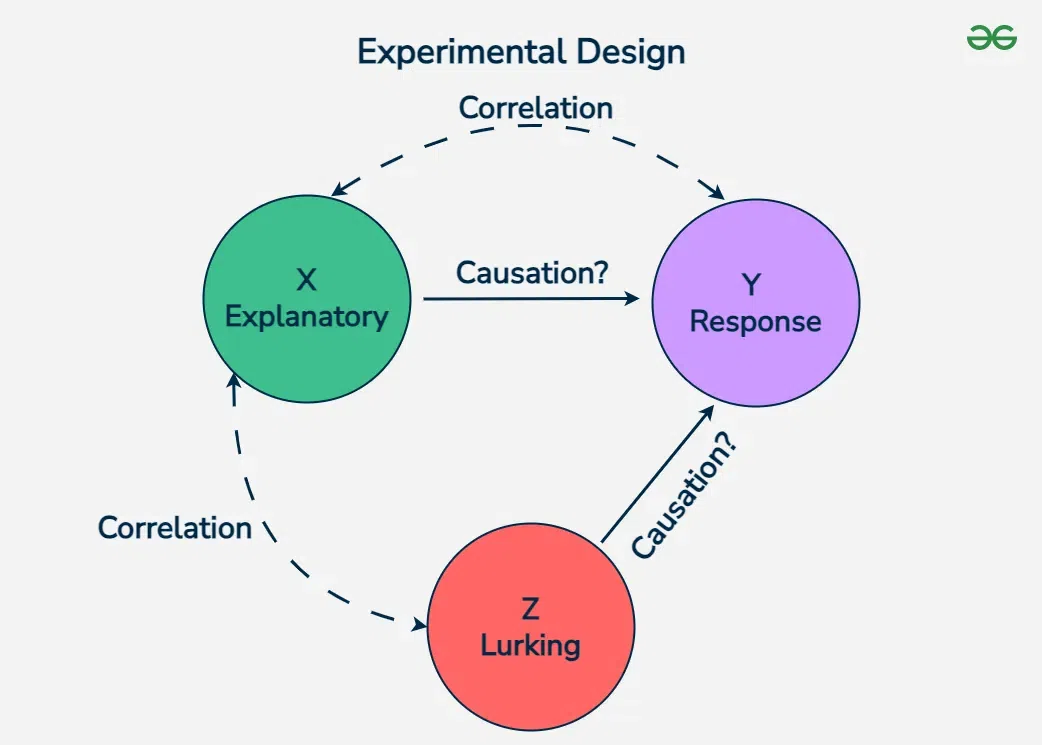

Experimental design is an essential part of research methodology because it underpins the validity and reliability of scientific studies. It is a scientific, logical, and planned way of arranging tests and deciding how they will be conducted, so that hypotheses can be tested and conclusions drawn. It sets out the procedure for controlling the variables and conditions that may influence a study's outcome, which reduces bias, makes data collection more efficient, and improves the quality of the results.

What is Experimental Design?

Experimental design is the strategy used to conduct experiments that test hypotheses and arrive at valid conclusions. The process comprises formulating research questions, selecting variables, specifying the experimental conditions, and setting a protocol for data collection and analysis. Its importance lies in its ability to prevent bias, reduce variability, and increase the precision of results, giving studies high internal validity. With a sound experimental design, researchers can generate valid results that may generalize to other settings, which helps advance knowledge in many fields.

Definition of Experimental Design

Experimental design is a systematic method of conducting experiments in which variables are manipulated in a structured way in order to test hypotheses and draw conclusions based on empirical evidence.

Types of Experimental Design

Experimental design encompasses various approaches to conducting research studies, each tailored to address specific research questions and objectives. The primary types of experimental design include:

- Pre-experimental Research Design

- True Experimental Research Design

- Quasi-Experimental Research Design

- Statistical Experimental Design

Pre-experimental Research Design

A preliminary approach in which one or more groups are observed after a treatment or intervention is applied, to determine whether further investigation is warranted. It is often employed when limited information is available or when researchers seek initial insights into a topic. Pre-experimental designs lack random assignment and, in most cases, a true control group, which makes it difficult to establish causal relationships.

Classifications:

- One-Shot Case Study

- One-Group Pretest-Posttest Design

- Static-Group Comparison

True-experimental Research Design

The true-experimental research design involves the random assignment of participants to experimental and control groups to establish cause-and-effect relationships between variables. It is used to determine the impact of an intervention or treatment on the outcome of interest. A true-experimental design should satisfy the following requirements:

Factors to Satisfy:

- Random Assignment

- Control Group

- Experimental Group

- Pretest-Posttest Measures

Quasi-Experimental Design

A quasi-experimental design is an alternative to the true-experimental design when randomly assigning participants to groups is not possible or desirable. It allows comparisons between groups without random assignment and can still provide useful evidence about causal relationships in real-world settings, although that evidence is weaker than the evidence from a randomized experiment. Quasi-experimental designs are typically used when random assignment is impractical or unethical, for example, in educational or community-based interventions.

Statistical Experimental Design

Statistical experimental design, also known as design of experiments (DOE), is a branch of statistics that focuses on planning, conducting, analyzing, and interpreting controlled tests to evaluate the factors that may influence a particular outcome or process. The primary goals are to determine cause-and-effect relationships and to identify the optimal conditions for achieving the desired results. The details are discussed below.

Design of Experiments: Goals & Settings

The goals and settings for design of experiments are as follows:

- Identifying Research Objectives: Clearly defining the goals and hypotheses of the experiment is crucial for designing an effective study.

- Selecting Appropriate Variables: Determining the independent, dependent, and control variables based on the research question.

- Considering Experimental Conditions: Identifying the settings and constraints under which the experiment will be conducted.

- Ensuring Validity and Reliability: Designing the experiment to minimize threats to internal and external validity.

Developing an Experimental Design

Developing an experimental design involves a systematic process of planning and structuring the study to achieve the research objectives. Here are the key steps:

- Define the research question and hypotheses

- Identify the independent and dependent variables

- Determine the experimental conditions and treatments

- Select the appropriate experimental design (e.g., completely randomized, randomized block, factorial)

- Determine the sample size and sampling method

- Establish protocols for data collection and analysis

- Conduct a pilot study to test the feasibility and refine the design

- Implement the experiment and collect data

- Analyze the data using appropriate statistical methods

- Interpret the results and draw conclusions
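The sample-size step can be made concrete with the standard normal-approximation formula for comparing two group means, n = 2(z₁₋α/₂ + z₁₋β)²σ²/δ² participants per group, where δ is the smallest difference worth detecting and σ is the common standard deviation. The sketch below is illustrative only: it assumes a two-sided test, two equal-sized groups, and a known σ, and `sample_size_per_group` is a hypothetical helper, not part of any library.

```python
import math
from statistics import NormalDist


def sample_size_per_group(delta: float, sigma: float,
                          alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate participants per group needed to detect a mean
    difference `delta` between two groups with common standard
    deviation `sigma` (two-sided test, normal approximation)."""
    z = NormalDist()                      # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)    # critical value for a two-sided test
    z_beta = z.inv_cdf(power)             # quantile matching the desired power
    n = 2 * ((z_alpha + z_beta) * sigma / delta) ** 2
    return math.ceil(n)                   # round up to whole participants


print(sample_size_per_group(delta=0.5, sigma=1.0))
```

With a difference of half a standard deviation (δ = 0.5, σ = 1), this gives 63 participants per group; a calculation based on the t distribution rather than the normal approximation gives a slightly larger number.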

Preplanning, Defining, and Operationalizing for Design of Experiments

Preplanning, defining, and operationalizing are crucial steps in the design of experiments. Preplanning involves identifying the research objectives, selecting variables, and determining the experimental conditions. Defining refers to clearly stating the research question, hypotheses, and operational definitions of the variables. Operationalizing involves translating the conceptual definitions into measurable terms and establishing protocols for data collection.

For example, in a study investigating the effect of different fertilizers on plant growth, the researcher would preplan by selecting the independent variable (fertilizer type), dependent variable (plant height), and control variables (soil type, sunlight exposure). The research question would be defined as "Does the type of fertilizer affect the height of plants?" The operational definitions would include specific methods for measuring plant height and applying the fertilizers.

Randomized Block Design

Randomized block design is an experimental approach where subjects or units are grouped into blocks based on a known source of variability, such as location, time, or individual characteristics. The treatments are then randomly assigned to the units within each block. This design helps control for confounding factors, reduce experimental error, and increase the precision of estimates. By blocking, researchers can account for systematic differences between groups and focus on the effects of the treatments being studied.

Consider a study investigating the effectiveness of two teaching methods (A and B) on student performance. The steps involved in a randomized block design would include:

- Identifying blocks based on student ability levels.

- Randomly assigning students within each block to either method A or B.

- Conducting the teaching interventions.

- Analyzing the results within each block to account for variability.
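The blocking procedure in the teaching-methods example can be sketched as below. This is a minimal illustration under assumptions: the roster, the ability labels, and the helper name `randomized_block_assignment` are hypothetical, and treatments are balanced within each block by shuffling the block and then alternating A and B.

```python
import random
from collections import Counter, defaultdict


def randomized_block_assignment(students, treatments=("A", "B"), seed=None):
    """Randomly assign treatments separately within each block.

    students: list of (student_id, block) pairs, where block is the known
    source of variability (here, a hypothetical ability level).
    Returns a dict mapping student_id -> treatment.
    """
    rng = random.Random(seed)
    blocks = defaultdict(list)
    for student_id, block in students:
        blocks[block].append(student_id)

    assignment = {}
    for members in blocks.values():
        rng.shuffle(members)  # randomize order inside this block only
        for i, student_id in enumerate(members):
            # Cycling through the treatments keeps every block balanced.
            assignment[student_id] = treatments[i % len(treatments)]
    return assignment


# Hypothetical roster: 12 students spread over three ability blocks of four.
roster = [(f"s{i:02d}", level)
          for i, level in enumerate(["low", "medium", "high"] * 4)]
plan = randomized_block_assignment(roster, seed=1)
for level in ("low", "medium", "high"):
    print(level, Counter(plan[sid] for sid, lv in roster if lv == level))
```

Because randomization happens separately inside each block, every ability level ends up with equal numbers of students on each method, so ability differences cannot pile up in one treatment group.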

Completely Randomized Design

A completely randomized design is a straightforward experimental approach where treatments are randomly assigned to experimental units without any specific blocking. This design is suitable when there are no known sources of variability that need to be controlled for. In a completely randomized design, all units have an equal chance of receiving any treatment, and the treatments are distributed independently. This design is simple to implement and analyze but may be less efficient than a randomized block design when there are known sources of variability.
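For contrast, a completely randomized design shuffles treatments across all units at once, with no blocks. The sketch below applies this to the fertilizer study described earlier, assuming 12 hypothetical pots and three hypothetical fertilizers (F1 to F3) with four pots each; `completely_randomized_design` is an illustrative helper name.

```python
import random
from collections import Counter


def completely_randomized_design(units, treatments, reps, seed=None):
    """Assign each treatment to `reps` units completely at random (no blocking)."""
    if len(units) != len(treatments) * reps:
        raise ValueError("need exactly len(treatments) * reps units")
    # One label per unit, with each treatment repeated `reps` times.
    labels = [t for t in treatments for _ in range(reps)]
    random.Random(seed).shuffle(labels)  # every arrangement is equally likely
    return dict(zip(units, labels))


plants = [f"plant{i}" for i in range(1, 13)]   # hypothetical pots
fertilizers = ["F1", "F2", "F3"]               # hypothetical treatments
layout = completely_randomized_design(plants, fertilizers, reps=4, seed=7)
print(Counter(layout.values()))
```

Equal replication (four pots per fertilizer) is a design choice here, not a requirement of the completely randomized design; unequal group sizes are also possible.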

Between-Subjects vs Within-Subjects Experimental Designs

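In a between-subjects design, each participant is exposed to only one condition, and the comparison is made between different groups of participants. In a within-subjects design, each participant is exposed to every condition, and the comparison is made within the same participants; this removes individual differences from the comparison but requires controlling for order effects such as practice or fatigue.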

Design of Experiments Examples

Examples of the two designs are given below:

Between-Subjects Design Example:

In a study comparing the effectiveness of two teaching methods on student performance, one group of students (Group A) is taught using Method 1, while another group (Group B) is taught using Method 2. The performance of both groups is then compared to determine the impact of the teaching methods on student outcomes.

Within-Subjects Design Example:

In a study assessing the effects of different exercise routines on fitness levels, each participant undergoes all exercise routines over a period of time. Participants' fitness levels are measured before and after each routine to evaluate the impact of the exercises on their fitness levels.

Application of Experimental Design

The applications of experimental design include:

- Product Testing: Experimental design is used to evaluate the effectiveness of new products or interventions.

- Medical Research: It helps in testing the efficacy of treatments and interventions in controlled settings.

- Agricultural Studies: Experimental design is crucial in testing new farming techniques or crop varieties.

- Psychological Experiments: It is employed to study human behavior and cognitive processes.

- Quality Control: Experimental design aids in optimizing processes and improving product quality.

In scientific research, experimental design is a crucial step that outlines an effective strategy for carrying out a meaningful experiment and drawing correct conclusions. Careful control and coordination of experiments increase reliability and validity and allow knowledge to grow across many fields. Applying sound experimental design principles helps ensure that experimental outcomes are both valid and impactful.


FAQs on Experimental Design

What is experimental design in math?

Experimental design refers to planning experiments to gather data, deciding how to control the variables, and drawing sensible conclusions from the outcomes.

What are the advantages of the experimental method in math?

The advantages of the experimental method include control of variables, the ability to establish cause-and-effect relationships, and the use of statistical tools for proper data analysis.

What is the main purpose of experimental design?

The goal of experimental design is to describe the nature of variables and examine how changes in one or more variables impact the outcome of the experiment.

What are the limitations of experimental design?

Limitations include potential biases, the complexity of controlling all variables, ethical considerations, and the fact that some experiments can be costly or impractical.

What are the statistical tools used in experimental design?

Statistical tools used in experimental design include ANOVA, regression analysis, t-tests, chi-square tests, and factorial designs.
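As an illustration of the first tool on that list, the sketch below computes a one-way ANOVA F statistic by hand from its definition (the between-group mean square divided by the within-group mean square). The data are toy values and the function name is hypothetical; it returns the statistic and its degrees of freedom but not a p-value.

```python
def one_way_anova(*groups):
    """Return (F, df_between, df_within) for a one-way ANOVA."""
    values = [x for g in groups for x in g]
    grand_mean = sum(values) / len(values)
    means = [sum(g) / len(g) for g in groups]
    # Spread of the group means around the grand mean, weighted by group size.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    # Spread of each observation around its own group mean.
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    df_between = len(groups) - 1
    df_within = len(values) - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Toy responses for three treatment groups.
print(one_way_anova([1, 2, 3], [4, 5, 6], [7, 8, 9]))
```

For the toy data this prints `(27.0, 2, 6)`. If SciPy is installed, `scipy.stats.f_oneway(*groups)` computes the same F statistic and also reports a p-value.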


Experimental Design – Types, Methods, Guide


Experimental design is a structured approach used to conduct scientific experiments. It enables researchers to explore cause-and-effect relationships by controlling variables and testing hypotheses. This guide explores the types of experimental designs, common methods, and best practices for planning and conducting experiments.

Experimental Design

Experimental design refers to the process of planning a study to test a hypothesis, where variables are manipulated to observe their effects on outcomes. By carefully controlling conditions, researchers can determine whether specific factors cause changes in a dependent variable.

Key Characteristics of Experimental Design:

- Manipulation of Variables: The researcher intentionally changes one or more independent variables.

- Control of Extraneous Factors: Other variables are kept constant to avoid interference.

- Randomization: Subjects are often randomly assigned to groups to reduce bias.

- Replication: Repeating the experiment or having multiple subjects helps verify results.

Purpose of Experimental Design

The primary purpose of experimental design is to establish causal relationships by controlling for extraneous factors and reducing bias. Experimental designs help:

- Test Hypotheses: Determine if there is a significant effect of independent variables on dependent variables.

- Control Confounding Variables: Minimize the impact of variables that could distort results.

- Generate Reproducible Results: Provide a structured approach that allows other researchers to replicate findings.

Types of Experimental Designs

Experimental designs can vary based on the number of variables, the assignment of participants, and the purpose of the experiment. Here are some common types:

1. Pre-Experimental Designs

These designs are exploratory and lack random assignment, often used when strict control is not feasible. They provide initial insights but are less rigorous in establishing causality.

- Example (one-shot case study): A training program is provided, and participants’ knowledge is tested afterward, without a pretest.

- Example (one-group pretest-posttest design): A group is tested on reading skills, receives instruction, and is tested again to measure improvement.

2. True Experimental Designs

True experiments involve random assignment of participants to control or experimental groups, providing high levels of control over variables.

- Example: A new drug’s efficacy is tested with patients randomly assigned to receive the drug or a placebo.

- Example: Participants are randomly assigned to two groups; one group receives a treatment, the other receives no intervention, and both groups are observed afterward.

3. Quasi-Experimental Designs

Quasi-experiments lack random assignment but still aim to determine causality by comparing groups or time periods. They are often used when randomization isn’t possible, such as in natural or field experiments.

- Example: Schools receive different curricula, and students’ test scores are compared before and after implementation.

- Example: Traffic accident rates are recorded for a city before and after a new speed limit is enforced.

4. Factorial Designs

Factorial designs test the effects of multiple independent variables simultaneously. This design is useful for studying the interactions between variables.

- Example: Studying how caffeine (variable 1) and sleep deprivation (variable 2) affect memory performance.

- Example: An experiment studying the impact of age, gender, and education level on technology usage.
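Enumerating the treatment combinations of a full factorial design is a Cartesian product of the factor levels. The sketch below uses Python's `itertools.product` on the caffeine and sleep-deprivation example; the specific levels ("none"/"200 mg", "rested"/"deprived") and the helper name `full_factorial` are assumptions for illustration.

```python
from itertools import product


def full_factorial(factors):
    """Return every combination of factor levels as a list of dicts."""
    names = list(factors)
    return [dict(zip(names, levels)) for levels in product(*factors.values())]


# Hypothetical levels for the caffeine x sleep-deprivation example.
factors = {
    "caffeine": ["none", "200 mg"],
    "sleep": ["rested", "deprived"],
}
for condition in full_factorial(factors):
    print(condition)
```

With two levels per factor this yields the four conditions of a 2 × 2 design; adding a third two-level factor would double the count to eight, which is why full factorials grow quickly as factors are added.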

5. Repeated Measures Design

In repeated measures designs, the same participants are exposed to different conditions or treatments. This design is valuable for studying changes within subjects over time.

- Example: Measuring reaction time in participants before, during, and after caffeine consumption.

- Example: Testing two medications, with each participant receiving both but in a different sequence.

Methods for Implementing Experimental Designs

Randomization

- Purpose: Ensures each participant has an equal chance of being assigned to any group, reducing selection bias.

- Method: Use random number generators or assignment software to allocate participants randomly.

Blinding

- Purpose: Prevents participants or researchers from knowing which group (experimental or control) participants belong to, reducing bias.

- Method: Implement single-blind (participants unaware) or double-blind (both participants and researchers unaware) procedures.

Control Groups

- Purpose: Provides a baseline for comparison, showing what would happen without the intervention.

- Method: Include a group that does not receive the treatment but otherwise undergoes the same conditions.

Counterbalancing

- Purpose: Controls for order effects in repeated measures designs by varying the order of treatments.

- Method: Assign different sequences to participants, ensuring that each condition appears equally often in each position.

Replication

- Purpose: Ensures reliability by repeating the experiment or including multiple participants within groups.

- Method: Increase sample size or repeat studies with different samples or in different settings.
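The counterbalancing method above can be sketched with a cyclic Latin square, in which each condition appears exactly once in each serial position across the set of orders. The helper names are hypothetical, and this is only one way to counterbalance: a cyclic square balances position but not which condition immediately precedes which (designs such as Williams squares address that).

```python
def latin_square_orders(conditions):
    """Cyclic Latin square: row r is the condition list rotated by r places."""
    k = len(conditions)
    return [[conditions[(row + pos) % k] for pos in range(k)] for row in range(k)]


def assign_orders(participants, conditions):
    """Give participants the Latin-square orders in rotation."""
    orders = latin_square_orders(conditions)
    return {p: orders[i % len(orders)] for i, p in enumerate(participants)}


for order in latin_square_orders(["A", "B", "C"]):
    print(order)

# Two hypothetical medications, four hypothetical participants.
print(assign_orders(["p1", "p2", "p3", "p4"], ["drug1", "drug2"]))
```

For the two-medication example, this reduces to the familiar AB/BA crossover, with participants alternating between the two sequences. Using a multiple of k participants keeps every order equally represented.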

Steps to Conduct an Experimental Design

1. Define the Research Question and Hypothesis

Clearly state what you intend to discover or prove through the experiment. A strong hypothesis guides the experiment’s design and variable selection.

2. Identify the Variables

- Independent Variable (IV): The factor manipulated by the researcher (e.g., amount of sleep).

- Dependent Variable (DV): The outcome measured (e.g., reaction time).

- Control Variables: Factors kept constant to prevent interference with results (e.g., time of day for testing).

3. Choose the Experimental Design

Choose a design type that aligns with your research question, hypothesis, and available resources, for example, a randomized controlled trial (RCT) for a medical study or a factorial design for studying interactions among factors.

4. Assign Participants to Groups

Randomly assign participants to experimental or control groups. Ensure control groups are similar to experimental groups in all respects except for the treatment received.

5. Implement Controls

Randomize the assignment and, where possible, apply blinding to minimize potential bias.

6. Collect Data

Follow a consistent procedure for each group and collect data systematically. Record observations and manage any unexpected events or variables that may arise.

7. Analyze the Data

Use appropriate statistical methods, such as t-tests, ANOVA, or regression analysis, to test for significant differences between groups.

8. Interpret the Results

Determine whether the results support your hypothesis and analyze any trends, patterns, or unexpected findings. Discuss possible limitations and implications of your results.
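As one example of the analysis step, the sketch below computes Welch's two-sample t statistic (a t-test variant that does not assume equal group variances) together with its Welch-Satterthwaite degrees of freedom, using only Python's standard library. The reaction-time data are made up for illustration, the function name is hypothetical, and no p-value is returned.

```python
import math
from statistics import mean, variance


def welch_t_test(a, b):
    """Return Welch's t statistic and its approximate degrees of freedom."""
    va = variance(a) / len(a)  # squared standard error of mean(a)
    vb = variance(b) / len(b)  # squared standard error of mean(b)
    t = (mean(a) - mean(b)) / math.sqrt(va + vb)
    # Welch-Satterthwaite approximation for the degrees of freedom.
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df


# Hypothetical reaction times (ms) for a rested and a sleep-deprived group.
control = [250, 262, 248, 255, 259]
deprived = [281, 275, 290, 268, 284]
t, df = welch_t_test(control, deprived)
print(f"t = {t:.2f}, df = {df:.1f}")
```

If SciPy is available, `scipy.stats.ttest_ind(control, deprived, equal_var=False)` runs the same Welch test and also returns a p-value.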

Examples of Experimental Design in Research

- Medicine: Testing a new drug’s effectiveness through a randomized controlled trial, where one group receives the drug and another receives a placebo.

- Psychology: Studying the effect of sleep deprivation on memory using a within-subject design, where participants are tested under different sleep conditions.

- Education: Comparing teaching methods in a quasi-experimental design by measuring students’ performance before and after implementing a new curriculum.

- Marketing: Using a factorial design to examine the effects of advertisement type and frequency on consumer purchase behavior.

- Environmental Science: Testing the impact of a pollution reduction policy through a time series design, recording pollution levels before and after implementation.

Experimental design is fundamental to conducting rigorous and reliable research, offering a systematic approach to exploring causal relationships. With various types of designs and methods, researchers can choose the most appropriate setup to answer their research questions effectively. By applying best practices, controlling variables, and selecting suitable statistical methods, experimental design supports meaningful insights across scientific, medical, and social research fields.


About the author

Muhammad Hassan

Researcher, Academic Writer, Web developer


Lesson 1: Introduction to Design of Experiments

Overview

In this course we will pretty much cover the textbook - all of the concepts and designs included. I think we will have plenty of examples to look at and experience to draw from.

Please note: the main topics listed in the syllabus follow the chapters in the book.

A word of advice regarding the analyses: the prerequisites for this course are STAT 501 - Regression Methods and STAT 502 - Analysis of Variance. However, the focus of the course is on the design and not on the analysis. Thus, one can successfully complete this course without these prerequisites, with just STAT 500 - Applied Statistics, for instance, but it will require much more work, and one will have less appreciation of the subtleties involved in the analysis. You might say the course is more conceptual than math-oriented.

Text Reference: Montgomery, D. C. (2019). Design and Analysis of Experiments, 10th Edition, John Wiley & Sons. ISBN 978-1-119-59340-9

What is the Scientific Method?

Do you remember learning about this back in high school or junior high even? What were those steps again?

Decide what phenomenon you wish to investigate. Specify how you can manipulate the factor and hold all other conditions fixed, to ensure that these extraneous conditions aren't influencing the response you plan to measure.

Then measure your chosen response variable at several (at least two) settings of the factor under study. If changing the factor causes the phenomenon to change, then you conclude that there is indeed a cause-and-effect relationship at work.

How many factors are involved when you do an experiment? Some say two - perhaps this is a comparative experiment? Perhaps there is a treatment group and a control group? If you have a treatment group and a control group then, in this case, you probably only have one factor with two levels.

How many of you have baked a cake? What are the factors involved to ensure a successful cake? Factors might include preheating the oven, baking time, ingredients, amount of moisture, baking temperature, etc.-- what else? You probably follow a recipe so there are many additional factors that control the ingredients - i.e., a mixture. In other words, someone did the experiment in advance! What parts of the recipe did they vary to make the recipe a success? Probably many factors, temperature and moisture, various ratios of ingredients, and presence or absence of many additives. Now, should one keep all the factors involved in the experiment at a constant level and just vary one to see what would happen? This is a strategy that works but is not very efficient. This is one of the concepts that we will address in this course.

Upon completion of this lesson, you should be able to:

- understand the issues and principles of Design of Experiments (DOE),

- understand that experimentation is a process,

- list the guidelines for designing experiments, and

- recognize the key historical figures in DOE.

JMP | Statistical Discovery.™ From SAS.

Statistics Knowledge Portal

A free online introduction to statistics

Design of experiments

What is design of experiments?

Design of experiments (DOE) is a systematic, efficient method that enables scientists and engineers to study the relationship between multiple input variables (aka factors) and key output variables (aka responses). It is a structured approach for collecting data and making discoveries.

When to use DOE?

- To determine whether a factor, or a collection of factors, has an effect on the response.

- To determine whether factors interact in their effect on the response.

- To model the behavior of the response as a function of the factors.

- To optimize the response.
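To make the first two uses concrete: in a two-level factorial design with factor levels coded −1 (low) and +1 (high), each main effect is the average response at the factor's high level minus the average at its low level, and the interaction is estimated the same way using the product of the codes. The sketch below assumes a 2 × 2 design with one run per combination and hypothetical yields; the function name is illustrative.

```python
def factorial_effects_2x2(runs):
    """Estimate main effects and the interaction from a 2 x 2 factorial.

    runs: list of (a, b, y) with a and b coded -1 (low) or +1 (high).
    Each effect = mean response where its contrast is +1
                  minus mean response where it is -1.
    """
    def effect(contrast):
        high = [y for a, b, y in runs if contrast(a, b) > 0]
        low = [y for a, b, y in runs if contrast(a, b) < 0]
        return sum(high) / len(high) - sum(low) / len(low)

    return {
        "A": effect(lambda a, b: a),       # main effect of factor A
        "B": effect(lambda a, b: b),       # main effect of factor B
        "AB": effect(lambda a, b: a * b),  # A x B interaction
    }


# Hypothetical yields (%): A = temperature, B = time, one run per corner.
runs = [(-1, -1, 60), (+1, -1, 72), (-1, +1, 54), (+1, +1, 68)]
print(factorial_effects_2x2(runs))  # {'A': 13.0, 'B': -5.0, 'AB': 1.0}
```

For these made-up numbers, raising A increases the average response by 13 points, raising B lowers it by 5, and the AB estimate of 1 is small relative to the main effects. With a single run per combination there is no replication, so judging whether any effect is real would require repeated runs or additional assumptions.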

Ronald Fisher first introduced four enduring principles of DOE in 1926: the factorial principle, randomization, replication, and blocking. In the past, generating and analyzing these designs relied primarily on hand calculation; only relatively recently have practitioners started using computer-generated designs for a more effective and efficient DOE.

Why use DOE?

DOE is useful:

- For driving knowledge of cause and effect between factors.

- For experimenting with all factors at the same time.

- For running trials that span the potential experimental region for our factors.

- For understanding the combined effect of the factors.

To illustrate the importance of DOE, let’s look at what happens without it.

Experiments are likely to be carried out via trial and error or one-factor-at-a-time (OFAT) method.

Trial-and-error method

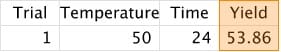

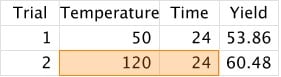

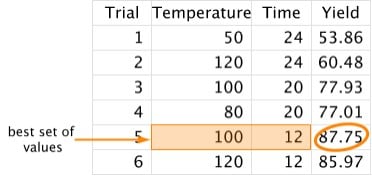

Test different settings of two factors and see what the resulting yield is.

Say we want to determine the optimal temperature and time settings that will maximize yield through experiments.

Here is how the experiment looks using the trial-and-error method:

1. Conduct a trial at starting values for the two variables and record the yield.

2. Adjust one or both values based on our results.

3. Repeat Step 2 until we think we've found the best set of values.

As you can tell, the cons of trial-and-error are:

- Inefficient, unstructured and ad hoc (worse still if carried out without subject-matter knowledge).

- Unlikely to find the optimum set of conditions across two or more factors.

One factor at a time (OFAT) method

Change the value of the one factor, then measure the response, repeat the process with another factor.

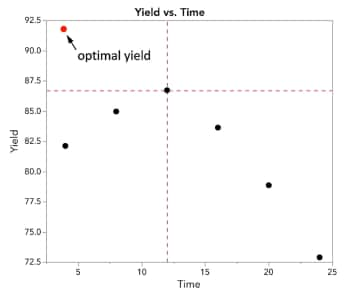

In the same experiment, searching for the temperature and time that maximize yield, this is how the experiment looks using the OFAT method:

1. Start with temperature: Find the temperature resulting in the highest yield, between 50 and 120 degrees.

1a. Run a total of eight trials. Each trial increases temperature by 10 degrees (i.e., 50, 60, 70 ... all the way to 120 degrees).

1b. Hold time fixed at 20 hours as a controlled variable.

1c. Measure yield for each batch.

2. Run the second experiment by varying time, to find the optimal value of time (between 4 and 24 hours).

2a. Run a total of six trials. Each trial increases time by 4 hours (i.e., 4, 8, 12… up to 24 hours).

2b. Hold temperature fixed at 90 degrees as a controlled variable.

2c. Measure yield for each batch.

3. After a total of 14 trials, we’ve identified that the maximum yield (86.7%) occurs when:

- Temperature is at 90 degrees; Time is at 12 hours.
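The OFAT plan above can be written down as a plain list of (temperature, time) runs. This is a minimal Python sketch: the function name `ofat_plan` is hypothetical, and it only enumerates the settings given in the text rather than modeling any yields. It makes the coverage problem easy to see, because every run shares either time = 20 hours or temperature = 90 degrees.

```python
def ofat_plan(temps, times, fixed_time, fixed_temp):
    """Return the OFAT runs as (temperature, time) pairs.

    Phase 1 varies temperature with time held at fixed_time;
    phase 2 varies time with temperature held at fixed_temp
    (the best temperature found in phase 1).
    """
    phase1 = [(t, fixed_time) for t in temps]
    phase2 = [(fixed_temp, h) for h in times]
    return phase1 + phase2


temps = list(range(50, 121, 10))  # 50, 60, ..., 120 degrees -> 8 trials
times = list(range(4, 25, 4))     # 4, 8, ..., 24 hours -> 6 trials
runs = ofat_plan(temps, times, fixed_time=20, fixed_temp=90)

print(len(runs))  # 14
# No run combines a high temperature with a short time, so that whole
# corner of the temperature-time region is never explored.
print(any(temp >= 110 and hours <= 8 for temp, hours in runs))  # False
```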

OFAT is clearly a more structured approach than trial and error.

But there’s one major problem with OFAT: what if the optimal temperature and time settings lie in a region that the OFAT runs never explored?

In that case, our OFAT experiments would have missed the optimal temperature and time settings entirely.

Therefore, OFAT’s main drawback is:

- We’re unlikely to find the optimum set of conditions across two or more factors.

Compare where our trial-and-error and OFAT experiments placed their trials:

Notice that none of them has trials conducted at a low temperature and time AND near optimum conditions.

What went wrong in the experiments?

- We didn't simultaneously change the settings of both factors.

- We didn't conduct trials throughout the potential experimental region.

The result was a lack of understanding of the combined effect of the two variables on the response. In fact, the two factors did interact in their effect on the response!

A more effective and efficient approach to experimentation is to use statistically designed experiments (DOE).

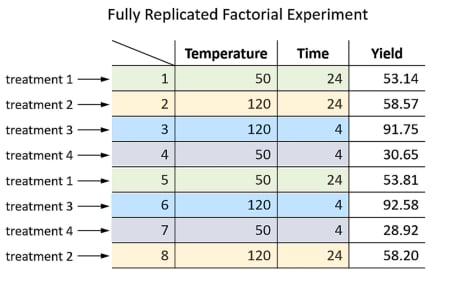

Applying a full factorial DOE to the same example

1. Experiment with two factors, each at two levels.

These four trials form the corners of the design space.

2. Run all possible combinations of factor levels in random order, to average out the effects of lurking variables.

3. (Optional) Replicate the entire design by running each treatment twice, to estimate experimental error.

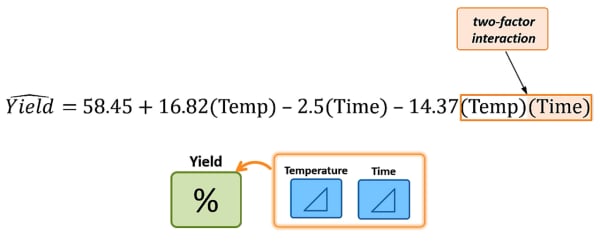

4. Analyzing the results enables us to build a statistical model that estimates the individual effects (temperature and time) as well as their interaction.

The model lets us visualize and explore the interaction between the factors, for example what the interaction looks like at temperature = 120 and time = 4.

You can visualize and explore your model, and find the most desirable settings for your factors, using the JMP Prediction Profiler.
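Steps 1 to 3 can be sketched in Python as follows. This is an illustrative sketch, not JMP's implementation: the helper `full_factorial_2level` is hypothetical, and the low/high levels (50/120 degrees, 4/24 hours) are assumptions borrowed from the OFAT ranges above, since the text does not state the levels used.

```python
import itertools
import random


def full_factorial_2level(factors, replicates=1, seed=None):
    """Build a replicated two-level full factorial design in random run order.

    factors: dict mapping factor name -> (low, high).
    Returns a list of dicts, one per run, already shuffled.
    """
    names = list(factors)
    # Every combination of low/high settings: 2**k treatments.
    treatments = [dict(zip(names, combo))
                  for combo in itertools.product(*(factors[n] for n in names))]
    # Repeat each treatment `replicates` times to allow an error estimate.
    runs = [dict(t) for t in treatments for _ in range(replicates)]
    # Randomize the run order to average out lurking variables.
    random.Random(seed).shuffle(runs)
    return runs


# Hypothetical levels, chosen only for illustration.
design = full_factorial_2level({"temperature": (50, 120), "time": (4, 24)},
                               replicates=2, seed=1)
for i, run in enumerate(design, start=1):
    print(i, run)
```

Fixing `seed` makes the random order reproducible for planning; leaving it as `None` gives a fresh order each time.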

Summary: DOE vs. OFAT/Trial-and-Error

- DOE requires fewer trials.

- DOE is more effective in finding the best settings to maximize yield.

- DOE enables us to derive a statistical model to predict results as a function of the two factors and their combined effect.
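To make the "statistical model" concrete, here is a minimal sketch that estimates the two main effects and the interaction from a 2x2 factorial and turns them into a prediction equation in coded units (-1 = low, +1 = high). The four yields are hypothetical numbers invented for illustration, and the function names are assumptions; in practice, replicated data and DOE software would also provide standard errors.

```python
def effects_2x2(y):
    """Main effects and interaction for a 2x2 factorial in coded units.

    y maps (A, B) settings, each -1 (low) or +1 (high), to the mean response.
    Each effect is the average response where its contrast is +1 minus the
    average response where it is -1.
    """
    a = (y[(1, -1)] + y[(1, 1)] - y[(-1, -1)] - y[(-1, 1)]) / 2
    b = (y[(-1, 1)] + y[(1, 1)] - y[(-1, -1)] - y[(1, -1)]) / 2
    ab = (y[(-1, -1)] + y[(1, 1)] - y[(1, -1)] - y[(-1, 1)]) / 2
    return a, b, ab


def fit_model(y):
    """Return a predictor y_hat(x1, x2) = b0 + b1*x1 + b2*x2 + b12*x1*x2.

    In coded units each regression coefficient is half the corresponding effect.
    """
    b0 = sum(y.values()) / len(y)
    a, b, ab = effects_2x2(y)

    def predict(x1, x2):
        return b0 + (a / 2) * x1 + (b / 2) * x2 + (ab / 2) * x1 * x2

    return predict


# Hypothetical mean yields (%) at the four corners, keyed by (temperature, time).
y = {(-1, -1): 60.0, (1, -1): 80.0, (-1, 1): 70.0, (1, 1): 66.0}
print(effects_2x2(y))  # (8.0, -2.0, -12.0)
predict = fit_model(y)
print(predict(0, 0))   # 69.0, the prediction at the design center
```

With these made-up numbers, raising temperature increases yield by 20 points when time is short but lowers it by 4 points when time is long; the interaction estimate of -12 summarizes that difference. An OFAT study would see only one of those two slices.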

Design of Experiments - DoE

Design of Experiments, or DoE, is a systematic approach to planning, conducting, and analyzing experiments.

The goal of Design of Experiments is to explore how various input variables, called factors, affect an output variable, known as the response. In more complex systems, there may also be multiple responses to analyze.

Depending on the field of study, the system being investigated could be a process, a machine, or a product. But it can also be a human being, for example, when studying the effects of medication.

Each factor has multiple levels. For example, the factor 'lubrication' might have levels such as oil and grease, while the factor 'temperature' could have levels like low, medium, and high.

Factors

These are the input variables or parameters that are changed or manipulated in the experiment. Each factor can have different levels, which represent the different values it can take.

Examples: temperature, pressure, material type, machine speed.

Levels

These are the specific values that a factor can take in an experiment.

Examples: For the factor "temperature," the levels could be 100°C, 150°C, and 200°C.
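Because a full factorial design runs every combination of levels, the number of runs is the product of the level counts. Here is a small sketch using the two example factors from this section (lubrication with 2 levels, temperature with 3); the dictionary layout is just one illustrative choice.

```python
import itertools

# Factors and levels taken from the examples above.
factors = {
    "lubrication": ["oil", "grease"],
    "temperature_C": [100, 150, 200],
}

# Every combination of one level per factor.
runs = list(itertools.product(*factors.values()))
print(len(runs))  # 2 * 3 = 6
for run in runs:
    print(dict(zip(factors, run)))
```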

Response (or Output)

This is the measured outcome or result that changes in response to the factors being manipulated.

Examples: yield, strength, time to failure, customer satisfaction.

When is a DoE used?

There are two primary applications of Design of Experiments (DOE): the first is to identify the key influencing factors, determining which factors have a significant impact on the outcome. The second is to optimize the input variables, aiming to either minimize or maximize the response, depending on the desired result.

Different experimental designs are selected based on the objective: screening designs are used to identify significant factors, while optimization designs are employed to determine the optimal input variables.

Screening Designs

Screening Designs are used early in an experiment to identify the most important factors from a larger set of potential variables. These designs, such as Fractional Factorial Designs or Plackett-Burman Designs, focus on determining which factors have a significant effect on the response, often with a reduced number of runs.

Optimization Designs

Optimization Designs, on the other hand, are used after the important factors have been identified. These designs are used to refine and optimize the levels of the significant factors to achieve an ideal response. Common examples include full factorial designs, central composite designs (CCD), and Box-Behnken designs (BBD).

The DoE Process

Of course, both steps can be carried out sequentially. Let's take a look at the process of a DoE project: planning, screening, optimization, and verification.

The first step, planning, involves three key tasks:

1. Gaining a clear understanding of the problem or system.

2. Identifying one or more responses.

3. Determining the factors that could significantly influence the response.

Identifying potential factors that influence the response can be quite complex and time-consuming. To support this, the team can create an Ishikawa (fishbone) diagram.

Screening Design

The second step is Screening. If there are many factors that could have an influence (typically more than 4-6 factors), screening experiments should be conducted to reduce the number of factors.

Why is this important? The number of factors to be studied has a major impact on the number of experiments required.

In a full factorial design, the number of experiments is n = 2^k, where n is the number of experiments and k is the number of factors. For example, three factors require at least 8 experiments, 7 factors require at least 128, and 10 factors require at least 1024.

Note that these figures apply to designs in which each factor has only two levels; with more levels, more experiments are needed.
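The run counts above come from multiplying together the number of levels of every factor. A one-function sketch (the name `full_factorial_runs` is hypothetical) reproduces the two-level figures and shows how additional levels increase the count:

```python
from math import prod


def full_factorial_runs(levels_per_factor):
    """Number of runs in one replicate of a full factorial design."""
    return prod(levels_per_factor)


for k in (3, 7, 10):
    print(k, full_factorial_runs([2] * k))  # 8, 128, 1024

# Mixed or higher level counts multiply the same way, e.g. three factors at 3 levels:
print(full_factorial_runs([3, 3, 3]))  # 27
```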

Depending on how complex each experiment is, it may be worthwhile to choose screening designs when there are 4 or more factors. Screening designs include fractional factorial designs and the Plackett-Burman design.
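To illustrate how a fractional factorial saves runs, here is a minimal sketch of the standard 2^(4-1) half fraction with generator D = ABC, in coded units (-1 = low, +1 = high). The function name is hypothetical; DoE software would normally generate and randomize such a design for you.

```python
import itertools


def half_fraction_2_4_1():
    """Coded runs (A, B, C, D) of a 2^(4-1) fractional factorial design.

    A, B and C form a full 2^3 factorial; D is set by the generator D = ABC,
    giving the defining relation I = ABCD (a resolution IV design).
    """
    return [(a, b, c, a * b * c)
            for a, b, c in itertools.product((-1, 1), repeat=3)]


design = half_fraction_2_4_1()
print(len(design))  # 8 runs instead of the 16 needed for a full 2^4 design

# The price of halving the runs is aliasing: the AB interaction column is
# identical to the CD column, so those two effects cannot be separated.
ab = [a * b for a, b, c, d in design]
cd = [c * d for a, b, c, d in design]
print(ab == cd)  # True
```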

Optimization

Once significant factors have been identified using screening experiments, and the number of factors has hopefully been reduced, further experiments can be conducted.

The obtained data can then be used to create a regression model, which helps determine the input variables that optimize the response.
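A common way to do this is to fit a second-order (quadratic) regression model and solve for the point where its gradient is zero. The sketch below assumes NumPy is available; the data are a hypothetical, noiseless 3x3 grid in coded units, used only so the result can be checked, and the helper names are illustrative.

```python
import numpy as np


def fit_quadratic(x1, x2, y):
    """Least-squares coefficients of a full second-order model in two factors:
    y = b0 + b1*x1 + b2*x2 + b11*x1**2 + b22*x2**2 + b12*x1*x2
    """
    X = np.column_stack([np.ones_like(x1), x1, x2, x1**2, x2**2, x1 * x2])
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return coef


def stationary_point(coef):
    """Point where both partial derivatives of the fitted surface are zero.

    Returns the point and whether it is a maximum (Hessian negative definite).
    """
    b0, b1, b2, b11, b22, b12 = coef
    hessian = np.array([[2 * b11, b12], [b12, 2 * b22]])
    point = np.linalg.solve(hessian, -np.array([b1, b2]))
    is_max = bool(np.all(np.linalg.eigvalsh(hessian) < 0))
    return point, is_max


# Hypothetical data: a 3x3 grid in coded units and a noiseless "true" surface
# whose maximum is at (0.4, -0.3). Real responses would include noise.
levels = np.array([-1.0, 0.0, 1.0])
g1, g2 = np.meshgrid(levels, levels)
x1, x2 = g1.ravel(), g2.ravel()
y = 80 - 3 * (x1 - 0.4) ** 2 - 2 * (x2 + 0.3) ** 2

coef = fit_quadratic(x1, x2, y)
point, is_max = stationary_point(coef)
print(point, is_max)
```

The stationary point is expressed in coded units and has to be converted back to real settings before it is tested in practice.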

Verification

After optimization, the final step is verification. Here, further runs test whether the calculated optimal input settings actually produce the desired response.

Detailed Steps in Conducting DOE

Problem Definition: Clearly define the objective of the experiment. Identify the response variable and the factors that may affect it.

Select Factors, Levels, and Ranges: Determine the factors that will be studied and the specific levels at which each factor will be set. Consider practical constraints and prior knowledge.

Choose an Experimental Design: Select an appropriate design based on the number of factors, the complexity of the interactions, and resource availability (time, cost).

Conduct the Experiment: Perform the experiment according to the design. It is essential to randomize the order of the experimental runs to avoid systematic errors.

Collect Data: Gather data on the response variable for each experimental run.

Analyze the Data: Use statistical methods such as analysis of variance (ANOVA), regression, or specialized DOE software to analyze the results. The goal is to understand the effects of factors and their interactions on the response.

Draw Conclusions and Make Decisions: Based on the analysis, draw conclusions about which factors are significant, how they interact, and how the process or product can be optimized.

Validate the Results: Confirm the findings by conducting additional experiments or applying the findings to real-world situations. Validation helps confirm that the conclusions hold beyond the original experimental runs.

Examples of Experimental Designs

There are various experimental designs; here are some of the most common ones, which can easily be generated with the DoE software DATAtab.

Full Factorial Designs: These designs test all possible combinations of the factors and provide detailed information about main effects and interactions.

Fractional Factorial Designs: These designs use only a fraction of the possible combinations to increase efficiency while still obtaining essential information.

Plackett-Burman Designs: A screening design that aims to quickly identify which factors have the greatest effect.

Response Surface Designs: These include, for example, Central Composite Designs (CCD), which are used to find optimal settings, especially in cases where there are nonlinear relationships between factors and the response.
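A central composite design adds axial (star) points and center points to a two-level factorial so that curvature can be estimated. The sketch below builds a rotatable CCD in coded units, assuming a full factorial core and alpha = (2^k)^(1/4); the five center points for k = 2 are a common choice but an assumption here, and the function name is hypothetical.

```python
import itertools


def central_composite(k, n_center=1):
    """Rotatable central composite design in coded units.

    Factorial corners at +/-1, 2k axial (star) points at +/-alpha on each
    axis with alpha = (2**k) ** 0.25, plus n_center center points.
    """
    alpha = (2 ** k) ** 0.25
    corners = [list(p) for p in itertools.product((-1.0, 1.0), repeat=k)]
    axial = []
    for i in range(k):
        for sign in (-1.0, 1.0):
            point = [0.0] * k
            point[i] = sign * alpha  # one factor at +/-alpha, the rest at center
            axial.append(point)
    center = [[0.0] * k for _ in range(n_center)]
    return corners + axial + center, alpha


design, alpha = central_composite(2, n_center=5)
print(len(design), round(alpha, 4))  # 13 1.4142
```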

Key Aspects of Experimental Design

Efficiency: DoE helps gather as much information as possible with a minimal number of experiments. This is especially important when experiments are expensive or time-consuming. Instead of testing all possible combinations of factors (as in full factorial designs), statistical methods can significantly reduce the number of experiments without losing essential information.

Factor Effects and Interactions: In an experiment, multiple factors often influence the result simultaneously. Experimental design allows the individual effect of each factor, and their interactions, to be estimated. An interaction occurs when the effect of one factor depends on the level of another, so that changing several factors together produces an effect greater or smaller than the sum of their individual effects.



Cite DATAtab: DATAtab Team (2025). DATAtab: Online Statistics Calculator. DATAtab e.U. Graz, Austria. URL https://datatab.net



Design of Experiments (DoE) – 5 Phases


A designed experiment is a type of scientific research where researchers control variables (factors) and observe their effect on the outcome variable (dependent variable).

Design of Experiments Steps

The five phases, or steps, of experimental design (DoE, design of experiments) are:

1. Planning

Careful planning and attention to detail can help you avoid pitfalls along the way. In most situations, you have limited resources for conducting experiments, so you want to obtain the best results from the minimum number of runs.

You start with a clear understanding of the problem and a well-defined purpose of the experiment.

At this stage, you would identify the potential factors (independent variables) that could be significantly affecting the response. Here you can use your past experience and subject matter expert knowledge to define relevant factors and their levels for the experiment.

In addition, you would need to ensure that the process being analyzed is under statistical control (Statistical Process Control) and that the measurement system variation is acceptable.

2. Screening

If the number of factors to be studied is large (typically more than 5), then as the first step, you would conduct screening experiments to reduce them.

The number of factors to be studied significantly impacts the number of runs. For example, if you decided during planning to study 10 factors that could impact the response, a full factorial design would require 2^10 = 1024 experimental treatments (runs). In almost all situations, an experiment with this many runs is impractical. In these situations, you would first run screening experiments to reduce the number of factors to be studied in the next step.

The following designs are typically used during the screening phase:

a) Fractional Factorial Design

b) Plackett-Burman Design

c) Definitive Screening Designs
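For the Plackett-Burman option, the classic 12-run design can be built from a single generating row by cyclic shifting, plus a final row with every factor at its low level. This sketch assumes the standard 12-run generator (+ + - + + + - - - + -); the function name is hypothetical. It screens up to 11 factors in 12 runs, compared with 2^11 = 2048 runs for a full factorial.

```python
def plackett_burman_12():
    """12-run Plackett-Burman design for up to 11 two-level factors.

    Rows 1-11 are cyclic shifts of the standard generating row; row 12
    sets every factor to its low level (-1).
    """
    generator = [1, 1, -1, 1, 1, 1, -1, -1, -1, 1, -1]
    n = len(generator)
    rows = [generator[-i:] + generator[:-i] for i in range(n)]
    rows.append([-1] * n)
    return rows


design = plackett_burman_12()
for row in design:
    print(" ".join("+" if v > 0 else "-" for v in row))
```

Every column is balanced (six highs, six lows) and every pair of columns is orthogonal, which is what allows main effects to be estimated independently. Two-factor interactions are partially aliased with main effects, so the design is suited to screening rather than modelling.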

3. Modelling

Once you have identified the significant factors using screening experiments, you will model the relationship between significant factors and the response. This is done using regression analysis.

The following designs are typically used during the modelling phase:

a) Full Factorial Design

4. Optimizing

After identifying the significant factors and modelling the relationship between factors and response, you would optimize the process conditions to achieve the desired result. This phase involves finding the best combination of factors and levels to produce the optimum output.

The following designs may be used during the optimization phase:

a) Central Composite Design

b) Box-Behnken Design
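The Box-Behnken design is easy to construct for three factors: every pair of factors is run at its four (±1, ±1) combinations while the third factor stays at its center level, and center points are added. This is a minimal sketch in coded units; the three center points are a common default but an assumption here, and the function name is hypothetical.

```python
import itertools


def box_behnken_3(n_center=3):
    """Box-Behnken design for three factors in coded units (-1, 0, +1).

    For each pair of factors, run the four (+/-1, +/-1) combinations with
    the remaining factor at its center (0); then add center points.
    """
    k = 3
    runs = []
    for i, j in itertools.combinations(range(k), 2):
        for a, b in itertools.product((-1, 1), repeat=2):
            point = [0] * k
            point[i], point[j] = a, b
            runs.append(tuple(point))
    runs.extend([(0,) * k] * n_center)
    return runs


design = box_behnken_3()
print(len(design))  # 12 edge-midpoint runs + 3 center points = 15
```

Unlike a central composite design, no run sits at a corner of the cube (all factors at ±1 at once), which can help when extreme combinations are impractical or unsafe.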

5. Verifying

Verification is the final phase of the experiment. It is conducted after the optimized condition has been identified, and it helps you confirm whether that condition is indeed optimal. If it is not, you would modify the experimental plan accordingly.

A good experiment should always begin with a clearly defined objective. A well-designed experiment will ensure that the experiment meets its objectives.

