- Increase Font Size

6 Mechanism of Speech Production

Dr. Namrata Rathore Mahanta

Learning outcome:

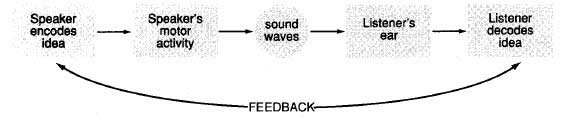

This module shall introduce the learner to the various components and processes that are at work in the production of human speech. The learner will also be introduced to the application of speech mechanism in other domains such as medical sciences and technology. After reading the module the learner will be able to distinguish speech from other forms of human communication and will be able to describe in detail the stages and processes involved in the production of human speech.

Introduction : What is speech and why it an academic discipline?

Speech is such a common aspect of human existence that its complexity is often overlooked in day to day life. Speech is the result of many interlinked intricate processes that need to be performed with precision. Speech production is an area of interest not only for language learners, language teachers, and linguists but also people working in varied domains of knowledge. The term ‘speech’ refers to the human ability to articulate thoughts in an audible form. It also refers to the formal one sided discourse delivered by an individual, on a particular topic to be heard by an audience.

The history of human existence and enterprise reveals that ‘speech’ was an empowering act. Heroes and heroines in history used ‘speech’ in clever ways to negotiate structures of power and overcome oppression. At times when the written word was an attribute of the elite and noble classes ‘speech’ was the vehicle which carried popular sentiments. In adverse times ‘speech’ was forbidden or regulated by authority. At such times poets and ordinary people sang their ‘speech’ in double meaning poems in defiance to authority. In present times the debate on an individual’s ‘right to free speech’ is often raised in varied contexts. As an academic discipline Speech Communication gained prominence in the 20th century and is taught in university departments across the globe. Departments of Speech Communication offer courses that engage with the speech interactions between people in public and private domain, in live as well as technologically mediated situations.

However, the student who peruses a study of ‘mechanism of speech production’ needs to focus primarily on the process of speech production. Therefore, the human brain and the physiological processes become the principal areas of investigation and research. Hence in this module ‘speech’ is delimited to the physiological processes which govern the production of different sounds. These include the brain, the respiratory organs, and the organs in our neck and mouth. A thorough understanding of the mechanism of speech production has helped correct speech disorders, simulate speech through machines, and develop devices for people with speech related needs. Needless to say, teachers of languages use this knowledge in the classroom in a variety of ways.

Speech and Language

In everyday parlance the terms ‘speech’ and ‘language’ are often used as synonyms. However, in academic use these two terms refer two very different things. Speech is the ‘spoken’ and ‘heard’ form of language. Language is a complex system of reception and expression of ideas and thoughts in verbal, non-verbal and written forms. Language can exist without speech but speech is meaningless without language. Language can exist in the mind in the form of a thought, on paper/screen in its orthographic form; it can exist in a gesture or action in its non-verbal form, it can also exist in a certain way of looking, winking or nodding. Thus speech is only a part of the vast entity of language. It is the verbal form of language.

Over the years Linguists have engaged themselves with the way in which speech and language exists within the human beings. They have examined the processes by which language is acquired and learnt. The role of the individual human being, the role of the society/community/the genetic or physiological attributes of the human beings all been investigated from time to time.

Ferdinand de Saussure a Swiss linguist who laid the foundation for Structuralism declared that language is imbibed by the individual within in a society or community. His lectures delivered at the University of Geneva during 1906-1911 were later collected and published in 1916 as Cours de linguistique générale . Saussure studied the relationship between speech and the evolution of language. He described language as a system of signs which exists in a pattern or structure. Saussure described language using terms such as ‘ langue ’ ‘ parole ’ and ‘langage ’. These terms are complex and cannot be directly translated. It would be misleading to equate Saussure’s ‘ langage ’ with ‘language’. However at an introductory stage these terms can be described as follows:

American linguist Avram Noam Chomsky argued that the human mind contains the innate source of language and declared that humans are born with a mind that is pre-programmed for language, i.e., humans are biologically programmed to use languages. Chomsky named this inherent human trait as ‘Innate Language’. He introduced two other significant terms: ‘Competence’ and ‘Performance’

‘Competence’ was described as the innate knowledge of language and ‘Performance’ as its actual use. Thus the concepts of ‘Innate Language’ ‘Language Competence’ and ‘Language Performance’ emerged and language came to be accepted as a cognitive attribute of humans while speech came to be accepted as one of the many forms of language communication. These ideas can be summarized in the chart given below:

In the present times speech and language are seen as interdependent and complementary attributes of humans. Current research focuses on finding the inner connections between speech and language. Consequently, the term ‘Speech and Language’ is used in most application based areas.

From Theory to Application

It is interesting to note that the knowledge of the intricacies of speech mechanism is used in many real life applications apart from Language and Linguistics. A vibrant area in Speech and Language application is ‘Speech and Language Processing’. It is used in Computational Linguistics, Natural Language Processing, Speech Therapy, Speech Recognition and many more areas. It is used to simulate speech in robots. Vocoders and Text to speech function (TTS) also makes use of speech mechanism. In Medical Sciences it is used to design therapy modules for different speech and language disorders, to develop advanced gadgets for persons with auditory needs. In Criminology it is used to recognize speech patterns of individuals and to identify manipulations in recorded speech patterns. Speech processing mechanism is also used in Music and Telecommunication in a major way.

What is Speech Mechanism?

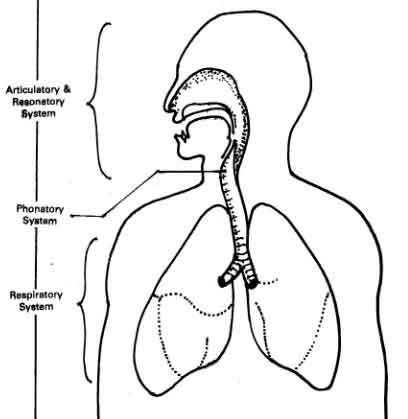

Speech mechanism is a function which starts in the brain, moves through the biological processes of respiration, phonation and articulation to produce sounds. These sounds are received and perceived through biological and neurological processes. The lungs are the primary organs involved in the respiratory stage, the larynx is involved in the phonation stage and the organs in the mouth are involved in the articulatory stage.

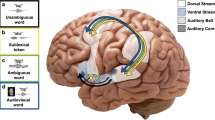

The brain plays a very important role in speech. Research on the human brain has led to identification of certain areas that are classically associated with speech. In 1861, French physician Pierre Paul Broca discovered that a particular portion of the frontal lobe governed speech production. This area has been named after him and is known as Broca’s area. Injury to this area is known to cause speech loss. In 1874, German neuropsychiatrist Carl Wernicke discovered that a particular area in the brain was responsible for speech comprehension and remembrance of words and images. At a time when brain was considered to be a single organ, Wernicke demonstrated that the brain did not function as a single organ but as a multi pronged organ with distinctive functions interconnected with neural networks. His most important contribution was the discovery that brain function was dependent on these neural networks. Today it is widely accepted that areas of the brain that are associated with speech are linked to each other through complex network of neurons and this network is mostly established after birth, through life experience, over a period of time.

It has been observed that chronology and patterning of these neural networks differ from individual to individual and also within the same individual with the passage of time or life experience. The formation of new networks outside the classically identified areas of speech has also been observed in people who have suffered brain injury at birth or through life experience. Although extensive efforts are being made to replicate or simulate the plasticity and creativity of the human brain, complete replication has not been achieved. Consequently, complete simulation of human speech mechanism remains elusive.

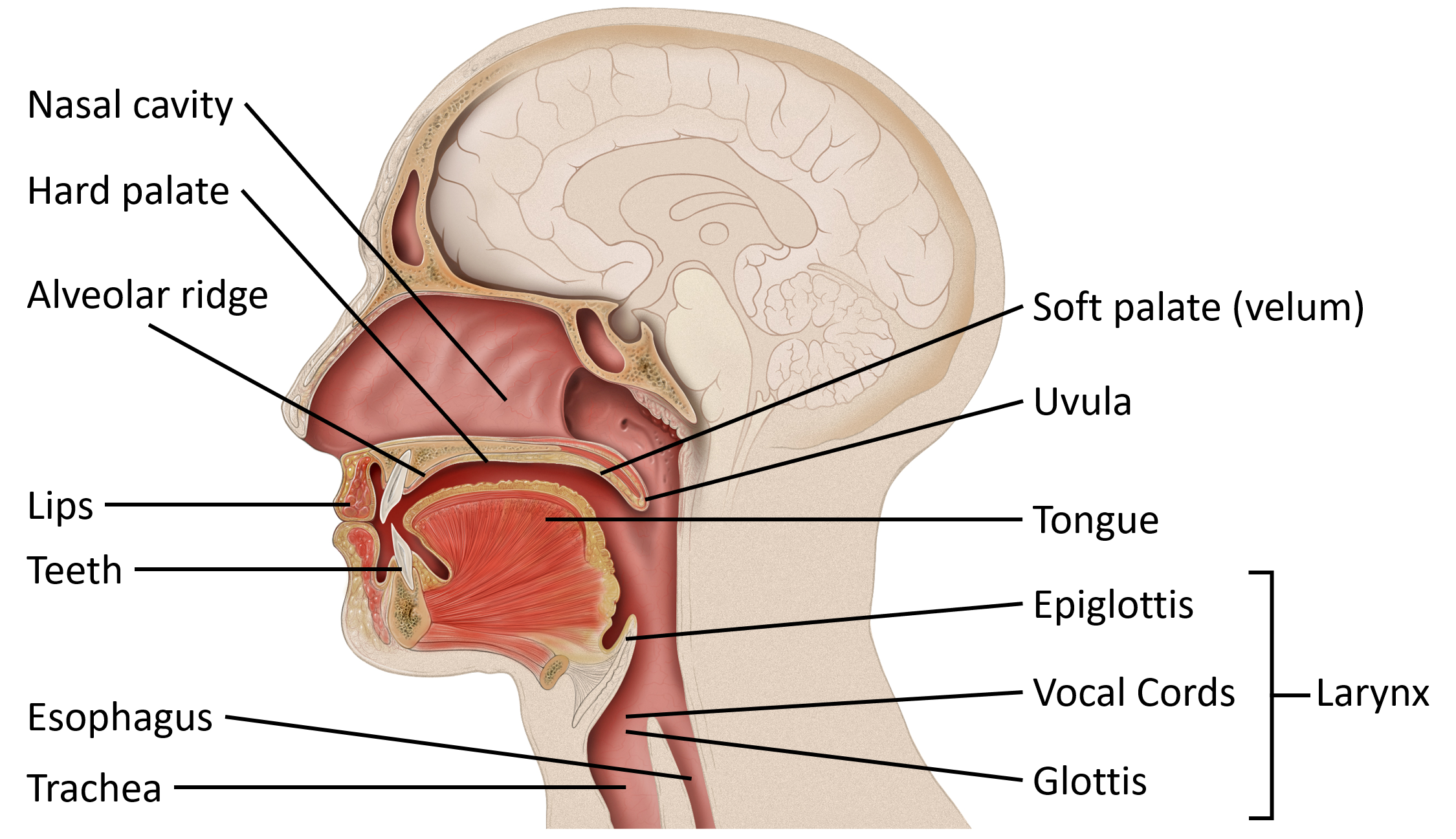

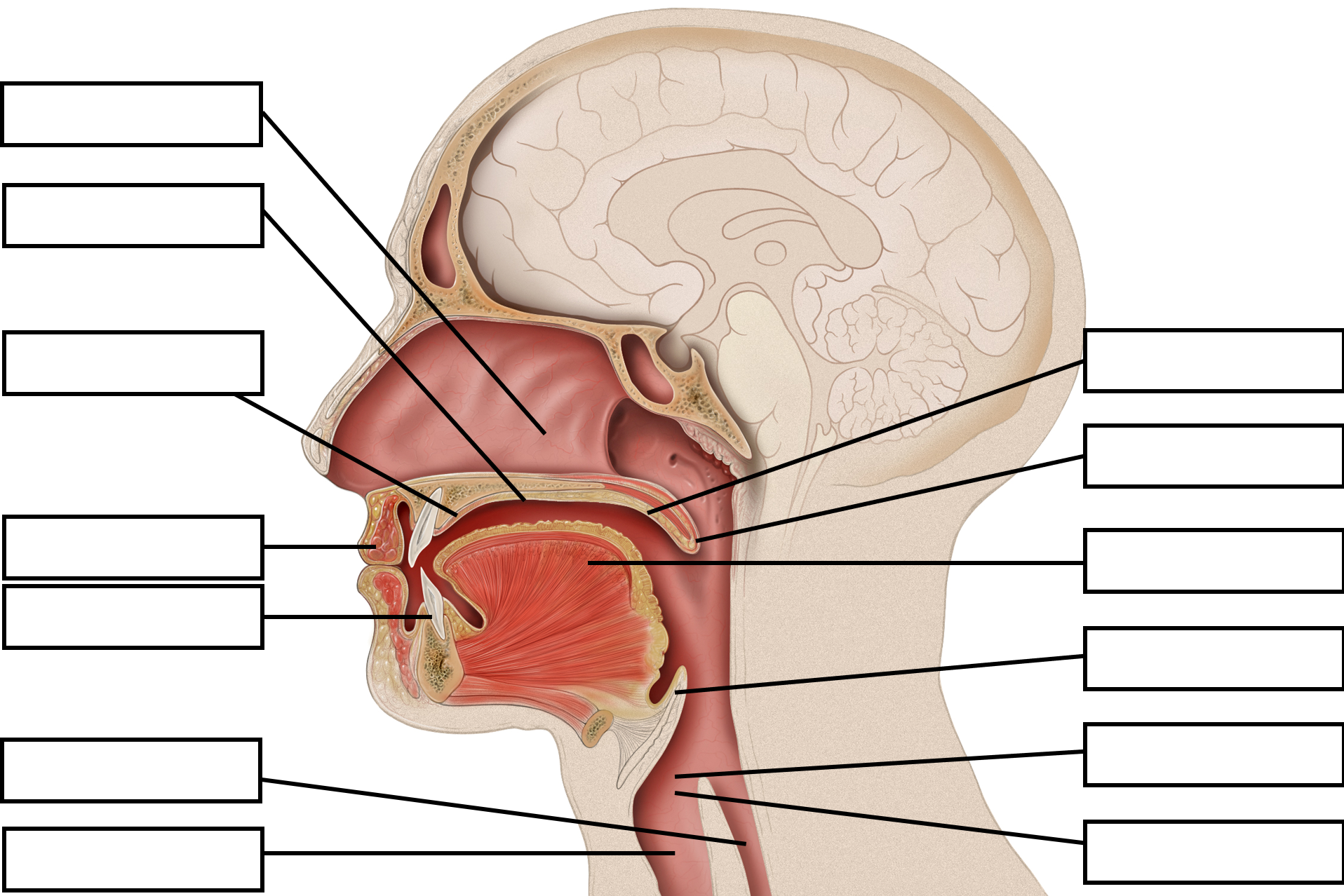

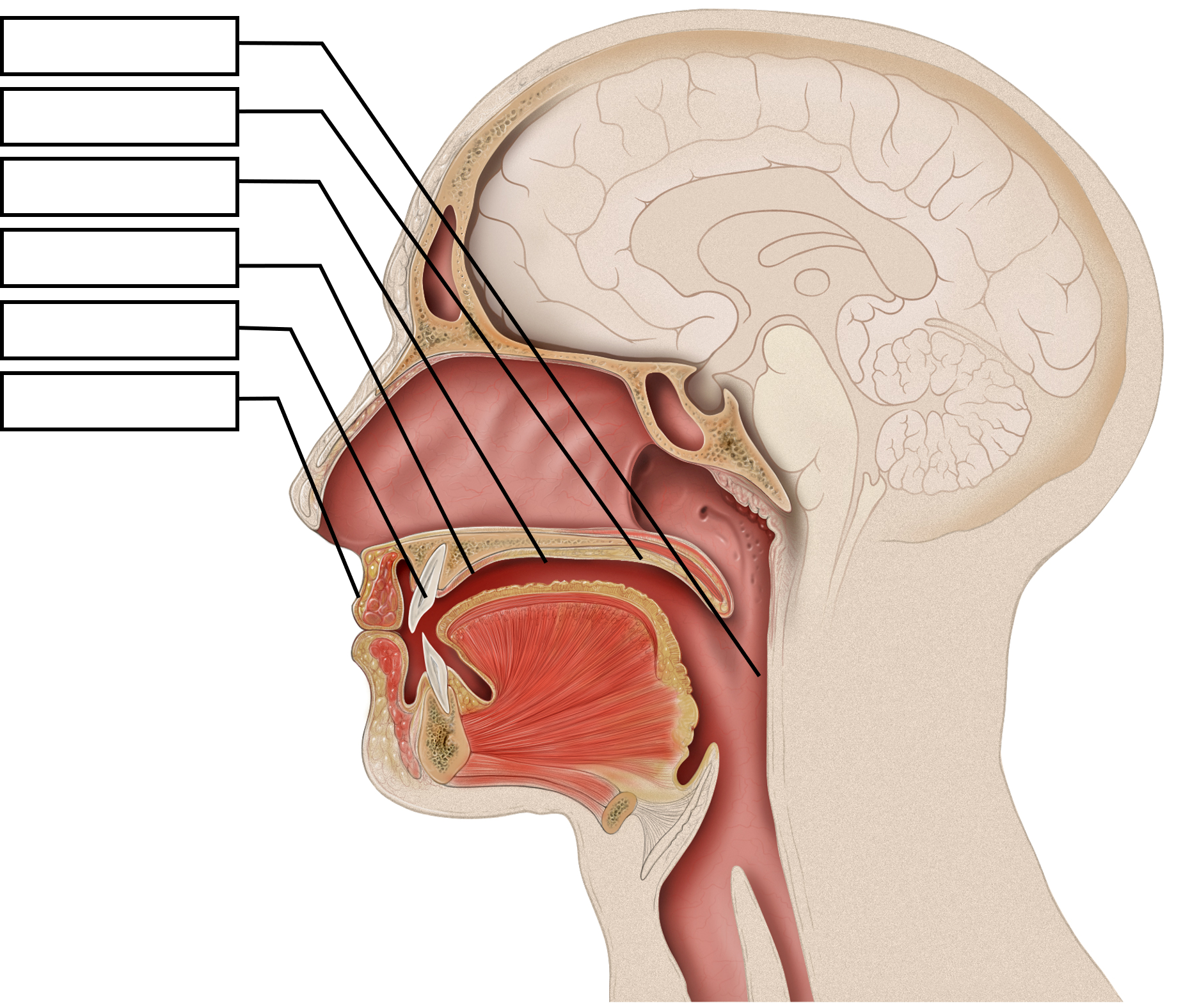

The organs of speech

In order to understand speech mechanism one needs to identify the organs used to produce speech. It is interesting to note that each of these organs has a unique life-function to perform. Their presence in the human body is not for speech production but for other primary bodily functions. In addition to primary physiological functions, these organs participate in the production of speech. Hence speech is said to be the ‘overlaid’ function of these organs. The organs of speech can be classified according to their position and function.

- The respiratory organs consist of: The Lungs and trachea. The lungs compress air and push it up the trachea.

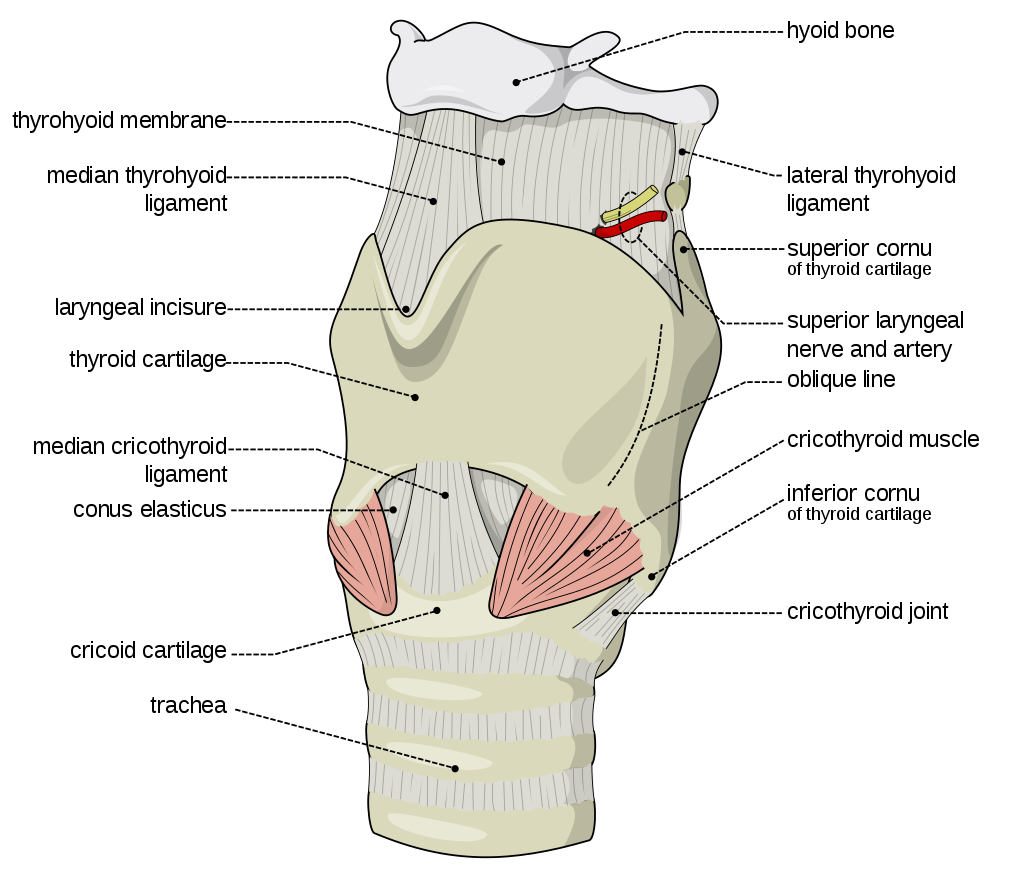

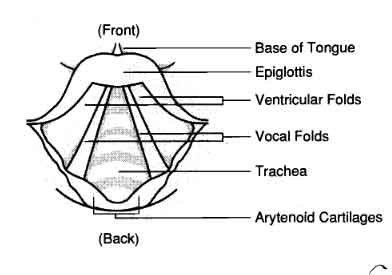

- The phonatory organs consist of the Larynx: The larynx contains two membrane- like structures called vocal cords or vocal folds. The vocal folds can come together or move apart.

- The articulatory organs consist of : lips, teeth, roof of mouth, tongue, oral and nasal cavities

The respiratory process involves the movement of air. Through muscle action of the lungs the air is compressed and pushed up to pass through the respiratory tract- trachea, larynx, pharynx, oral cavity, nasal cavity or both. While breathing in, the rib cage is expanded, the thoracic capacity is enlarged and lung volume is increased. Consequently, the air pressure in lungs drops down and the air is drawn into the lungs. While breathing out, the rib cage is contracted, the thoracic capacity is diminished and lung volume is decreased. Consequently, the air pressure in the lungs exceeds the outside pressure and air is released from the lungs to equalize it. Robert Mannel has explained the process through flowcharts and diagrammatic representations given below:

Once the air enters the pharynx, it can be expelled either through the oral passage, or through the nasal passage or through both depending upon the position of soft movable part of the roof of the mouth known as soft palate or velum.

Egressive and Ingressive Airstream: If the direction of the airstream is inward, it is termed as ‘Ingressive airstream. If the direction of the airstream is outward, it is ‘Egressive airstream’. Most languages of the world make use of Pulmonic Egressive airstream. Ingressive airstream is associated with Scandinavian languages of Northern Europe. However, no language can claim to use exclusively Ingressive or Egressive airstreams. While most languages of the world use predominantly Egressive airstreams, they are also known to use Ingressive airstreams in different situations. For extended list of use of ingressive mechanism you may visit Robert Eklund’s Ingressive Phonation and Speech page at www.ingressive.info .

Egressive process involves outward expulsion of air. Ingressive process involves inward intake of air. Egressive and Ingressive airstreams can be pulmonic (involving lungs) or non-pulmonic (involving other organs).

Non Pulmonic Airstreams: There are many languages which make use of non pulmonic airstream. In these cases the air expelled from the lungs is manipulated either in the pharyngeal cavity, or in the vocal tract, or in the oral cavity. Three major non pulmonic airstreams are:

In Ejectives, the air is trapped and compressed in the pharyngeal cavity by an obstruction in the mouth with simultaneous closure of the glottis. The larynx makes an upward movement which coincides with the removal of the obstruction causing the air to be released.

In Implosives, the air is trapped and compressed in the pharyngeal cavity by an obstruction in the mouth with simultaneous closure of the glottis. The larynx makes a downward movement which coincides with the removal of the obstruction causing the air to be sucked into the vocal tract.

In Clicks, the air is trapped and compressed in the oral cavity by lowering of the soft palate or velum and simultaneous closure of the mouth. Sudden opening causes air to be sucked in making a clicking sound. For a list of languages which use these airstream mechanisms you may visit https://community.dur.ac.uk/daniel.newman/phon10.pdf

While the process of phonation occurs before the airstream enters the oral or nasal cavity, the quality of speech is also determined by the state of the pharynx. Any irregularity in the pharynx leads to modification in speech quality.

The Phonatory Process: Inside the larynx are two membrane-like structures or folds called the vocal cords. The space between these is called the glottis. The vocal folds can be moved to varied distance. Robert Mannel has described five main positions of the vocal folds:

Voiceless: In this position the vocal folds are drawn far apart so that the air stream passes without any interference .

Breathy: Vocal folds are drawn loosely apart. The air passes making whisper like sound Voiced: Vocal folds are drawn close and are stretched. The air passes making vibrating sound.

Creaky : The vocal folds are drawn close & vibrate with maximum tension. Air passes making rough creaky sound. This sound is called ‘vocal fry’ and its use is on the rise amongst urban young women. However its sustained and habitual use is harmful.

For more details on laryngeal positions you may visit Robert Mannel’s page- http://clas.mq.edu.au/speech/phonetics/phonetics/airstream_laryngeal/laryngeal.html

You may see a small clip on the vocal fry by visiting the link – http://www.upworthy.com/what-is-vocal-fry-and-why-doesnt-anyone-care-when-men-talk- like-that

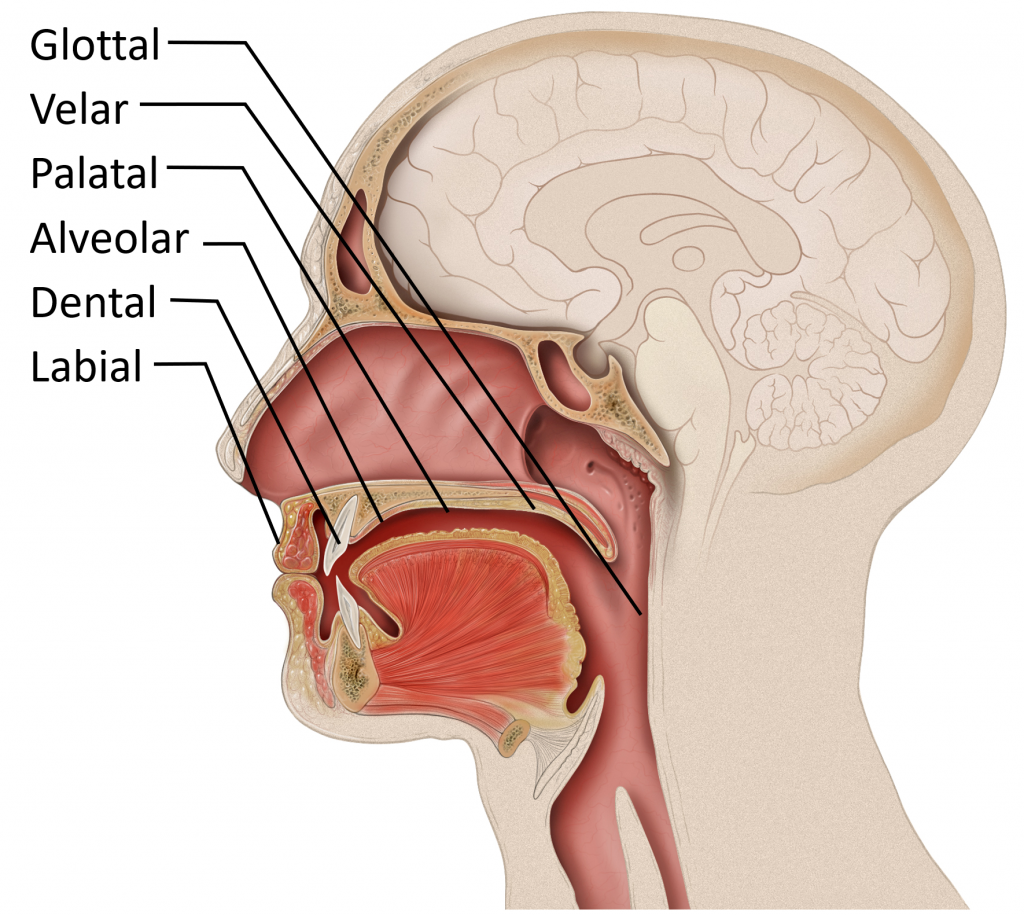

The Mouth The mouth is the major site for articulatory processes of speech production. It contains active articulators that can move and take different positions such as the tongue, the lips, the soft palate. There are passive articulators that cannot move but combine with the active articulators to produce speech. The teeth, the teeth ridge or the alveolar ridge, and the hard palate are the passive articulators.

Amongst the active articulators, the tongue can take the maximum number of positions and combinations to. Being an active muscle, its parts can be lowered or raised. The tongue is a major articulator in the production of vowel sounds. Position of the tongue determines the acoustics in the oral cavity during articulation of vowel sounds. For the purpose of identifying and describing articulatory processes, the tongue has been classified on two parameters.

a. The part of the tongue that is raised during the articulation process. There are four markers to classify the height to which the tongue is raised

- Maximum height

- Minimum height

- Two third of maximum height

- One third of maximum height

b. The height to which the tongue is raised during the articulation process. Three main parts of the tongue are identified as Front, Back, and Center.

For the purpose of description the positions of the tongue are diagrammatically represented through the tongue quadrilateral.

- Close: The Maximum height is called the high position or the close position. This is because the gap between the tongue and the roof of mouth is nearly closed.

- High-Mid or Half Close : Two third of maximum is called high- mid position or half – close position

- Low-Mid or Half Open : One third of maximum is called low – mid position or half- open position

- Low or Open : The Minimum height is called the Low or the Open position. This permits the maximum gap between the tongue and the roof of mouth.

The tongue also acts as an active articulator on the roof of the mouth to create obstruction in the oral cavity. Few prominent positions of the tongue are shown below

Lips: The lips are two strong muscles. In speech production the movement of the upper lip is less than that of the lower lip. The lips take different shapes: Rounded, Neutral or Spread

Teeth : The Upper Teeth are Passive Articulators.

The roof of the mouth:

The roof of the mouth has a hard portion and a soft portion which are fused seamlessly. The hard portion comprises of the Alveolar Ridge and the Hard Palate. The soft portion comprises of the Velum and the Uvula. The anterior part of the roof of the mouth is hard and unmovable. It begins from the irregular surface called alveolar ridge which lies behind the upper teeth. The alveolar ridge is followed by the hard palate which extends up to the centre of the tongue. The posterior part of the roof of the mouth is soft and movable. It lies after the hard palate and extends up to the small structure called the uvula.

The soft palate: It is movable and can take different positions during speech production.

- Raised position: In raised position the soft palate rests against the back of the mouth. The nasal passage is fully blocked and air passes through the mouth

- Lowered Position: In lowered position the soft palate rests against the back part of tongue in such a way that the oral passage is fully blocked and air passes through the nasal passage.

- Partially lowered Position: In partially lowered position, the oral as well as the nasal passages are partially open. Pulmonic air passes though the mouth as well as the nose to create ‘nasalized’ sounds.

The hard palate lies between the alveolar ridge and velum. It is a hard and unmovable part of the roof of the mouth. It lies opposite to the centre of the tongue and acts as a passive articulator against the tongue to produce sounds. Sounds produced with the involvement of the hard palate are called palatal sounds.

The alveolar ridge is the wavy part that lies just behind the teeth ridge opposite to the front of the tongue. It acts as a passive articulator against the tongue to produce sounds. Sounds produced with the involvement of the Alveolar ridge are called Alveolar sounds. Some sounds are created with the involvement of the posterior region of the Alveolar ridge. These sounds are called post alveolar sounds. Sometimes sounds are created with the involvement of the hindmost part of the alveolar ridge and the foremost part of the hard palate. Such sounds are called palato alveolar sounds.

Air stream mechanisms involved in speech production

The flow of air or the airstream is manipulated in a number of ways during production of speech. This is done with the movement of the active articulators in the oral cavity or the larynx. In this process the air stream plays a major role in the production of speech sound. Air stream works on the concept of air pressure. If the air pressure inside the mouth is greater than the pressure in the atmosphere, air will escape outward to create a balance. If the air pressure inside the mouth is lower than the pressure outside because of expansion of the oral or pharyngeal cavity, the air will move inward into the mouth to create balance. On the basis of the nature of the obstruction and manner of release, the following classification has been made:

Plosive: In this process there is full closure of the passage followed by sudden release of air. The air is compressed and when the articulators are suddenly removed the air in the mouth escapes with an explosive sound.

Affricate: In this process there is full closure of the passage followed by slow release of air.

Fricative : In this process the closure is not complete. The articulators come together to create a narrow passage. Air is compressed to pass through this narrow stricture so that air escapes with audible friction.

Nasal: The soft palate is lowered so that the oral cavity is closed. Air passes through the nasal passage creating nasal sounds. If the soft palate is partially lowered air passes simultaneously through the oral and nasal passages creating the ‘nasalized’ version of sounds. Lateral: The obstruction in the mouth is such that the air is free to pass on both sides of the obstruction.

Glide: The position of the articulators undergoes change during the articulation process. It begins with the articulators taking one position and then smoothly moving to another position.

Speech mechanism is a complex process unique to humans. It involves the brain, the neural network, the respiratory organs, the larynx, the oral cavity, the nasal cavity and the organs in the mouth. Through speech production humans engage in verbal communication. Since earliest times efforts have been made to comprehend the mechanism of speech. In 1791 Wolfgang von Kempelen made the first speech synthesizer. In the first few decades of the twentieth century scientific inventions such as x-ray, spectrograph, and voice recorders provided new tools for the study of speech mechanism. In the later part of the twentieth century electronic innovations were followed by the digital revolution in technology. These developments have made new revelations and have given new direction to the knowledge of human speech mechanism. In the digital world an understanding of speech mechanism has led to new applications in speech synthesis. Speech mechanism studies in present times are divided into areas of super specialization which focus intensively on any specialized attribute of speech mechanism.

References :

- Chomsky, Noam. Aspects of the Theory of Syntax.1965. Cambridge M.A.: MIT Press, 2015.

- Chomsky, Noam. Language and Mind. 3rd ed. New York: Cambridge University Press, 2006. Eklund, Robert. www.ingressive.info. Web. Accessed on 5 March 2017.

- Mannel,Robert. http://clas.mq.edu.au/speech/phonetics/phonetics/introduction/respiration.html. Web. Accessed on 5March 2017.

- Mannel,Robert. http://clas.mq.edu.au/speech/phonetics/phonetics/introduction/vocaltract_diagram.htm l. Web. Accessed on 5 March 2017.

- Mannel,Robert. http://clas.mq.edu.au/speech/phonetics/phonetics/airstream_laryngeal/laryngeal.html. Web. Accessed on 5 March 2017.

- Newman, Daniel. https://community.dur.ac.uk/daniel.newman/phon10.pdf. Web. Accessed on 5 March 2017.

- Saussure, Ferdinand. Course in General Linguistics. Translated by Wade Baskin. Edited by Perry Meisel and Haun Saussy. New York: Columbia University Press, 2011.

- Wilson, Robert Andrew and Frank C. Keil. Eds. The MIT Encyclopedia of Cognitive Sciences.1999. Cambridge M.A.: MIT Press, 2001.

Want to create or adapt books like this? Learn more about how Pressbooks supports open publishing practices.

2.1 How Humans Produce Speech

Phonetics studies human speech. Speech is produced by bringing air from the lungs to the larynx (respiration), where the vocal folds may be held open to allow the air to pass through or may vibrate to make a sound (phonation). The airflow from the lungs is then shaped by the articulators in the mouth and nose (articulation).

Check Yourself

Video script.

The field of phonetics studies the sounds of human speech. When we study speech sounds we can consider them from two angles. Acoustic phonetics , in addition to being part of linguistics, is also a branch of physics. It’s concerned with the physical, acoustic properties of the sound waves that we produce. We’ll talk some about the acoustics of speech sounds, but we’re primarily interested in articulatory phonetics , that is, how we humans use our bodies to produce speech sounds. Producing speech needs three mechanisms.

The first is a source of energy. Anything that makes a sound needs a source of energy. For human speech sounds, the air flowing from our lungs provides energy.

The second is a source of the sound: air flowing from the lungs arrives at the larynx. Put your hand on the front of your throat and gently feel the bony part under your skin. That’s the front of your larynx . It’s not actually made of bone; it’s cartilage and muscle. This picture shows what the larynx looks like from the front.

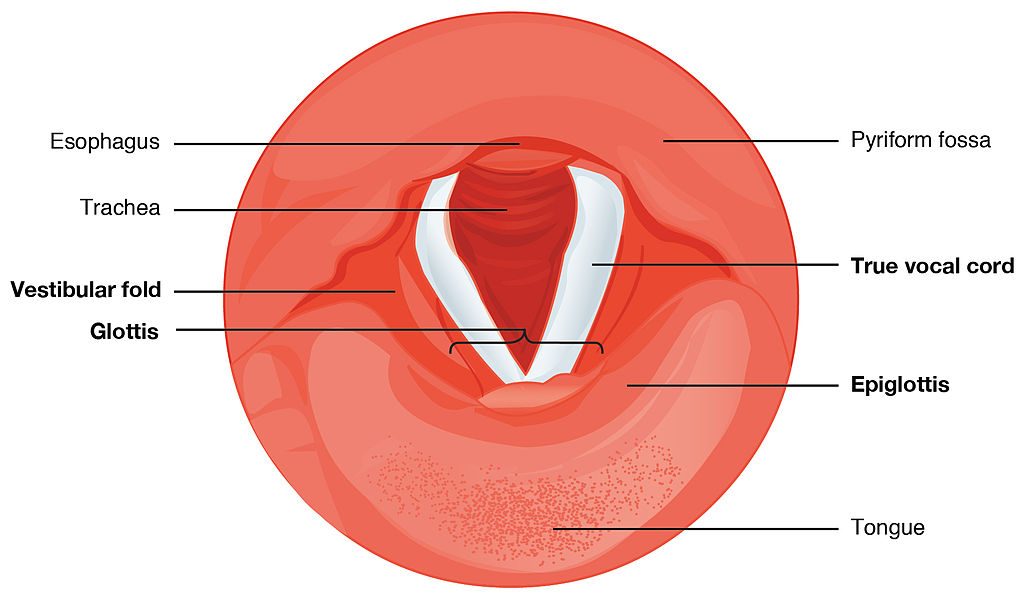

This next picture is a view down a person’s throat.

What you see here is that the opening of the larynx can be covered by two triangle-shaped pieces of skin. These are often called “vocal cords” but they’re not really like cords or strings. A better name for them is vocal folds .

The opening between the vocal folds is called the glottis .

We can control our vocal folds to make a sound. I want you to try this out so take a moment and close your door or make sure there’s no one around that you might disturb.

First I want you to say the word “uh-oh”. Now say it again, but stop half-way through, “Uh-”. When you do that, you’ve closed your vocal folds by bringing them together. This stops the air flowing through your vocal tract. That little silence in the middle of “uh-oh” is called a glottal stop because the air is stopped completely when the vocal folds close off the glottis.

Now I want you to open your mouth and breathe out quietly, “haaaaaaah”. When you do this, your vocal folds are open and the air is passing freely through the glottis.

Now breathe out again and say “aaah”, as if the doctor is looking down your throat. To make that “aaaah” sound, you’re holding your vocal folds close together and vibrating them rapidly.

When we speak, we make some sounds with vocal folds open, and some with vocal folds vibrating. Put your hand on the front of your larynx again and make a long “SSSSS” sound. Now switch and make a “ZZZZZ” sound. You can feel your larynx vibrate on “ZZZZZ” but not on “SSSSS”. That’s because [s] is a voiceless sound, made with the vocal folds held open, and [z] is a voiced sound, where we vibrate the vocal folds. Do it again and feel the difference between voiced and voiceless.

Now take your hand off your larynx and plug your ears and make the two sounds again with your ears plugged. You can hear the difference between voiceless and voiced sounds inside your head.

I said at the beginning that there are three crucial mechanisms involved in producing speech, and so far we’ve looked at only two:

- Energy comes from the air supplied by the lungs.

- The vocal folds produce sound at the larynx.

- The sound is then filtered, or shaped, by the articulators .

The oral cavity is the space in your mouth. The nasal cavity, obviously, is the space inside and behind your nose. And of course, we use our tongues, lips, teeth and jaws to articulate speech as well. In the next unit, we’ll look in more detail at how we use our articulators.

So to sum up, the three mechanisms that we use to produce speech are:

- respiration at the lungs,

- phonation at the larynx, and

- articulation in the mouth.

Essentials of Linguistics Copyright © 2018 by Catherine Anderson is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License , except where otherwise noted.

Share This Book

Overview of Speech Production and Speech Mechanism

by BASLPCOURSE.COM

Overview of Speech Production and Speech Mechanism: Communication is a fundamental aspect of human interaction, and speech production is at the heart of this process. Behind every spoken word lies a series of intricate steps that allow us to convey our thoughts and ideas effectively. Speech production involves three essential levels: conceptualization, formulation, and articulation. In this article, we will explore each level and understand how they contribute to the seamless flow of communication.

Overview of Speech Production

Speech Production deals in 3 levels:

Conceptualization

Formulation , articulation .

Speech production is a remarkable process that involves multiple intricate levels. From the initial conceptualization of ideas to their formulation into linguistic forms and the precise articulation of sounds, each stage plays a vital role in effective communication. Understanding these levels helps us appreciate the complexity of human speech and the incredible coordination between the brain and the vocal tract. By honing our speech production skills, we can become more effective communicators and forge stronger connections with others.

Steps of Speech Production

Conceptualization is the first level of speech production, where ideas and thoughts are born in the mind. At this stage, a person identifies the message they want to convey, decides on the key points, and organizes the information in a coherent manner. This process is highly cognitive and involves accessing knowledge, memories, and emotions related to the topic.

During conceptualization, the brain’s language centers, such as the Broca’s area and Wernicke’s area, play a crucial role. The Broca’s area is involved in the planning and sequencing of speech, while the Wernicke’s area is responsible for understanding and accessing linguistic information.

For example, when preparing to give a presentation, the conceptualization phase involves structuring the content logically, identifying the main ideas, and determining the tone and purpose of the speech.

The formulation stage follows conceptualization and involves transforming abstract thoughts and ideas into linguistic forms. In this stage, the brain converts the intended message into grammatically correct sentences and phrases. The formulation process requires selecting appropriate words, arranging them in a meaningful sequence, and applying the rules of grammar and syntax.

At the formulation level, the brain engages the motor cortex and the areas responsible for language production. These regions work together to plan the motor movements required for speech.

During formulation, individuals may face challenges, such as word-finding difficulties or grammatical errors. However, with practice and language exposure, these difficulties can be minimized.

Continuing with the previous example of a presentation, during the formulation phase, the speaker translates the organized ideas into spoken language, ensuring that the sentences are clear and coherent.

Articulation is the final level of speech production, where the formulated linguistic message is physically produced and delivered. This stage involves the precise coordination of the articulatory organs, such as the tongue, lips, jaw, and vocal cords, to create the specific sounds and speech patterns of the chosen language.

Smooth and accurate articulation is essential for clear communication. Proper articulation ensures that speech sounds are recognizable and intelligible to the listener. Articulation difficulties can lead to mispronunciations or speech disorders , impacting effective communication.

In the articulation phase, the motor cortex sends signals to the speech muscles, guiding their movements to produce the intended sounds. The brain continuously monitors and adjusts these movements to maintain the fluency of speech.

For instance, during the presentation, the speaker’s articulation comes into play as they deliver each sentence, ensuring that their words are pronounced correctly and clearly.

Overview of Speech Mechanism

Speech Sub-system

The speech mechanism is a complex and intricate process that enables us to produce and comprehend speech. The speech mechanism involves a coordinated effort of speech subsystems working together seamlessly. Speech Mechanism is done by 5 Sub-systems:

- Respiratory System

Phonatory System

- Resonatory System

- Articulatory System

Regulatory System

I. Respiratory System

Respiration: The Foundation of Speech

Speech begins with respiration, where the lungs provide the necessary airflow. The diaphragm and intercostal muscles play a crucial role in controlling the breath, facilitating the production of speech sounds.

II. Phonatory System

Phonation: Generating the Sound Source

Phonation refers to the production of sound by the vocal cords in the larynx. As air from the lungs passes through the vocal cords, they vibrate, creating the fundamental frequency of speech sounds.

Phonation, in simple terms, refers to the production of sound through the vibration of the vocal folds in the larynx. When air from the lungs passes through the vocal folds, they rapidly open and close, generating vibrations that produce sound waves. These sound waves then resonate in the vocal tract, shaping them into distinct speech sounds.

The Importance of Phonation in Speech Production

Phonation is a fundamental aspect of speech production as it forms the basis for vocalization. The process allows us to articulate various speech sounds, control pitch, and modulate our voices to convey emotions and meaning effectively.

Mechanism of Phonation

Vocal Fold Structure

To understand phonation better, we must examine the structure of the vocal folds. The vocal folds, also known as vocal cords, are situated in the larynx (voice box) and are composed of elastic tissues. They are divided into two pairs, with the true vocal folds responsible for phonation.

The Process of Phonation

The process of phonation involves a series of coordinated movements. When we exhale, air is expelled from the lungs, causing the vocal folds to close partially. The buildup of air pressure beneath the closed vocal folds causes them to be pushed open, releasing a burst of air. As the air escapes, the vocal folds quickly close again, repeating the cycle of vibrations, which results in a continuous sound stream during speech.

III. Resonatory System

Resonance: Amplifying the Sound

The sound produced in the larynx travels through the pharynx, oral cavity, and nasal cavity, where resonance occurs. This amplification process adds richness and depth to the speech sounds.

IV. Articulatory System

Articulation: Shaping Speech Sounds

Articulation involves the precise movements of the tongue, lips, jaw, and soft palate to shape the sound into recognizable speech sounds or phonemes.

When we speak, our brain sends signals to the muscles responsible for controlling these speech organs, guiding them to produce different articulatory configurations that result in distinct sounds. For example, to form the sound of the letter “t,” the tongue makes contact with the alveolar ridge (the ridge behind the upper front teeth), momentarily blocking the airflow before releasing it to create the characteristic “t” sound.

The articulation process is highly complex and allows us to produce a vast array of speech sounds, enabling effective communication. Different languages use different sets of speech sounds, and variations in articulation lead to various accents and dialects.

Efficient articulation is essential for clear and intelligible speech, and any impairment or deviation in the articulatory process can result in speech disorders or difficulties. Speech therapists often work with individuals who have articulation problems to help them improve their speech and communication skills. Understanding the mechanisms of articulation is crucial in studying linguistics, phonetics, and the science of speech production.

Articulators are the organs and structures within the vocal tract that are involved in shaping the airflow to produce specific sounds. Here are some of the main articulators and the sounds they help create:

- The tongue is one of the most versatile articulators and plays a significant role in shaping speech sounds.

- It can move forward and backward, up and down, and touch various parts of the mouth to produce different sounds.

- For example, the tip of the tongue is involved in producing sounds like “t,” “d,” “n,” and “l,” while the back of the tongue is used for sounds like “k,” “g,” and “ng.”

- The lips are essential for producing labial sounds, which involve the use of the lips to shape the airflow.

- Sounds like “p,” “b,” “m,” “f,” and “v” are all labial sounds, where the lips either close or come close together during articulation.

- The teeth are involved in producing sounds like “th” as in “think” and “this.”

- In these sounds, the tip of the tongue is placed against the upper front teeth, creating a unique airflow pattern.

Alveolar Ridge:

- The alveolar ridge is a small ridge just behind the upper front teeth.

- Sounds like “t,” “d,” “s,” “z,” “n,” and “l” involve the tongue making contact with or near the alveolar ridge.

- The palate, also known as the roof of the mouth, plays a role in producing sounds like “sh” and “ch.”

- These sounds, known as postalveolar or palato-alveolar sounds, involve the tongue articulating against the area just behind the alveolar ridge.

Velum (Soft Palate):

- The velum is the soft part at the back of the mouth.

- It is raised to close off the nasal cavity during the production of non-nasal sounds like “p,” “b,” “t,” and “d” and lowered to allow airflow through the nose for nasal sounds like “m,” “n,” and “ng.”

- The glottis is the space between the vocal cords in the larynx.

- It plays a role in producing sounds like “h,” where the vocal cords remain open, allowing the airflow to pass through without obstruction.

By combining the movements and positions of these articulators, we can produce the vast range of speech sounds used in different languages around the world. Understanding the role of articulators is fundamental to the study of phonetics and speech production .

V. Regulatory system

Regulation: The Role of the Brain and Nervous System

The brain plays a pivotal role in controlling and coordinating the speech mechanism.

Broca’s Area: The Seat of Speech Production

Located in the left frontal lobe, Broca’s area is responsible for speech production and motor planning for speech movements.

Wernicke’s Area: Understanding Spoken Language

Found in the left temporal lobe, Wernicke’s area is crucial for understanding spoken language and processing its meaning.

Arcuate Fasciculus: Connecting Broca’s and Wernicke’s Areas

The arcuate fasciculus is a bundle of nerve fibers that connects Broca’s and Wernicke’s areas, facilitating communication between speech production and comprehension centers.

Motor Cortex: Executing Speech Movements

The motor cortex controls the muscles involved in speech production, translating neural signals into precise motor movements.

References :

- Speech Science Primer (Sixth Edition) Lawrence J. Raphael, Gloria J. Borden, Katherine S. Harris [Book]

- Manual on Developing Communication Skill in Mentally Retarded Persons T.A. Subba Rao [Book]

- SPEECH CORRECTION An Introduction to Speech Pathology and Audiology 9th Edition Charles Van Riper [Book]

You are reading about:

Share this:.

- Click to share on Twitter (Opens in new window)

- Click to share on Facebook (Opens in new window)

- Click to share on LinkedIn (Opens in new window)

- Click to share on Telegram (Opens in new window)

- Click to share on WhatsApp (Opens in new window)

- Click to print (Opens in new window)

If you have any Suggestion or Question Please Leave a Reply Cancel reply

Recent articles.

- Types of Earmolds for Hearing Aid – Skeleton | Custom

- Procedure for Selecting Earmold and Earshell

- Ear Impression Techniques for Earmolds and Earshells

- Speech and Language Disability Percentage Calculation

- Frenchay Dysarthria Assessment 2 (FDA 2): Scoring | Interpretation

Articulatory Mechanisms in Speech Production

- First Online: 18 April 2021

Cite this chapter

- Štefan Beňuš 2 , 3

1377 Accesses

The chapter builds the foundation for understanding how our bodies create speech. The discussion is framed around three main processes related to speaking: breathing, voicing and articulation. The discovery activities and commentaries bring awareness of multiple communicative functions produced by coordinated actions of various organs participating in speaking. Benus closes the chapter by comparing the vocal tracts of humans and chimpanzees and presenting the hypotheses of Philip Lieberman that the human speech apparatus evolved adaptively favouring the communicative function over the more basic ones linked to survival.

This is a preview of subscription content, log in via an institution to check access.

Access this chapter

Subscribe and save.

- Get 10 units per month

- Download Article/Chapter or eBook

- 1 Unit = 1 Article or 1 Chapter

- Cancel anytime

- Available as PDF

- Read on any device

- Instant download

- Own it forever

- Available as EPUB and PDF

- Compact, lightweight edition

- Dispatched in 3 to 5 business days

- Free shipping worldwide - see info

Tax calculation will be finalised at checkout

Purchases are for personal use only

Institutional subscriptions

But interested readers can find many great illustrations of the anatomy of the tongue in the internet, for example here https://www.yorku.ca/earmstro/journey/tongue.html .

Many authors refer to this part of the tongue as the ‘front’. However, this is not very intuitive and thus the term ‘body’ will be used in this book.

Anderson, Rindy C., Casey A. Klofstad, William J. Mayew, and Mohan Venkatachalam. 2014. Vocal fry may undermine the success of young women in the labor market. PLoS ONE 9 (5): e97506. https://doi.org/10.1371/journal.pone.0097506 .

Article Google Scholar

Fitch, Tecumseh W., Bart de Boer, Neil Mathur, and Asif A. Ghazanfar. 2016. Monkey vocal tracts are speech-ready. Science Advances 2 (12): e1600723. https://doi.org/10.1126/sciadv.1600723 .

Laver, John. 1980. The phonetic description of voice quality . Cambridge: Cambridge University Press.

Google Scholar

Lieberman, Philip. 2016. Comment on “Monkey vocal tracts are speech-ready”. Science Advances 3 (7): e1700442. https://doi.org/10.1126/sciadv.1700442 .

Lieberman, Philip. 1975. On the origins of language: An introduction to the evolution of human speech . New York: Macmillan.

Lieberman, Philip, Edmund S. Crelin, and Dennis H. Klatt. 1972. Phonetic ability and related anatomy of the newborn and adult human, neanderthal man, and the chimpanzee. American Anthropologist 74: 287–307.

Wolk, Lesley, Nassima B. Abdelli-Beruh, and Dianne Slavin. 2011. Habitual use of vocal fry in young adult female speakers. Journal of Voice 26(3): 111–116.

Yuasa, Ikuko P. 2010. Creaky Voice: A new feminine voice quality for young urban-oriented upwardly mobile American women? American Speech 85(3): 315–337.

Download references

Author information

Authors and affiliations.

Department of English and American Studies, Faculty of Arts, Constantine the Philosopher University in Nitra, Nitra, Slovakia

Štefan Beňuš

Institute of Informatics, Slovak Academy of Sciences, Bratislava, Slovakia

You can also search for this author in PubMed Google Scholar

Corresponding author

Correspondence to Štefan Beňuš .

3.1 Electronic Supplementary Material

Ch3_links.docx.

(DOCX 28 kb)

Rights and permissions

Reprints and permissions

Copyright information

© 2021 The Author(s)

About this chapter

Beňuš, Š. (2021). Articulatory Mechanisms in Speech Production. In: Investigating Spoken English. Palgrave Macmillan, Cham. https://doi.org/10.1007/978-3-030-54349-5_3

Download citation

DOI : https://doi.org/10.1007/978-3-030-54349-5_3

Published : 18 April 2021

Publisher Name : Palgrave Macmillan, Cham

Print ISBN : 978-3-030-54348-8

Online ISBN : 978-3-030-54349-5

eBook Packages : Social Sciences Social Sciences (R0)

Share this chapter

Anyone you share the following link with will be able to read this content:

Sorry, a shareable link is not currently available for this article.

Provided by the Springer Nature SharedIt content-sharing initiative

- Publish with us

Policies and ethics

- Find a journal

- Track your research

Thank you for visiting nature.com. You are using a browser version with limited support for CSS. To obtain the best experience, we recommend you use a more up to date browser (or turn off compatibility mode in Internet Explorer). In the meantime, to ensure continued support, we are displaying the site without styles and JavaScript.

- View all journals

- Explore content

- About the journal

- Publish with us

- Sign up for alerts

- Open access

- Published: 11 March 2020

Phonatory and articulatory representations of speech production in cortical and subcortical fMRI responses

- Joao M. Correia ORCID: orcid.org/0000-0001-6624-7012 1 , 2 ,

- César Caballero-Gaudes 1 ,

- Sara Guediche 1 &

- Manuel Carreiras ORCID: orcid.org/0000-0001-6726-7613 1 , 3 , 4

Scientific Reports volume 10 , Article number: 4529 ( 2020 ) Cite this article

8040 Accesses

21 Citations

2 Altmetric

Metrics details

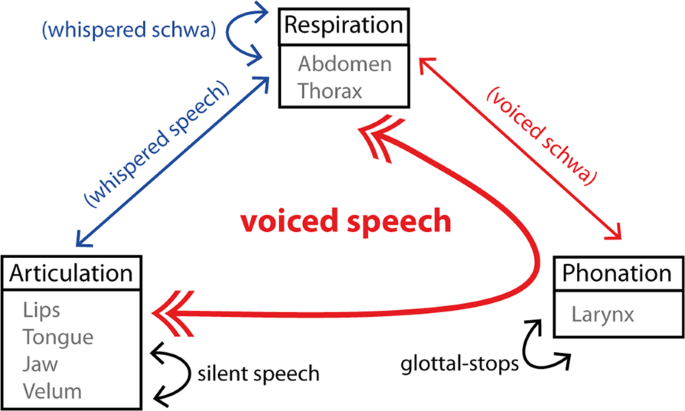

- Motor cortex

Speaking involves coordination of multiple neuromotor systems, including respiration, phonation and articulation. Developing non-invasive imaging methods to study how the brain controls these systems is critical for understanding the neurobiology of speech production. Recent models and animal research suggest that regions beyond the primary motor cortex (M1) help orchestrate the neuromotor control needed for speaking, including cortical and sub-cortical regions. Using contrasts between speech conditions with controlled respiratory behavior, this fMRI study investigates articulatory gestures involving the tongue, lips and velum (i.e., alveolars versus bilabials, and nasals versus orals), and phonatory gestures (i.e., voiced versus whispered speech). Multivariate pattern analysis (MVPA) was used to decode articulatory gestures in M1, cerebellum and basal ganglia. Furthermore, apart from confirming the role of a mid-M1 region for phonation, we found that a dorsal M1 region, linked to respiratory control, showed significant differences for voiced compared to whispered speech despite matched lung volume observations. This region was also functionally connected to tongue and lip M1 seed regions, underlying its importance in the coordination of speech. Our study confirms and extends current knowledge regarding the neural mechanisms underlying neuromotor speech control, which hold promise to study neural dysfunctions involved in motor-speech disorders non-invasively.

Similar content being viewed by others

Basal ganglia and cerebellum contributions to vocal emotion processing as revealed by high-resolution fMRI

Hand posture affects brain-function measures associated with listening to speech

Motor engagement relates to accurate perception of phonemes and audiovisual words, but not auditory words

Introduction.

Despite scientific interest in verbal communication, the neural mechanisms supporting speech production remain unclear. The goal of the current study is to capture the underlying representations that support the complex orchestration of articulators, respiration, and phonation needed to produce intelligible speech. Importantly, voiced speech can be defined as an orchestrated task, where concerted phonation-articulation is mediated by respiration 1 . In turn, a more detailed neural specification of these gestures in fluent speakers is necessary to develop biologically plausible models of speech production. The ability to image the speech production circuitry at work using non-invasive methods holds promise for future application in studies that aim to assess potential dysfunction.

Upper motor-neurons located within the primary motor cortex (M1) exhibit a somatotopic organization that projects onto the brain-stem innervating the musculature of speech 2 , 3 , 4 , 5 , 6 . This functional organization of M1 has been replicated with functional magnetic resonance imaging (fMRI) for the lip, tongue and jaw control regions 7 , 8 , 9 , 10 , 11 . However, the articulatory control of the velum, which has an active role in natural speech (oral and nasal sounds) remains largely underspecified. Furthermore, laryngeal muscle control, critical for phonation, has more recently been mapped onto two separate areas in M1 4 , 5 , 12 : a ventral and a dorsal laryngeal motor area (vLMA and dLMA). Whereas the vLMA (ventral to the tongue motor area) is thought to operate the extrinsic laryngeal muscles, controlling the vertical position of the glottis within the vocal tract, and thereby modulating pitch in voice, the dLMA (dorsal to the lip motor area) is thought to operate intrinsic laryngeal muscles responsible for the adduction and abduction of the vocal cords, which is central to voicing in humans. Isolating the neural control of the intrinsic laryngeal muscles during natural voiced speech is critical for developing a mechanistic understanding of the speaking circuit. At least three research strategies have been adopted in the past in fMRI: (a) contrasting overt (voiced) and covert (imagery) speech 7 , 11 , 13 ; (b) production of glottal stops 12 ; and (c) contrasting voiced and whispered-like (i.e., exhalation) speech 14 . The latter potentially isolates phonation while preserving key naturalistic features of speech, including the sustained and partial adduction of the glottis, the synchronization of phonation, respiration and articulation, and the generation of an acoustic output. In this way, whispered speech can be considered an ecological baseline condition for isolating phonatory processes, free of confounds that may be present with covert speech and the production of glottal stops. Nevertheless, until now, its use has been limited across fMRI studies.

Despite the detailed investigations of M1, the somatotopic organization during overt speech in regions beyond M1 has been relatively unexplored, especially with fMRI. Studying articulatory processes using fMRI has several advantages over other neuroimaging techniques, including high spatial detail 15 , 16 , and simultaneous cortical and subcortical coverage, which can reveal brain connectivity during speech production helping to achieve a better understanding of the underlying neural circuitry. Despite the benefits of fMRI for speech production research, the signal collected during online speech tasks can be confounded by multiple artefactual sources 17 , for example those associated to head motion 18 and breathing 19 , 20 , 21 . Head motion is modulated by speech conditions and breathing affects arterial concentrations of CO 2 , regulating cerebral blood flow (CBF) and volume (CBV) and contributing to the measured fMRI signal. Here, we take advantage of several methodological strategies to avoid both head motion and breathing confounds by employing sparse-sampling fMRI 18 and experimental conditions with well-matched respiratory demands that are measured, respectively.

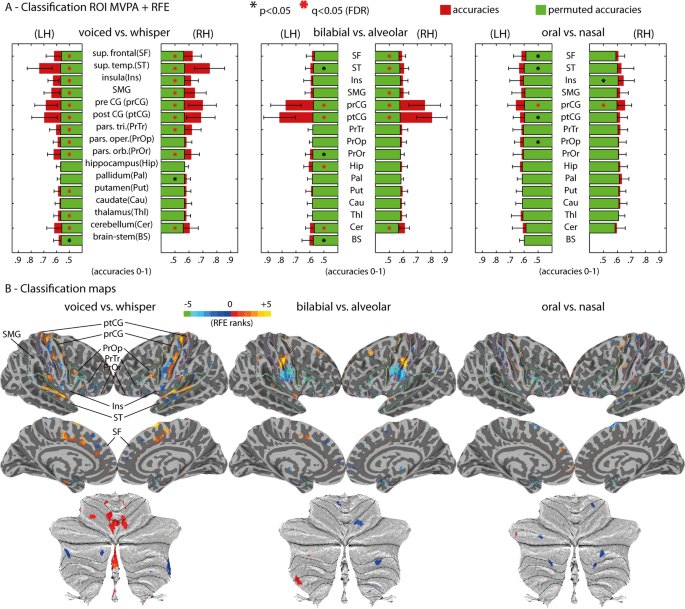

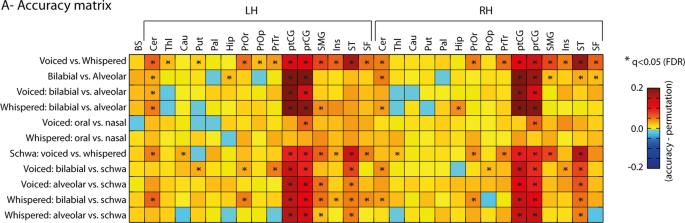

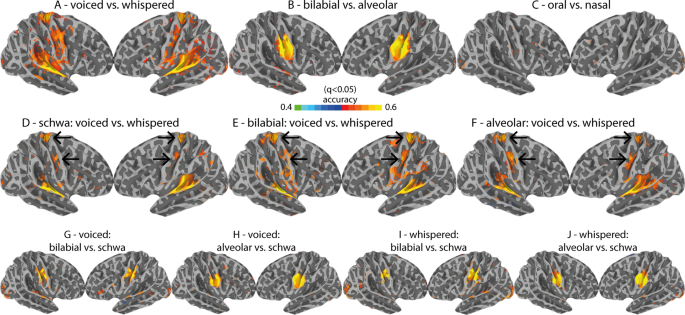

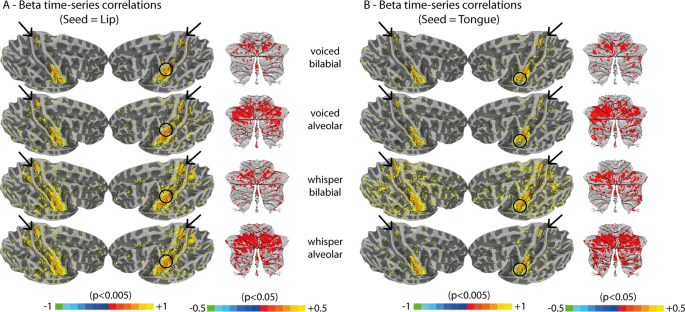

Using these methods, in this study we investigated fMRI representations of speech production, including articulatory and phonatory gestures across the human cortex and subcortex by employing multivariate decoding methods successfully used in fMRI studies of speech perception 22 , 23 , 24 . Articulatory representations were studied by discriminating individual speech gestures involving the lips, the tongue and the velum. Phonatory representations were studied by contrasting voiced and whispered speech. Furthermore, we recorded lung volume, articulatory measures and speech acoustics to rule out possible non-neural confounds in our analysis. Twenty fluent adults read a list of bi-syllabic non-words, balanced for bilabial and alveolar places of articulation, oral and nasal manners of articulation, and the non-articulated vowel ‘schwa’, using both voiced and whispered speech.

Our analysis employed multivariate decoding, based on anatomically-selected regions of interest (ROIs) that are part of the broad speech production circuitry, in combination with a recursive feature elimination (RFE) strategy 22 . Cortical results were further validated using a searchlight approach that uses a local voxel selection moved across the brain 25 . We expected to find articulatory-specific representations in multiple regions previously linked to somatotopic representations, which included the pre-motor cortex, SMA and pre-SMA, basal-ganglia, brain-stem and cerebellum 26 , 27 , 28 . We further expected to find evidence for larger fMRI responses for voiced in contrast to whispered speech in brain regions implicated in vocal fold adduction (e.g., dLMA 12 ). Finally, we investigated functional connectivity using seed regions responsible for lip and tongue control that were possible to localize at the individual subject-level. Accordingly, we expected connections between the different somatotopic organizations across the brain to differ for articulatory and phonatory processes, elucidating the distributed nature of the speaking circuitry 29 and the systems that support the control of different musculature for fluent speech. Overall, this study aims to replicate and extend prior fMRI work on the neural representations of voiced speech, which includes studying the neuromotor control of key speech articulators and phonation.

Participants

Twenty right-handed participants (5 males), native Spanish speaking, and aged between 20 and 44 years old (mean = 28, sd = 8.14) were recruited to this study using the volunteer recruitment platform ( https://www.bcbl.eu/participa ) at the Basque Centre on Cognition, Brain and Language (BCBL), Spain. Participation was voluntary and all participants gave their informed consent prior to testing. The experiment was conducted in accordance with the Declaration of Helsinki and approved by the BCBL ethics committee. Participants had MRI experience, and were informed of the scope of the project, and in particular the importance of avoiding head movements during the speech tasks. Two participants were excluded from group analyses due to exceeding head motion in the functional scans. We note that the group sample had an unbalanced number of male and female participants, which should be taken into account when comparing the results of this study to other studies. Attention to gender may be especially important when considering patient populations, where gender seems to play an important role in occurrence/recovery across different speech disorders 30 . Nevertheless, the objective of our research question relates to basic motor skills, which are not expected to differ extensively between male and female healthy adults with comparable levels of fluency 31 . For the voiced speech condition, participants were informed that they should utter the speech sounds at a comfortable low volume level as they would during a conversation with a friend located at one-meter distance. For the whispered speech condition, participants were informed and trained to produce soft whispering, minimizing possible compensatory supra-glottal muscle activation 32 . Because the fMRI sequence employed sparse sampling acquisition that introduced a silent period for production in absence of auditory MR-related noise, participants were trained to synchronize their speech with these silent periods prior to the imaging session, yielding production in more ecological settings.

Stimuli were composed of written text, presented for 1.5 second in Arial font-style and font-size 40 at the center of the screen with Presentation software ( https://www.neurobs.com ). Five text items were used (‘bb’, ‘dd’, ‘mm’, ‘nn’ and ‘әә’), where ‘ә’ corresponds to the schwa vowel (V) and consonant-consonant (CC) stimuli were instructed to be pronounced by adding the schwa vowel to form a CVCV utterance (e.g., ‘bb’ was pronounced ‘bәbә’). This assured the same number of letters across the stimuli. The schwa vowel involves minimal or no tongue and lip movements, which promoted a better discrimination of labial from tongue gestures. For the voiced speech task, items were presented in green color (RBG color code = [0 0 1]) and for the whispered speech task in red color (RGB color code = [1 0 0]). Throughout the fMRI acquisitions, we simultaneously obtained auditory recordings of individual (i.e., single token) productions using an MR-compatible microphone ( Optoacoustics, Moshav Mazor, Israel ) placed 2 to 4 cm away from the participants’ mouth. Auditory recordings (sampling rate = 22400 Hz) were used to obtain a list of acoustic measures per token, including speech envelope, spectrogram, formants F1 and F2, and loudness. Loudness was computed based on the average of the absolute acoustic signal in a time window of 100 ms centered at the peak of the speech envelope. Speech envelope was computed using the Hilbert transform: first, an initial high-pass filter was applied to the auditory signal (cut-off frequency = 150 Hz, Butterworth IIR design with filter order 4, implemented with the filtfilt Matlab function, Mathworks, version 2014); second, the Hilbert transform was computed using the Matlab function Hilbert ; finally, the magnitude signal (absolute value) of the Hilbert transform output was low-pass filtered (cut-off frequency = 8 Hz, Butterworth IIR design with filter order 4, implemented with the Matlab function filtfilt ). The spectrogram was computed using a short-time Fourier transformation based on the Matlab function spectrogram with a segment length of 100 time-points, overlap of 90% and 128 frequency intervals. From the spectrogram, F1 and F2 formants were computed based on a linear prediction filter ( lpc Matlab function).

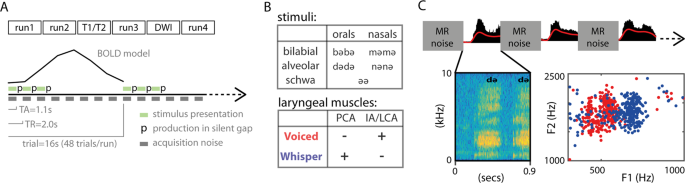

The task was to produce a given item either as voiced or whispered speech during a silent gap introduced between consecutive fMRI scans (i.e., sparse sampling). The silent gap was 900 ms. The relevance of speech production during the silent period was three-fold: first, it avoided the Lombard effect (speech intensity compensation due to environmental noise) 33 ; second, it limited the contamination of head movements related to speech production during fMRI acquisition 18 ; and third, it facilitated voice recording. Trials were presented in a slow event-related design, with an inter-trial-interval (ITI) of 16 seconds. Within each trial, participants read a given item 3 times, separated by a single fMRI volume acquisition (time of repetition, TR = 2000 ms) (Fig. 1A ). At each utterance, a written visual text cue was presented for 1500 ms aligned with the beginning of the TR, and as instructed, it indicated participants to utter the corresponding item in the following silent gap (i.e., between 1100 ms and 2000 ms). Item repetition was included to obtain fMRI responses of greater magnitude (i.e., higher contrast-to-noise-ratio, CNR) 34 , 35 . Between consecutive trials, a fixation cross was presented to maintain the attention of the participants at the center of the visual field. Each run lasted 13 minutes. A session was composed of 4 functional runs. After the second run, two anatomical scans were acquired (T1-weighted and T2-weighted). After the third run, two scans (10 volumes) with opposite phase-encoding directions (anterior-posterior and posterior-anterior) were acquired for in-plane distortion correction. Diffusion weighted imaging (DWI) scans were also acquired between run 3 and 4 for future analyses, but not included in the present analyses.

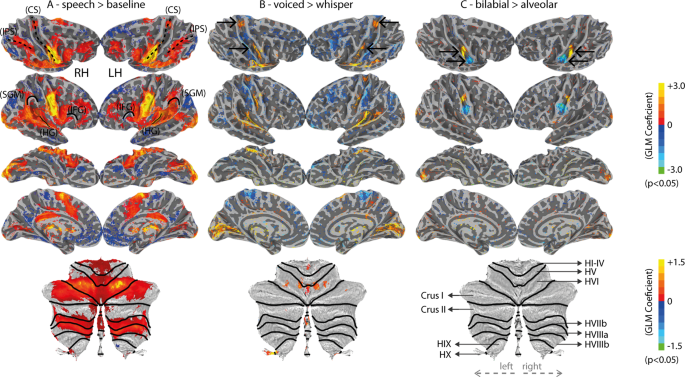

Description of the task. ( A ) Overview of the task: MRI session composed of 4 functional runs divided in trials separated by an inter-trial-interval of 16 s. In each trial, participants produced a given item 3 times. Items are disyllabic non-words (e.g.., bәbә). ( B ) Stimuli and laryngeal control: stimuli was balanced for place of articulation (bilabial and alveolar) and manner of articulation (orals and nasals), and the controlled vowel schwa (ә); for the voiced condition, the IA (interarytenoid) and LCA (lateral cricoarytenoid) laryngeal muscles are recruited, whereas the PCA (posterior cricoarytenoid) is not, and the reversed for the whispered condition. ( C ) Detail of task for a given trial: 0.9 s of silent gap were introduced between consecutive TRs for speech production without MRI noise; top: sound recording in black and low-pass-filtered signal envelope in red; below-left: spectrogram image of an utterance example; below-right: scatter plot of F1 and F2 formants in a given participant (each dot represents an utterance), red for voiced and blue for whispered speech.

MRI acquisition and preprocessing

MRI was acquired at the BCBL facilities using a 3 Tesla Siemens MAGNETOM Prisma-fit scanner with a 64-channel head coil (Erlangen, Germany). Two anatomical scans included a T1-weighted and a T2-weighted MRI-sequences with an isotropic voxel resolution of 1 mm 3 (176 slices, field of view = 256 × 256 mm, flip angle = 7 degrees; GRAPPA accelaration factor 2). T1-weighted (MPRAGE) used a TR (time of repetition) = 2530 ms and TE (time of echo) = 2.36 ms. T2-weighted (SPACE) used a TR = 3390 ms and TE = 389 ms. These scans were used for anatomical-functional alignment, and for gray-matter segmentation and generation of subject-specific cortical surface reconstructions using FreeSurfer (version 6.0.0, https://surfer.nmr.mgh.harvard.edu ). Gray-matter versus white-matter segmentation used the T1-weighted tissue contrast and gray-matter versus cerebral-spinal-fluid (CSF) segmentation used the T2-weighted tissue contrast based on the FreeSurfer segmentation pipeline. Individual segmentations were visually inspected, but none required manual corrections. T2*-weighted functional images were acquired with an isotropic voxel resolution of 2 × 2 × 2 mm 3 using a gradient-echo (GRE) simultaneous multi-slice (aka multiband) EPI sequence 15 , 16 with multiband acceleration factor 5, FOV = 208 × 208 mm (matrix size = 104 × 104), 60 axial slices with no distance factor between slices, flip angle = 78 degrees, TR = 2000 ms including a silent gap of 900 ms, TE = 37 ms, echo spacing = 0.58, bandwidth = 2290 Hz/Px, and anterior-to-posterior (AP) phase-encoding direction. Slices were oriented axially (and in oblique fashion) along the inferior limits of the frontal lobe, brain-stem and cerebellum. In cases where coverage did not guarantee full brain coverage, a portion of the anterior temporal pole was excluded. A delay in TR of 900 ms was introduced between consecutive TRs to allow speech production in absence of MR-related noise and minimize potential head motion artifacts (i.e., TA = 1100 ms). All functional pre-processing steps were performed in AFNI software (version 18.02.16) 36 using the afni_proc.py program in the individual native space of each participant and included: slice-timing correction; removal of first 3 TRs (i.e., 6 seconds), blip-up (aka, top-up) correction using the AP and PA scans 37 , co-registration of the functional images due to head motion relative to the image with minimal distance from the average displacement; and co-registration between anatomical and functional images.

Simultaneously with fMRI acquisition, physiological signals of respiration (chest volume) and articulation (pressure sensor placed under the chin of the participants) were recorded using the MP150 BIOPAC system (BIOPAC, Goleta, CA, USA). The BIOPAC system included MRI triggers delivered at each TR onset for synchronization between the physiological signals and the fMRI data. The respiratory waveform was measured using an elastic belt placed around the participant’s chest, connected to a silicon-rubber strain assembly (TSD201 module). The belt inputs directly to a respiration amplifier (RSP100C module) at 1000 Hz sampling rate. A low-pass filter with 10 Hz cut-off frequency was applied to the raw respiratory signal. The same sampling rate and low-pass filter was used for the pressure sensor (TSD160C) measuring articulatory movements.

Univariate analyses

Univariate statistics were based on individual general linear models implemented in AFNI (3dDeconvolve) for each participant. At a first level analysis (subject-level) regressors of interest for each condition type (i.e., 10 condition types: 2 tasks - voiced and whispered, and 5 words - ‘bb’, ‘dd’, ‘mm’, ‘nn’ and ‘ee’) were created using a double-gamma function ( SPMG1 ) to model the hemodynamic response function (HRF). Each modelled trial consisted of 3 consecutive production events separated by 1 TR, i.e., 6 second duration. Regressors of non-interest modelling low frequency trends (Legendre polynomials up to order 5) and the 6 realignment parameters (translation and rotation) were included in the design matrix of the GLM. Time points where the Euclidean norm of the derivatives of the realignment motion parameters exceeded 0.4 mm were also included in the GLM to censor occasional excessive motion. At a second level univariate analysis (group-level statistics), volumetric beta value maps were projected onto the cortical surfaces of each subject using SUMA (version Sep. 12 2018, https://afni.nimh.nih.gov/Suma ), based on the gray-matter ribbon segmentation obtained in FreeSurfer. Individual cortical surfaces in SUMA were inflated and mapped onto a spherical template based on macro-level curvature (i.e., gyri and sulci landmarks), which guarantees the anatomical alignment across participants. T-tests were employed to obtain group-level statistics on the cortical surfaces using AFNI ( 3dttest ++). Statistical maps comprised voiced versus whispered, bilabial versus alveolar, and oral versus nasal items. Group-level alignment of the cerebellum and intra-cerebellar lobules relied on a probabilistic parcelation method 38 provided in the SUIT toolbox ( version 3.3 , www.diedrichsenlab.org/imaging/suit.htm ) in conjunction with SPM ( version 12 , www.fil.ion.ucl.ac.uk/spm ) using the T1 and fMRI activation maps in MNI space and NIFTI format. Alignment to a surface-based template of the cerebellum’s gray-matter assured a higher degree of lobule specificity and across subject overlap. All cerebellar maps were projected onto a flat representation of the cerebellum’s gray-matter together with lobule parcelations for display purposes.

Univariate results were not corrected for multiple comparisons. Corrected statistics depended on the sensitivity of MVPA. In order to prepare single trial features for the MVPA analyses (i.e., feature estimation), fMRI responses for each trial and voxel were computed in non-overlapping epochs of 16 secs locked to trial onset (i.e. 9 time points) from the residual pre-processed files after regressing out the Legendre polynomials, realignment parameters and motion censoring volumes. Subsequently, single-trial voxel-wise fMRI responses were demeaned and detrended for a linear slope.

ROI + RFE MVPA

We adopted an initial MVPA approach based on an anatomical ROI selection using the Desikan-Killiany atlas followed by a nested recursive feature elimination (RFE) procedure 22 that iteratively selected voxels based on their sensitivity to decode experimental conditions. Thirty-one anatomical ROIs were selected given their predicted role in speech production 3 , 39 , and covered cortical and sub-cortical regions including the basal-ganglia, cerebellum and brainstem. Because MVPA potentially offers superior sensitivity for discriminating subtle experimental conditions, it was possible to include additional ROIs that have been reported in other human speech production experiments as well as those known to show somatotopy in animal research but that have insofar not shown speech selectivity in human fMRI. The ROIs included the brainstem and a set 15 ROIs per hemisphere: cerebellum (cer), thalamus (thl), caudate (cau), putamen (put), pallidum (pal), hippocampus (hip), pars orbitalis (PrOr), pars opercularis (PrOp), pars triangularis (PrTr), post-central gyrus (ptCG), pre-central gyrus (prCG), supramarginal gyrus (SMG), insula (ins), superior temporal lobe (ST), superior frontal lobe (SF, including SMA - supplementary motor area - and pre-SMA regions). After feature selection, single-trial fMRI estimates were used in multivariate classification using SVM based on a leave-run-out cross-validation procedure. This procedure was used conjointly with RFE 22 . RFE iteratively (here, 10 iterations were used) eliminates the least informative voxels (here, 30% elimination criterion was used) based on a nested cross-validation procedure (here, 40 nested splits based on a 0.9 ratio random selection of trials with replacement was used). In other words, within each cross-validation, SVM classification was applied to the 40 splits iteratively. Every fourth split, we averaged the SVM weights, applied spatial smoothing using a 3D filter [3 × 3 × 3] masked for the current voxel selection and removed the least informative 30% of voxels based on their absolute values. This procedure based on eliminating the least informative features continued for 10 iterations. The final classification accuracy of a given ROI and contrast was computed as the maximum classification obtained across the 10 RFE iterations. Because the maximum criterion is used to obtain sensitivity from the RFE method, chance-level is likely inflated and permutation testing is required. Permutation testing consisted of 100 label permutations, while repeating the same RFE classification procedure for every participant, ROI and classification contrast. This computational procedure is slow but provides sensitivity for detecting spatially distributed multivariate response patterns 23 , 24 .

Classification for the ROI + RFE procedure was performed using support vector machines (SVM). SVM classification was executed in Matlab using the libsvm library and the sequential minimal optimization (SMO) algorithm. Validation of classification results that is inherent to MVPA was performed using a leave-run-out cross-validation procedure, where one experimental run is left-out for testing, while the data from the remaining runs is used for training the classification model. SVM was performed using a linear kernel for a more direct interpretation of the classification weights obtained during training. Furthermore, fMRI patterns were suggested to reflect somatotopic organizations, thus we expected to observe spatial clustering of voxel preferences in the mapping of the SVM weights. SVM regularization was further used to account for MVPA feature outliers during training, which would otherwise risk overfitting the classification model and reduce model generalization (i.e., produce low classification of the testing set). Regularization in the SMO algorithm is operationalized by the Karush-Kuhn-Tucker (KKT) conditions. We used 5% for KKT, which indicates the ratio of trials allowed to be misclassified during model training. Group-level statistics of the classification accuracies were performed against averaged permutation chance-level using two-tailed t-tests. Multiple comparisons correction (i.e., multiple ROIs) was done using FDR (q < 0.05).

Searchlight MVPA

In order to further validate the ROI + RFE approach, we conducted a second MVPA approach based on a moving spherical cortical ROI selection 25 . The searchlight allows us to determine whether multivoxel patterns are local. In contrast to the ROI + RFE approach, the searchlight approach is not influenced by the boundaries of the anatomical ROIs. It explores local patterns of fMRI activations by selecting neighboring voxels within a spherical mask (here, 7 mm radius, thus 3 voxels in every direction plus its centroid was used). This spherical selection was moved across the gray matter ribbon of the cortex.

Classification was performed using linear discriminant analysis (LDA) 40 , which allows massive parallel classifications in a short period of time, enabling the statistical validation of the method using label permutations (100 permutations). LDA and SVM have similar classification accuracies when the number of features is relatively low, as it is normally the case in the searchlight method 41 .

Classification validation was based on a leave-run-out cross-validation procedure. Group-level statistics of averaged classification accuracies (across cross-validation splits) were performed against permutation chance-level (theoretical chance-level is 0.5 since all classifications were binary) using two-tailed t-tests. Multiple comparisons correction (i.e., multiple searchlight locations) was done using FDR (q < 0.05). A possible pitfall of volumetric searchlight is that the set of voxels selected in the same searchlight sphere may be close to each other in volumetric distance but far from each other in topographical distance 42 . This issue is particularly problematic when voxels from the frontal lobe and the temporal lobe are considered within the same searchlight sphere. To overcome this possible lack of spatial specificity of the volumetric searchlight analysis, we employed a voxel selection method based on cluster contiguity in Matlab: first, each searchlight sphere was masked by the gray-matter mask; then a 3D clustering analysis was computed ( bwconncomp, with connectivity parameter = 26); finally, when more than one cluster was found in the masked searchlight selection, voxels from clusters not belonging to the respective centroid cluster were removed from the current searchlight sphere. This assured that voxels from topographically distant portions of the cortical mesh were not mixed.

Beta time-series functional connectivity

Finally, we explored functional connectivity from seed regions involved in speech articulation. Functional clusters obtained for lip and tongue control in M1 were used because these somatotopic representations were expected to enable localization at the individual subject level. Functional connectivity was assessed using beta time-series correlations 43 . This measure of functional connectivity focuses on the level of fMRI activation summarized per trial and voxel, while neglecting temporal oscillations in the fMRI time-series. Given our relatively slow sampling rate (TR = 2 seconds), this method was chosen over other functional connectivity methods that depend on the fMRI time-series. Pearson correlations were employed to assess the level of synchrony between the average beta time-series of the voxels within each seed region and each brain voxel. This method produces a correlation map (−1 to 1) per seed and participant, converted to z-scores with Fisher’s transformation. Group level statistics were assessed using a two-sided t-test against the null hypothesis of no correlation between the seed regions and brain voxels. Exploring beta time-series correlations in a sub-set of trials from a particular experimental condition relates to the specificity of the functional connectivity measure for that condition independently 43 . Finally, articulatory-specific and phonatory-specific connections were studied using statistical contrasts between the z-scores of different conditions. Hence, articulatory-specific connections of the tongue articulator are those for which the z-scores obtained from tongue-gesture conditions are significantly higher than from lip-gesture conditions, and vice-versa. Phonatory-specific connections of the tongue seed are those for which the z-scores obtained from tongue-voiced conditions are significantly higher than tongue-whispered conditions, and the same for the lip seed.

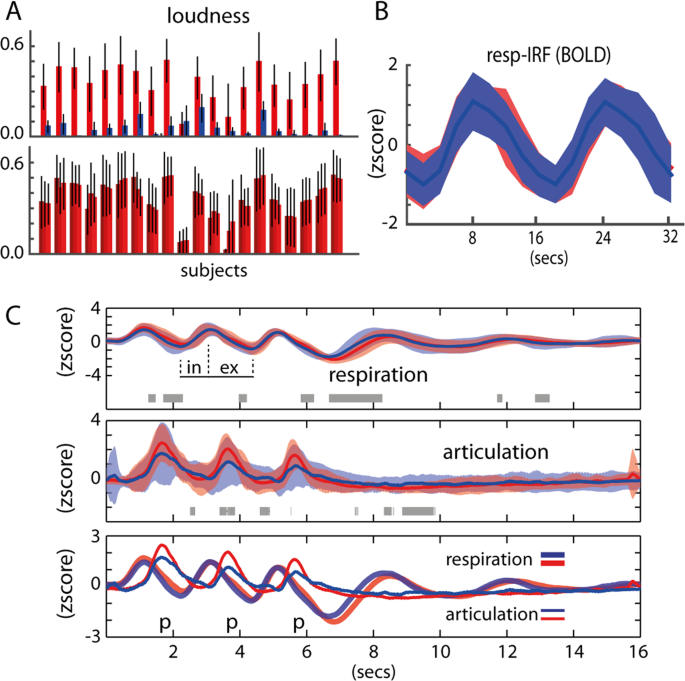

Behavioral and physiological measures

Chest volume was predictive of speech onset, regardless of the task (i.e., voiced or whispered speech). Measurement of articulatory movements using a pressure sensor placed under the chin of participants was also predictive of speech onset regardless of the speech task (Fig. 2 ). No significant differences were found between voiced and whispered speech at the group level (FDR q > 0.05) at any point of the averaged time-course of the trials, although in a few time points uncorrected p < 0.05 was found (Fig. 2C , gray shading horizontal bar). Overall, breathing and articulatory patterns were very similar across the production tasks and items. Both at the individual participant level and at the group level, lung volume peak preceded articulation onset. Speech sound recordings obtained synchronously with physiological changes matched the measures of articulatory movements. Speech was successfully synchronized in all participants with our fMRI protocol, i.e., speech was produced within the desired silent periods (900 ms) between consecutive TRs. As expected, voiced speech was significantly louder than whispered speech (p = 8.58 × 10 –10 , Fig. 2A upper panel). The three production events composing a single trial were consistent in loudness, hence variation was small across voiced events (3-way anova, p = 0.99, Fig. 2A lower panel) and the whispered task (p = 0.97). In most participants, the formant F1 extracted from the vowel segments was higher for whispered compared to voiced speech (Fig. 1C ) but not for F2. Overall and importantly, we confirmed that the voiced and whispered speech tasks were well-matched for respiration and articulation (Fig. 2C ).

Behavioral results. ( A ) Upper: Loudness per task across all participants. Red is voiced and blue is whispered speech. Bottom: Loudness per item repetition (3 items are produced per trial) across all participants for the voiced speech task. ( B ) Respiratory impulse response function (resp-IRF) using the average BOLD fluctuation within all cortical voxels. ( C ) Group results of the respiratory and articulatory recordings, red is voiced and blue is whispered speech: upper: voiced and whispered respiratory fluctuations (standard errors from the mean is shaded); gray horizontal bars refer to t-test differences (p < 0.05); ‘in’ and ‘ex’ depict inhale and exhale periods, respectively; middle: voiced and whispered articulatory fluctuations; bottom: combined respiratory and articulatory fluctuations.